Raul Benitez

IARS SegNet: Interpretable Attention Residual Skip connection SegNet for melanoma segmentation

Oct 31, 2023

Abstract: Skin lesion segmentation plays a crucial role in the computer-aided diagnosis of melanoma. Deep learning models have shown promise in accurately segmenting skin lesions, but their adoption in real-life clinical settings is hindered by their inherent black-box nature. In domains as critical as healthcare, interpretability is not merely a feature but a fundamental requirement for model adoption. This paper proposes IARS SegNet, an advanced segmentation framework built upon the SegNet baseline model. Our approach adds three components to the baseline SegNet architecture: skip connections, residual convolutions, and a global attention mechanism. These elements emphasize clinically relevant regions, particularly the contours of skin lesions. The skip connections enhance the model's capacity to learn intricate contour details, while the residual convolutions allow for a deeper model that still preserves essential image features. The global attention mechanism extracts refined feature maps from each convolutional and deconvolutional block, thereby improving the model's interpretability. Together, these enhancements highlight critical regions, foster better understanding, and lead to more accurate skin lesion segmentation for melanoma diagnosis.
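The three components named in the abstract can be illustrated with a toy sketch in plain Python. This is not the IARS SegNet implementation: the abstract does not specify layer shapes or the exact attention formulation, so the function names (`residual_block`, `skip_connection`, `global_attention`), the list-based "feature maps", and the sigmoid-of-global-mean gate are all assumptions chosen only to show the general idea of each mechanism.

```python
import math

def residual_block(x, transform):
    # Residual connection: add the block's input back onto its output,
    # so the original features are preserved even in a deep stack.
    fx = transform(x)
    return [a + b for a, b in zip(x, fx)]

def skip_connection(decoder_channels, encoder_channels):
    # Skip connection: concatenate encoder channels onto decoder channels
    # so fine detail (e.g. contours) reaches the decoder directly.
    return decoder_channels + encoder_channels

def global_attention(channels):
    # Hypothetical global gate (an assumption, not the paper's formula):
    # one weight per channel, computed as the sigmoid of the channel's mean.
    weights = [1.0 / (1.0 + math.exp(-sum(c) / len(c))) for c in channels]
    gated = [[w * v for v in c] for w, c in zip(weights, channels)]
    return gated, weights

# Toy data: each "channel" is a flat list of activations.
encoder = [[1.0, 2.0, 3.0]]
decoder = [residual_block([0.5, -0.5, 0.0], lambda x: [2 * v for v in x])]
merged = skip_connection(decoder, encoder)
gated, weights = global_attention(merged)
```

In this sketch the per-channel `weights` are the quantity one would inspect for interpretability, since they indicate which feature maps the gate emphasizes.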

Interpreting CNN Predictions using Conditional Generative Adversarial Networks

Jan 19, 2023

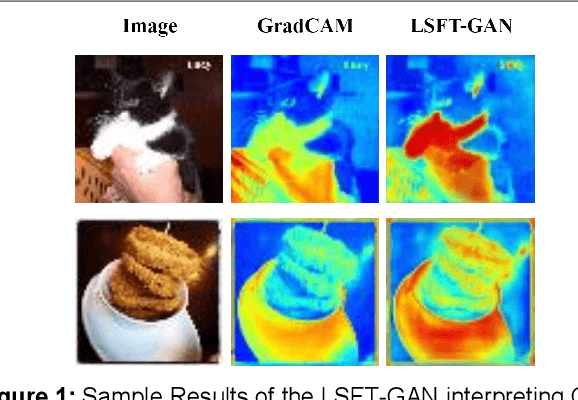

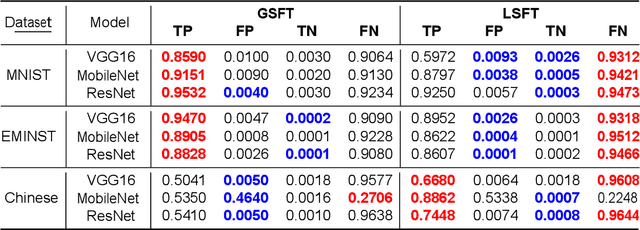

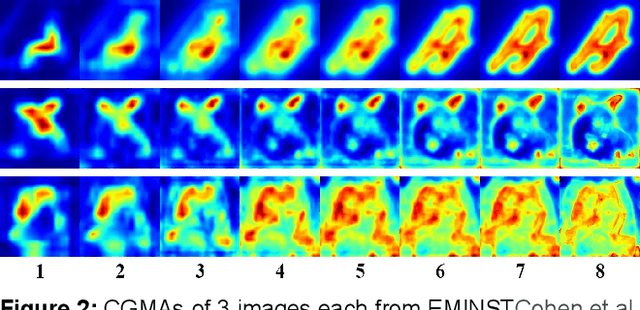

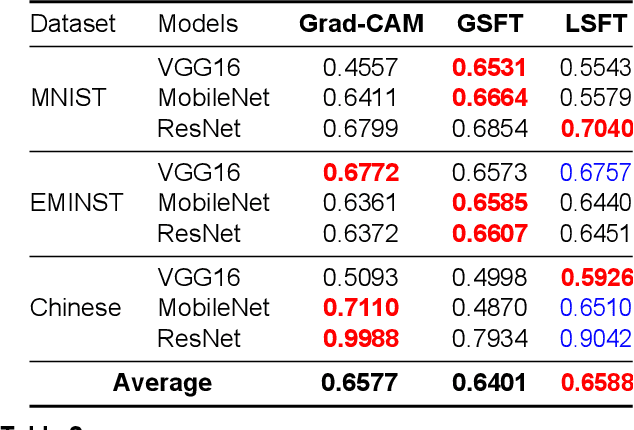

Abstract: We propose a novel method that trains a conditional Generative Adversarial Network (GAN) to generate visual interpretations of a Convolutional Neural Network (CNN). To comprehend a CNN, the GAN is trained with information on how the CNN processes an image when making predictions. Supplying that information poses two main challenges: how to represent it in a form that can be fed to the GAN, and how to feed that representation to the GAN effectively. To address these issues, we developed a suitable representation of CNN architectures by cumulatively averaging intermediate interpretation maps. We also propose two alternative approaches for feeding the representations to the GAN and for choosing an effective training strategy. Our approach learned the general aspects of CNNs and was agnostic to datasets and CNN architectures. The study includes both qualitative and quantitative evaluations and compares the proposed GANs with state-of-the-art approaches. By interpreting the proposed GAN itself, we found that the initial and final layers of a CNN are equally crucial for interpreting the CNN. We believe that training a GAN to interpret CNNs opens the door to improved interpretations by leveraging fast-paced advances in deep learning. The code used for experimentation is publicly available at https://github.com/Akash-guna/Explain-CNN-With-GANS
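The "cumulative averaging of intermediate interpretation maps" mentioned in the abstract can be sketched as a running mean over per-layer maps. This is a minimal illustration, not the authors' code: it assumes the maps have already been resized to a common resolution, and the function name `cumulative_average` and the toy values are hypothetical.

```python
def cumulative_average(maps):
    """Return the running mean after each map (all maps share one shape)."""
    running = None
    history = []
    for k, m in enumerate(maps, start=1):
        if running is None:
            # First map: the average is the map itself (copied).
            running = [row[:] for row in m]
        else:
            # Incremental mean update: avg_k = avg_{k-1} + (m_k - avg_{k-1}) / k
            running = [
                [r + (v - r) / k for r, v in zip(r_row, m_row)]
                for r_row, m_row in zip(running, m)
            ]
        history.append(running)
    return history

# Toy 2x2 interpretation maps for three layers (illustrative values only).
layer_maps = [
    [[0.0, 1.0], [1.0, 0.0]],
    [[1.0, 1.0], [0.0, 0.0]],
    [[2.0, 1.0], [2.0, 3.0]],
]
history = cumulative_average(layer_maps)
```

Each entry of `history` summarizes all layers up to that depth, which gives a fixed-size representation regardless of how many layers the CNN has.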
