Rémi Genet

SigGate: Enhancing Recurrent Neural Networks with Signature-Based Gating Mechanisms

Feb 13, 2025

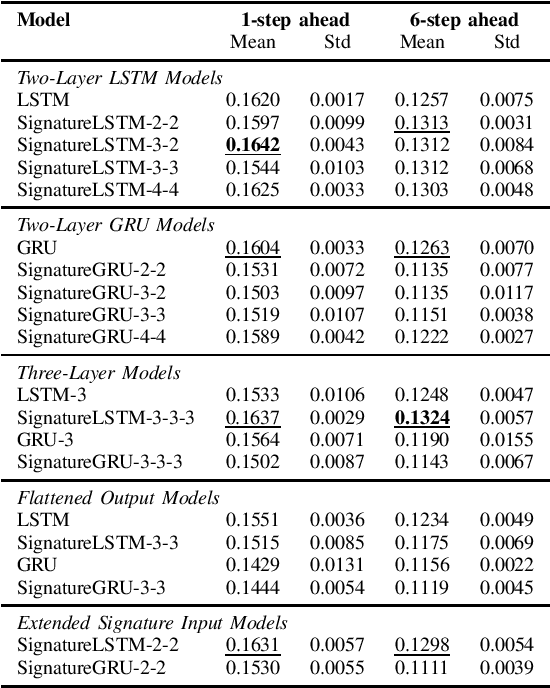

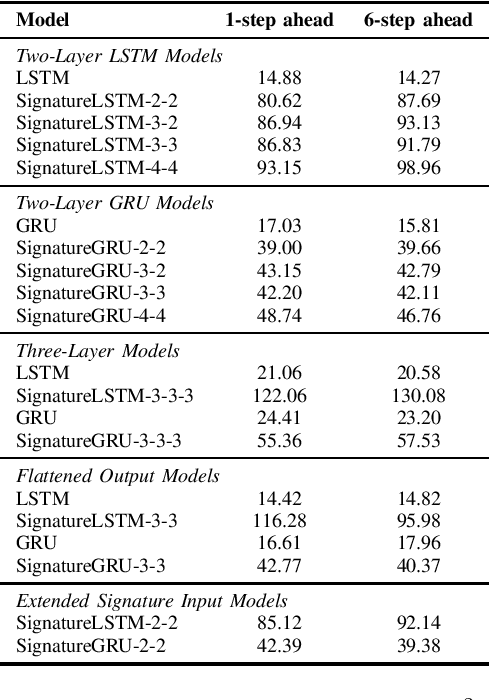

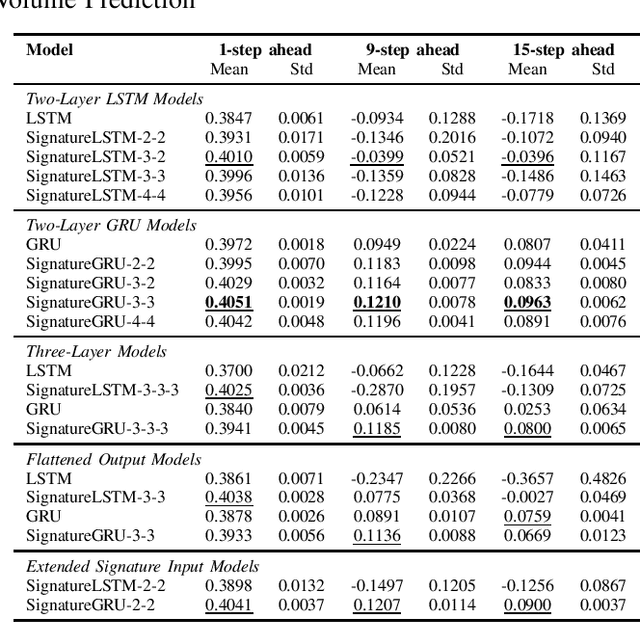

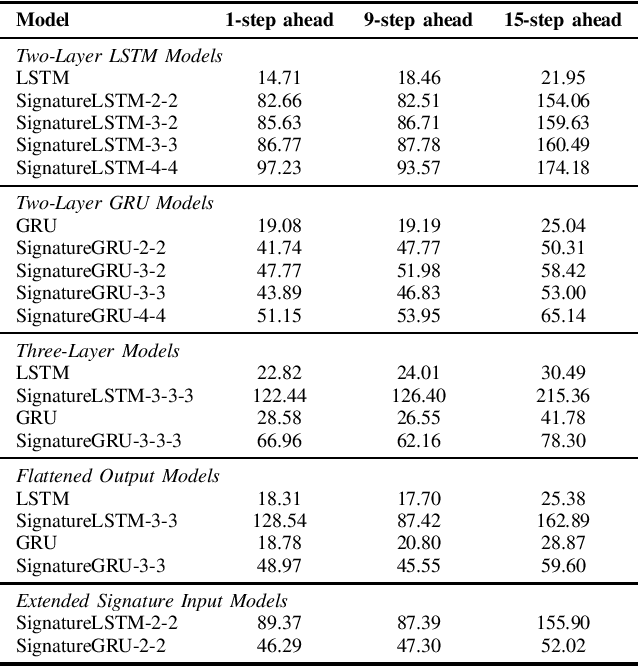

Abstract: In this paper, we propose a novel approach that enhances recurrent neural networks (RNNs) by incorporating path signatures into their gating mechanisms. Our method modifies both Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) architectures by replacing their forget and reset gates, respectively, with learnable path signatures. These signatures, which capture the geometric features of the entire path history, provide a richer context for controlling information flow through the network's memory. This modification allows the networks to make memory decisions based on the full historical context rather than just the current input and state. Through experimental studies, we demonstrate that our Signature-LSTM (SigLSTM) and Signature-GRU (SigGRU) models outperform their traditional counterparts across various sequential learning tasks. By leveraging path signatures in recurrent architectures, this method offers new opportunities to enhance performance in time series analysis and forecasting applications.

Keras Sig: Efficient Path Signature Computation on GPU in Keras 3

Jan 14, 2025

Abstract: In this paper, we introduce Keras Sig, a high-performance Pythonic library designed to compute path signatures for deep learning applications. Built entirely in Keras 3, Keras Sig integrates seamlessly with the most widely used deep learning backends: PyTorch, JAX, and TensorFlow. Inspired by Kidger and Lyons (2021), we propose a novel approach that reshapes signature calculations to leverage GPU parallelism. This adjustment reduces training time by 55% and yields 5- to 10-fold improvements in direct signature computation compared to existing methods, while maintaining similar CPU performance. By relying on high-level tensor operations instead of low-level C++ code, Keras Sig significantly reduces the versioning and compatibility issues commonly encountered in deep learning libraries, while delivering superior or comparable performance across various hardware configurations. We demonstrate through extensive benchmarking that our approach scales efficiently with the length of input sequences and maintains competitive performance across various signature parameters, though it is bounded by memory constraints for very large signature dimensions.
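
For readers unfamiliar with path signatures, the following is a minimal sketch of a depth-2 signature for a piecewise-linear path, written with NumPy. It is not the Keras Sig API or the algorithm from the paper; the function name `signature_depth2` is hypothetical, and the only point it illustrates is that the computation can be expressed with cumulative sums and matrix products instead of an explicit loop over time steps.

```python
import numpy as np


def signature_depth2(path):
    """Depth-2 signature of a piecewise-linear path (illustrative sketch).

    `path` has shape (length, channels). Returns (s1, s2), where s1 has
    shape (channels,) and s2 has shape (channels, channels).
    Hypothetical helper, not part of any library.
    """
    path = np.asarray(path, dtype=float)
    # Increments between consecutive points: shape (length - 1, channels).
    d = np.diff(path, axis=0)
    # Level 1: the total displacement of the path.
    s1 = d.sum(axis=0)
    # Exclusive prefix sums: displacement accumulated before each segment.
    prefix = np.cumsum(d, axis=0) - d
    # Level 2 via Chen's identity for linear segments:
    # sum_k (prefix_k outer d_k) + 0.5 * sum_k (d_k outer d_k).
    s2 = prefix.T @ d + 0.5 * (d.T @ d)
    return s1, s2


# Example: an L-shaped path in the plane.
s1, s2 = signature_depth2([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]])
levy_area = 0.5 * (s2[0, 1] - s2[1, 0])
```

The antisymmetric part of the second level gives the Lévy area of the path, one example of the geometric features that signatures capture. Higher depths follow the same pattern but grow as `channels ** depth`, which is consistent with the memory constraints mentioned in the abstract.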
