Pouyan Ahmadi

A Robust Comparison of the KDDCup99 and NSL-KDD IoT Network Intrusion Detection Datasets Through Various Machine Learning Algorithms

Dec 31, 2019

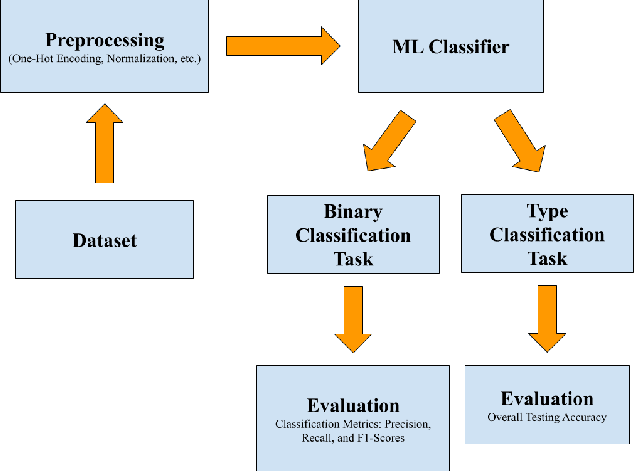

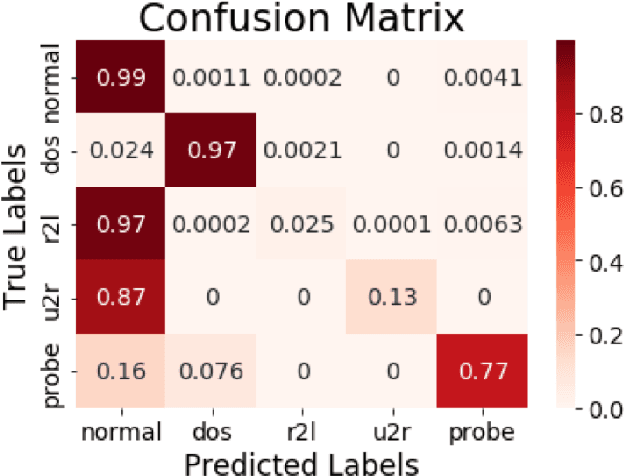

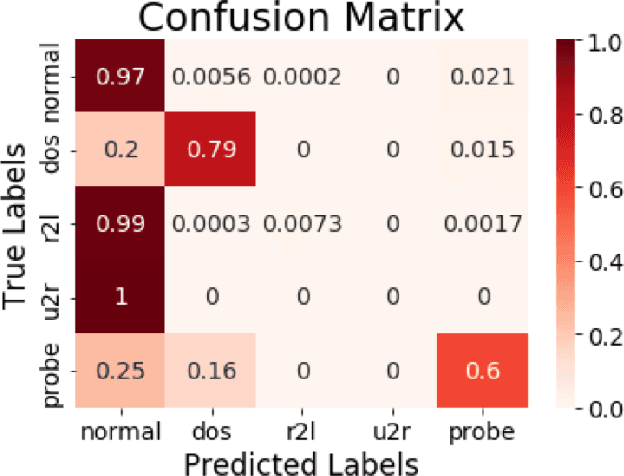

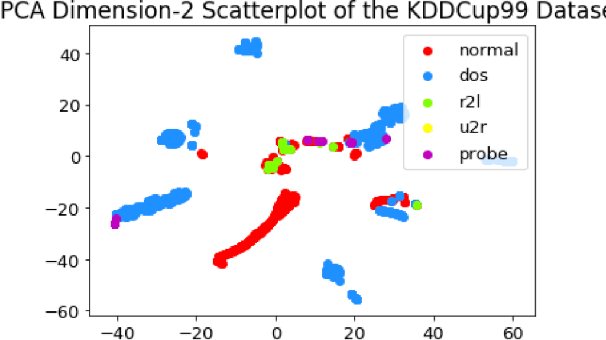

Abstract: Intrusion attacks on IoT networks have grown exponentially in recent years, creating an immediate need for sophisticated intrusion detection systems (IDSs). The vast majority of current IDSs are data-driven, so the quality of the data acquired from IoT network traffic is one of the most important aspects of this area of research. Two of the most cited intrusion detection datasets are KDDCup99 and NSL-KDD. The main goal of our project was to conduct a robust comparison of the two datasets by evaluating the performance of various Machine Learning (ML) classifiers trained on them, using a larger set of classification metrics than previous researchers. We conclude that the NSL-KDD dataset is of higher quality than the KDDCup99 dataset: classifiers trained on NSL-KDD were on average 20.18% less accurate, because the classifiers trained on KDDCup99 exhibited a bias towards the redundancies within it, which allowed them to achieve higher accuracies.
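The effect of redundant records on a reported accuracy can be illustrated with a small sketch. This is not the authors' pipeline: the records, labels, and predictions below are hypothetical toy values, and `binary_metrics` and `evaluate` are illustrative helper names. It only shows how scoring the same fixed predictions with and without duplicate records changes accuracy, and why reporting several metrics alongside accuracy is informative.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def evaluate(records):
    """Score records of the form (features, true_label, predicted_label)."""
    y_true = [r[1] for r in records]
    y_pred = [r[2] for r in records]
    return binary_metrics(y_true, y_pred)


# Hypothetical toy data: one "normal" record repeated six times (label 0),
# plus two distinct attack records (label 1), one of which is misclassified.
records = (
    [(("tcp", "http"), 0, 0)] * 6
    + [(("udp", "dns"), 1, 0), (("icmp", "ecr_i"), 1, 1)]
)

with_duplicates = evaluate(records)
# dict.fromkeys keeps the first occurrence of each record, in order.
without_duplicates = evaluate(list(dict.fromkeys(records)))

print("with duplicates:   ", with_duplicates)
print("without duplicates:", without_duplicates)
```

In this toy case the repeated, easily classified record raises accuracy, while precision, recall, and F1 for the attack class are unchanged by deduplication, which is one reason a wider set of metrics gives a fuller picture than accuracy alone.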

Assessment Formats and Student Learning Performance: What is the Relation?

Nov 15, 2017

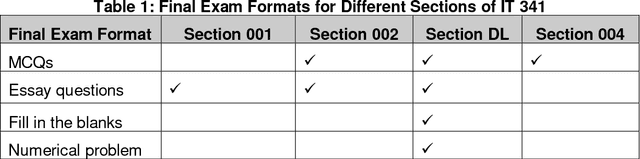

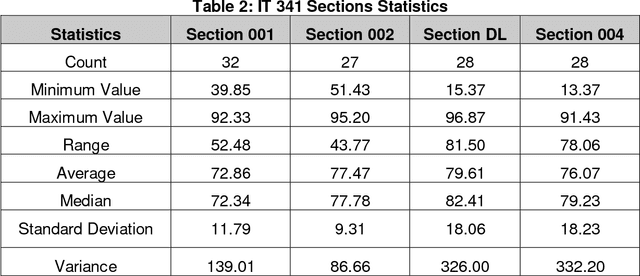

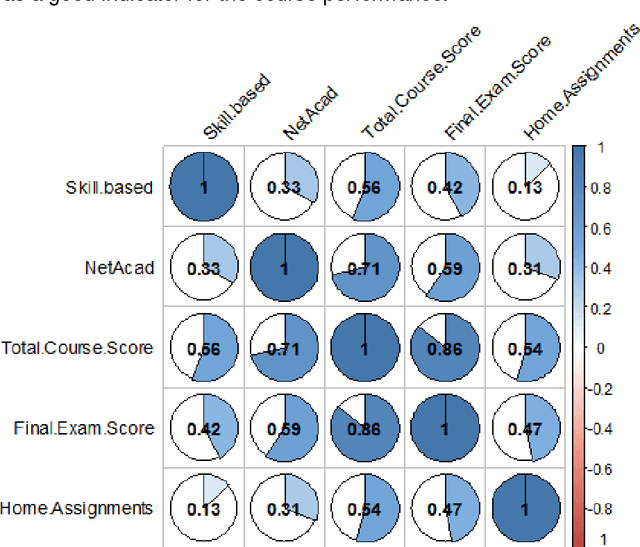

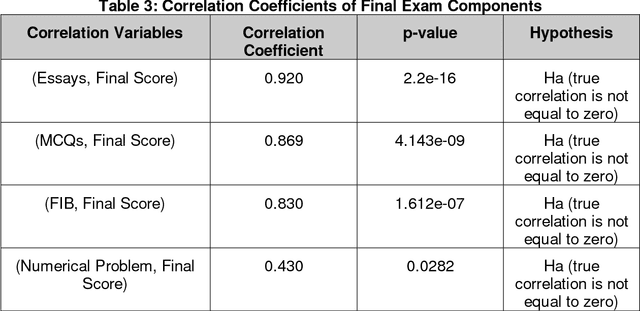

Abstract: Although compelling assessments have been examined in recent years, more studies are required to better understand the ways in which assessment techniques affect the student learning process. Most educational research in this area does not consider demographic data, differing methodologies, or a notable sample size. To address these drawbacks, the objective of our study is to analyse student learning outcomes across multiple assessment formats for a web-facilitated in-class section and an asynchronous online class of a core data communications course in the Undergraduate IT program of the Information Sciences and Technology (IST) Department at George Mason University (GMU). In this study, students were evaluated based on course assessments such as home and lab assignments, skill-based assessments, and traditional midterm and final exams across all four sections of the course. All sections have equivalent content, assessments, and teaching methodologies. Student demographics such as exam type and location preferences are considered to determine whether they have any impact on students' learning approach. A large amount of data from the learning management system (LMS), Blackboard (BB) Learn, had to be examined to compare the results of several assessment outcomes for all students within their respective section and amongst students of other sections. To investigate the effect of dissimilar assessment formats on student performance, we correlated individual question formats with the overall course grade. The results show that collective assessment formats allow students to be effective in demonstrating their knowledge.
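As a rough illustration of correlating question formats with the overall course grade, the sketch below computes a Pearson correlation coefficient per format. The abstract does not state which correlation measure the study used, so Pearson is an assumption here, and the format names and scores are hypothetical values, not data from the study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (spread_x * spread_y)


# Hypothetical per-student scores (percent) for three question formats,
# listed in the same student order as the overall course grades.
scores_by_format = {
    "multiple_choice": [72, 85, 90, 64, 78],
    "short_answer": [65, 88, 92, 58, 80],
    "lab_skill": [80, 82, 95, 70, 75],
}
overall_grade = [70, 86, 93, 62, 79]

correlations = {
    fmt: pearson(scores, overall_grade)
    for fmt, scores in scores_by_format.items()
}

for fmt, r in sorted(correlations.items(), key=lambda kv: -kv[1]):
    print(f"{fmt:16s} r = {r:.3f}")
```

A coefficient near 1 would indicate that scores on that format track the overall grade closely. With samples this small the values are only illustrative.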
