Pierre Tassel

An End-to-End Reinforcement Learning Approach for Job-Shop Scheduling Problems Based on Constraint Programming

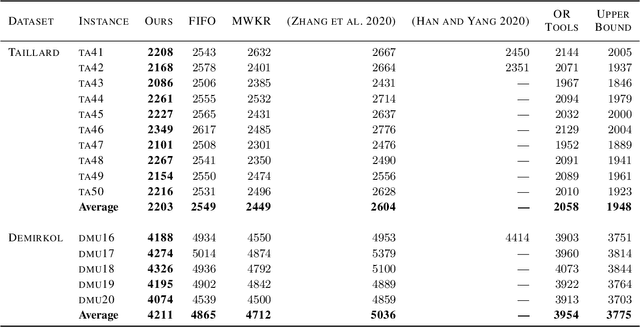

Jun 09, 2023

Abstract: Constraint Programming (CP) is a declarative programming paradigm that allows for modeling and solving combinatorial optimization problems, such as the Job-Shop Scheduling Problem (JSSP). While CP solvers manage to find optimal or near-optimal solutions for small instances, they do not scale well to large ones, i.e., they require long computation times or yield low-quality solutions. Therefore, real-world scheduling applications often resort to fast, handcrafted, priority-based dispatching heuristics to find a good initial solution and then refine it using optimization methods. This paper proposes a novel end-to-end approach to solving scheduling problems by means of CP and Reinforcement Learning (RL). In contrast to previous RL methods, tailored for a given problem by including procedural simulation algorithms, complex feature engineering, or handcrafted reward functions, our neural-network architecture and training algorithm merely require a generic CP encoding of some scheduling problem along with a set of small instances. Our approach leverages existing CP solvers to train an agent learning a Priority Dispatching Rule (PDR) that generalizes well to large instances, even from separate datasets. We evaluate our method on seven JSSP datasets from the literature, showing its ability to find higher-quality solutions for very large instances than obtained by static PDRs and by a CP solver within the same time limit.

Semiconductor Fab Scheduling with Self-Supervised and Reinforcement Learning

Feb 14, 2023

Abstract: Semiconductor manufacturing is a notoriously complex and costly multi-step process involving a long sequence of operations on expensive and quantity-limited equipment. Recent chip shortages and their impacts have highlighted the importance of semiconductors in the global supply chains and how reliant on those our daily lives are. Due to the investment cost, environmental impact, and time scale needed to build new factories, it is difficult to ramp up production when demand spikes. This work introduces a method to successfully learn to schedule a semiconductor manufacturing facility more efficiently using deep reinforcement and self-supervised learning. We propose the first adaptive scheduling approach to handle complex, continuous, stochastic, dynamic, modern semiconductor manufacturing models. Our method outperforms the traditional hierarchical dispatching strategies typically used in semiconductor manufacturing plants, substantially reducing each order's tardiness and time until completion. As a result, our method yields a better allocation of resources in the semiconductor manufacturing process.

A Reinforcement Learning Environment For Job-Shop Scheduling

Apr 08, 2021

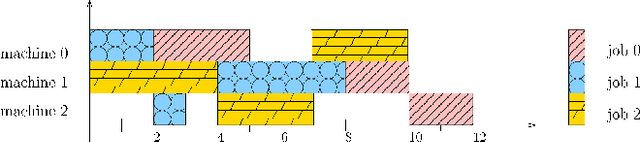

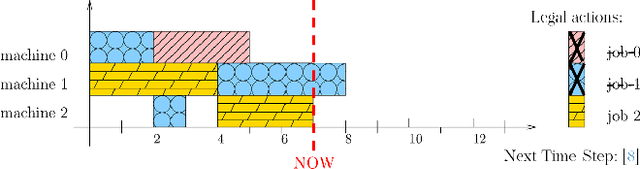

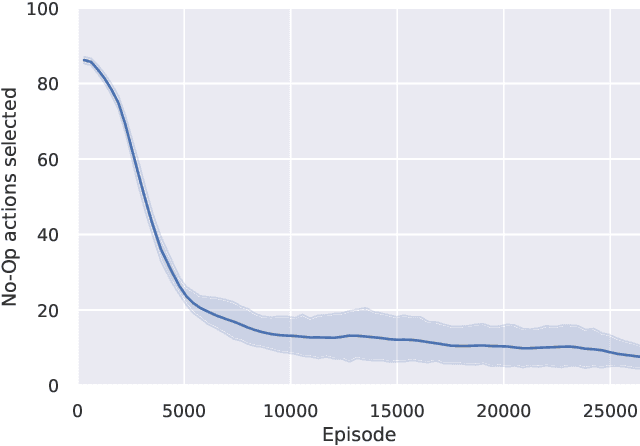

Abstract: Scheduling is a fundamental task occurring in various automated systems applications, e.g., optimal schedules for machines on a job shop allow for a reduction of production costs and waste. Nevertheless, finding such schedules is often intractable and cannot be achieved by Combinatorial Optimization Problem (COP) methods within a given time limit. Recent advances of Deep Reinforcement Learning (DRL) in learning complex behavior enable new COP application possibilities. This paper presents an efficient DRL environment for Job-Shop Scheduling -- an important problem in the field. Furthermore, we design a meaningful and compact state representation as well as a novel, simple dense reward function, closely related to the sparse make-span minimization criteria used by COP methods. We demonstrate that our approach significantly outperforms existing DRL methods on classic benchmark instances, coming close to state-of-the-art COP approaches.
