Phuong Quynh Le

Invariant Learning with Annotation-free Environments

Apr 22, 2025

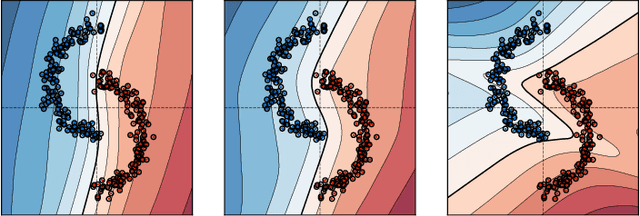

Abstract: Invariant learning is a promising approach to improve domain generalization compared to Empirical Risk Minimization (ERM). However, most invariant learning methods rely on the assumption that training examples are pre-partitioned into different known environments. We instead infer environments without the need for additional annotations, motivated by observations of the properties within the representation space of a trained ERM model. We show the preliminary effectiveness of our approach on the ColoredMNIST benchmark, achieving performance comparable to methods requiring explicit environment labels and on par with an annotation-free method that imposes strong restrictions on the ERM reference model.

An XAI-based Analysis of Shortcut Learning in Neural Networks

Apr 22, 2025

Abstract: Machine learning models tend to learn spurious features, i.e., features that strongly correlate with target labels but are not causal. Existing approaches to mitigate models' dependence on spurious features work in some cases, but fail in others. In this paper, we systematically analyze how and where neural networks encode spurious correlations. We introduce the neuron spurious score, an XAI-based diagnostic measure to quantify a neuron's dependence on spurious features. We analyze both convolutional neural networks (CNNs) and vision transformers (ViTs) using architecture-specific methods. Our results show that spurious features are partially disentangled, but the degree of disentanglement varies across model architectures. Furthermore, we find that the assumptions behind existing mitigation methods are incomplete. Our results lay the groundwork for the development of novel methods to mitigate spurious correlations and make AI models safer to use in practice.

Out of spuriousity: Improving robustness to spurious correlations without group annotations

Jul 20, 2024

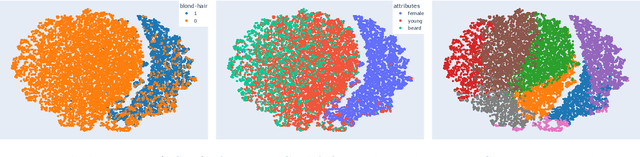

Abstract: Machine learning models are known to learn spurious correlations, i.e., features that are strongly related to class labels but have no causal relation to them. Relying on those correlations leads to poor performance in the data groups without these correlations and to poor generalization. To improve the robustness of machine learning models to spurious correlations, we propose an approach to extract a subnetwork from a fully trained network that does not rely on spurious correlations. The subnetwork is found under the assumption that data points with the same spurious attribute will be close to each other in the representation space when training with ERM; we then employ supervised contrastive loss in a novel way to force models to unlearn the spurious connections. The increase in the worst-group performance of our approach strengthens the hypothesis that there exists a subnetwork in a fully trained dense network that is responsible for using only invariant features in classification tasks, thereby erasing the influence of spurious features even in the setup of multiple spurious attributes and no prior knowledge of attribute labels.
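For readers unfamiliar with the worst-group metric mentioned in this abstract, the following is a minimal sketch of how it is commonly computed, assuming groups are defined as (class label, spurious attribute) pairs. The function name `worst_group_accuracy` and the toy data are hypothetical and are not taken from the paper.

```python
from collections import defaultdict

def worst_group_accuracy(y_true, y_pred, spurious):
    """Return (worst accuracy, per-group accuracies).

    Assumption: a group is the pair (class label, spurious attribute).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, p, s in zip(y_true, y_pred, spurious):
        group = (y, s)
        total[group] += 1
        correct[group] += int(y == p)
    per_group = {g: correct[g] / total[g] for g in total}
    return min(per_group.values()), per_group

# Toy data: the predictor simply copies the spurious attribute.
y_true   = [0, 0, 0, 0, 1, 1, 1, 1]
spurious = [0, 0, 0, 1, 1, 1, 1, 0]
y_pred   = [0, 0, 0, 1, 1, 1, 1, 0]

worst, per_group = worst_group_accuracy(y_true, y_pred, spurious)
average = sum(int(y == p) for y, p in zip(y_true, y_pred)) / len(y_true)
print(average, worst)
```

In this toy case the average accuracy looks acceptable, while the groups in which label and spurious attribute disagree are misclassified entirely. That gap is what worst-group evaluation is meant to expose.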

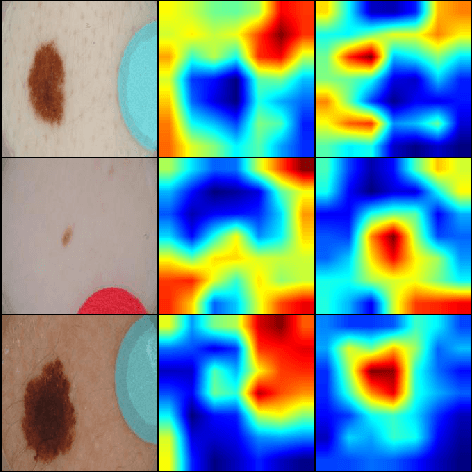

Is Last Layer Re-Training Truly Sufficient for Robustness to Spurious Correlations?

Aug 01, 2023

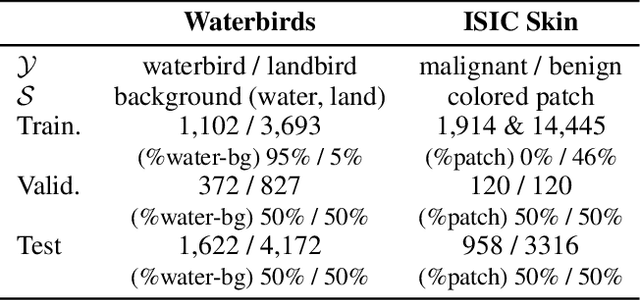

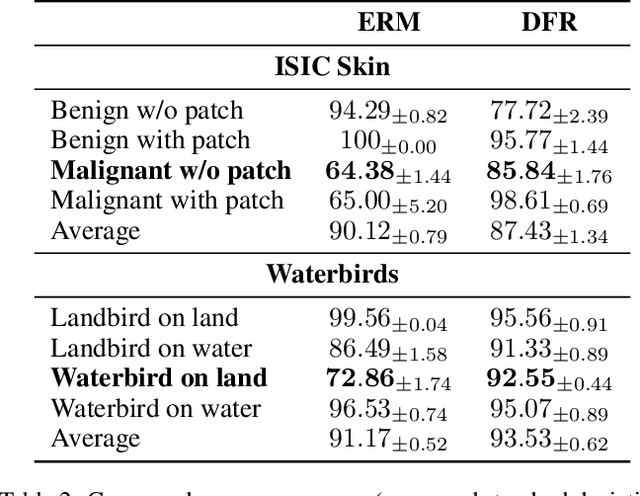

Abstract: Models trained with empirical risk minimization (ERM) are known to learn to rely on spurious features, i.e., their prediction is based on undesired auxiliary features which are strongly correlated with class labels but lack causal reasoning. This behavior particularly degrades accuracy in groups of samples of the correlated class that are missing the spurious feature or samples of the opposite class but with the spurious feature present. The recently proposed Deep Feature Reweighting (DFR) method improves the accuracy of these worst groups. Based on the main argument that ERM models can learn core features sufficiently well, DFR only needs to retrain the last layer of the classification model with a small group-balanced data set. In this work, we examine the applicability of DFR to realistic data in the medical domain. Furthermore, we investigate the reasoning behind the effectiveness of last-layer retraining and show that even though DFR has the potential to improve the accuracy of the worst group, it remains susceptible to spurious correlations.
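The "small group-balanced data set" used for last-layer retraining can be illustrated with a short sketch. It assumes that group annotations (class label plus spurious attribute) are available for a held-out set and that balancing is done by subsampling every group to the size of the smallest one. The helper `group_balanced_indices` is hypothetical and is not the DFR reference implementation.

```python
import random
from collections import defaultdict

def group_balanced_indices(labels, spurious, seed=0):
    """Subsample indices so every (label, spurious) group has equal size.

    Assumption: balancing is done by downsampling to the smallest group.
    """
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for i, (y, s) in enumerate(zip(labels, spurious)):
        by_group[(y, s)].append(i)
    n = min(len(indices) for indices in by_group.values())
    chosen = []
    for group in sorted(by_group):
        chosen.extend(rng.sample(by_group[group], n))
    return sorted(chosen)

# Toy held-out set: minority groups are (0, 1) and (1, 0).
labels   = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
spurious = [0, 0, 0, 0, 1, 1, 1, 1, 1, 0]
idx = group_balanced_indices(labels, spurious)
print(idx)
```

Under these assumptions, the frozen feature extractor would be applied to the selected samples and only the final linear classifier refit on the resulting features.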
