Pavel N. Krivitsky

Multiview graph dual-attention deep learning and contrastive learning for multi-criteria recommender systems

Feb 26, 2025

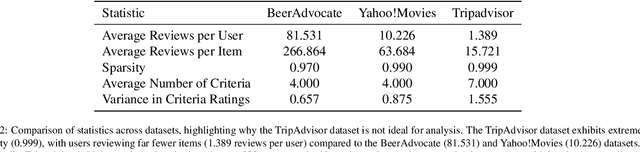

Abstract: Recommender systems leveraging deep learning models have been crucial for assisting users in selecting items aligned with their preferences and interests. However, a significant challenge persists in single-criteria recommender systems, which often overlook the diverse attributes of items; Multi-Criteria Recommender Systems (MCRS) address this limitation. Existing MCRS approaches use a shared embedding vector for multi-criteria item ratings but have struggled to capture the nuanced relationships between users and items based on specific criteria. In this study, we present a novel representation for MCRS based on a multi-edge bipartite graph, where each edge represents one criterion rating of an item by a user, together with Multiview Dual Graph Attention Networks (MDGAT). MDGAT accounts for all relations between users and items, both local (criterion-based) and global (multi-criteria). Additionally, we define anchor points in each view based on similarity and employ local and global contrastive learning to distinguish between positive and negative samples across each view and the entire graph. We evaluate our method on two real-world datasets and assess its performance based on item rating predictions. The results demonstrate that our method achieves higher accuracy than the baseline method for predicting item ratings on the same datasets. MDGAT effectively captures the local and global impact of neighbours and the similarity between nodes.
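The multi-edge bipartite representation described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the rating tuples, the criterion names, and the helper functions `build_views` and `global_edges` are all hypothetical, chosen only to show how one edge per criterion yields a local view per criterion and a global view over all criteria.

```python
from collections import defaultdict

# Hypothetical toy data: (user, item, criterion, rating).
# Each tuple is one edge of the multi-edge bipartite graph.
RATINGS = [
    ("u1", "i1", "story", 4.0),
    ("u1", "i1", "acting", 5.0),
    ("u2", "i1", "story", 3.0),
    ("u2", "i2", "acting", 2.0),
]


def build_views(ratings):
    """Group edges by criterion: one local (criterion-based) view each."""
    views = defaultdict(dict)
    for user, item, criterion, rating in ratings:
        views[criterion][(user, item)] = rating
    return dict(views)


def global_edges(views):
    """Merge all views: each user-item pair keeps its ratings per criterion."""
    merged = defaultdict(dict)
    for criterion, edges in views.items():
        for pair, rating in edges.items():
            merged[pair][criterion] = rating
    return dict(merged)


views = build_views(RATINGS)
merged = global_edges(views)
```

Here `views["story"]` holds only the edges rated on the hypothetical "story" criterion, while `merged[("u1", "i1")]` collects every criterion rating that user `u1` gave item `i1`.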

Bayesian graph convolutional neural networks via tempered MCMC

Apr 17, 2021

Abstract: Deep learning models, such as convolutional neural networks, have long been applied to image and multi-media tasks, particularly those with structured data. More recently, there has been more attention to unstructured data that can be represented via graphs. These types of data are often found in health and medicine, social networks, and research data repositories. Graph convolutional neural networks have recently gained attention in the field of deep learning because they take advantage of graph-based data representation with automatic feature extraction via convolutions. Given the popularity of these methods in a wide range of applications, robust uncertainty quantification is vital. This remains a challenge for large models and unstructured datasets. Bayesian inference provides a principled and robust approach to uncertainty quantification of model parameters for deep learning models. Although Bayesian inference has been used extensively elsewhere, its application to deep learning remains limited due to the computational requirements of Markov Chain Monte Carlo (MCMC) methods. Recent advances in parallel computing and advanced proposal schemes in sampling, such as those incorporating gradients, have allowed Bayesian deep learning methods to be implemented. In this paper, we present Bayesian graph deep learning techniques that employ state-of-the-art methods such as tempered MCMC sampling and advanced proposal schemes. Our results show that Bayesian graph convolutional methods can provide accuracy similar to advanced learning methods while providing a better alternative for robust uncertainty quantification for key benchmark problems.
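As a rough illustration of tempered MCMC sampling, the sketch below runs a generic parallel-tempering (replica-exchange) sampler on a one-dimensional bimodal target. It is an assumption-laden toy, not the paper's method: the target `log_target`, the temperature ladder, the step size, and the function `parallel_tempering` are all hypothetical, and a random-walk proposal stands in for the gradient-based proposals the abstract mentions.

```python
import math
import random


def log_target(x):
    """Log density (up to a constant) of a toy mixture with modes at -3 and 3."""
    a = -0.5 * ((x + 3.0) / 0.7) ** 2
    b = -0.5 * ((x - 3.0) / 0.7) ** 2
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def parallel_tempering(n_iters, temps=(1.0, 2.0, 4.0, 8.0), step=1.0, seed=0):
    """Return the samples of the coldest replica (temperature 1)."""
    rng = random.Random(seed)
    states = [0.0] * len(temps)
    cold_samples = []
    for _ in range(n_iters):
        # Within-replica random-walk Metropolis on the tempered target pi(x)^(1/T).
        for i, temp in enumerate(temps):
            proposal = states[i] + rng.gauss(0.0, step)
            log_alpha = (log_target(proposal) - log_target(states[i])) / temp
            if rng.random() < math.exp(min(0.0, log_alpha)):
                states[i] = proposal
        # Propose swapping the states of one random pair of adjacent replicas.
        i = rng.randrange(len(temps) - 1)
        log_swap = (1.0 / temps[i] - 1.0 / temps[i + 1]) * (
            log_target(states[i + 1]) - log_target(states[i])
        )
        if rng.random() < math.exp(min(0.0, log_swap)):
            states[i], states[i + 1] = states[i + 1], states[i]
        cold_samples.append(states[0])
    return cold_samples


samples = parallel_tempering(20000)
```

The hotter replicas see a flattened target and cross between modes easily; the swap step lets those crossings reach the coldest replica, which is the one whose samples approximate the original target.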

Revisiting Bayesian Autoencoders with MCMC

Apr 13, 2021

Abstract: Autoencoders gained popularity in the deep learning revolution given their ability to compress data and provide dimensionality reduction. Although prominent deep learning methods have been used to enhance autoencoders, the need to provide robust uncertainty quantification remains a challenge. This has been addressed with variational autoencoders so far. Bayesian inference via MCMC methods has faced limitations, but recent advances in parallel computing and advanced proposal schemes that incorporate gradients have opened routes less travelled. In this paper, we present Bayesian autoencoders powered by MCMC sampling, implemented using parallel computing and a Langevin-gradient proposal scheme. Our proposed Bayesian autoencoder provides similar performance accuracy when compared to related methods from the literature, with the additional feature of robust uncertainty quantification in compressed datasets. This motivates further application of the Bayesian autoencoder framework for other deep learning models.
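To make the idea of a gradient-informed proposal concrete, the following sketch implements the standard Metropolis-adjusted Langevin algorithm on a one-dimensional Gaussian target. This is a textbook variant under stated assumptions, not the paper's autoencoder sampler: the target parameters, the step size, and the names `log_target`, `grad_log_target`, `log_q`, and `langevin_mh` are hypothetical.

```python
import math
import random

MU, SIGMA = 2.0, 1.0  # hypothetical target: Normal(MU, SIGMA^2)


def log_target(x):
    """Log density of the target, up to an additive constant."""
    return -0.5 * ((x - MU) / SIGMA) ** 2


def grad_log_target(x):
    """Gradient of the log density with respect to x."""
    return -(x - MU) / SIGMA ** 2


def log_q(x_to, x_from, step):
    """Log proposal density (up to a constant) of moving x_from -> x_to."""
    mean = x_from + 0.5 * step ** 2 * grad_log_target(x_from)
    return -0.5 * ((x_to - mean) / step) ** 2


def langevin_mh(n_samples, step=0.5, x0=0.0, seed=0):
    """Metropolis-Hastings with a Langevin (gradient-drift) proposal."""
    rng = random.Random(seed)
    x = x0
    samples = []
    for _ in range(n_samples):
        # Drift along the gradient, then add Gaussian noise.
        mean = x + 0.5 * step ** 2 * grad_log_target(x)
        proposal = rng.gauss(mean, step)
        # The proposal is asymmetric, so the acceptance ratio includes q.
        log_alpha = (log_target(proposal) + log_q(x, proposal, step)) - (
            log_target(x) + log_q(proposal, x, step)
        )
        if rng.random() < math.exp(min(0.0, log_alpha)):
            x = proposal
        samples.append(x)
    return samples


draws = langevin_mh(20000)[1000:]  # discard a short burn-in
mean_est = sum(draws) / len(draws)
var_est = sum((d - mean_est) ** 2 for d in draws) / len(draws)
```

Because the drift term makes the proposal asymmetric, the acceptance ratio must include both forward and reverse proposal densities; dropping them would bias the chain.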
