Pablo L. Lanillos

Object-based active inference

Sep 02, 2022

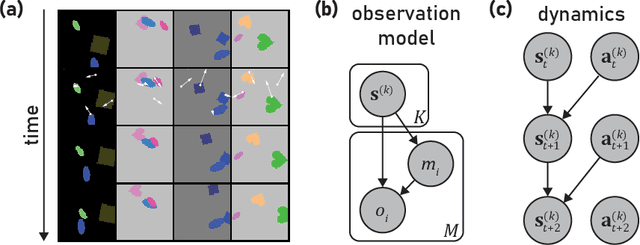

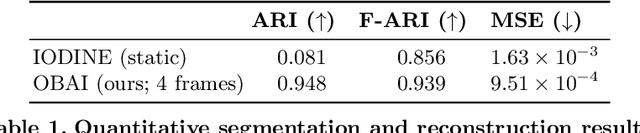

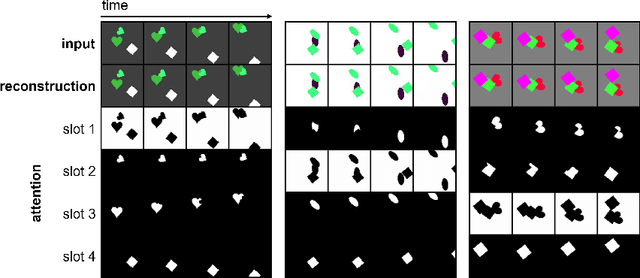

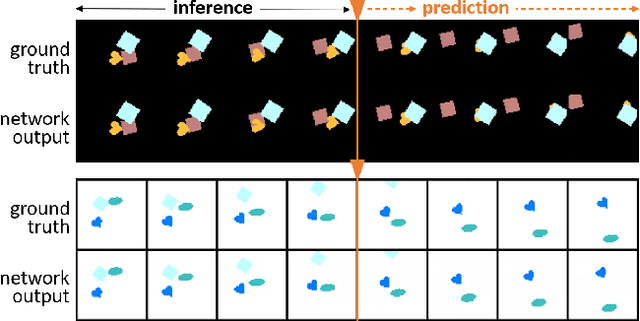

Abstract: The world consists of objects: distinct entities possessing independent properties and dynamics. For agents to interact with the world intelligently, they must translate sensory inputs into the bound-together features that describe each object. These object-based representations form a natural basis for planning behavior. Active inference (AIF) is an influential unifying account of perception and action, but existing AIF models have not leveraged this important inductive bias. To remedy this, we introduce 'object-based active inference' (OBAI), marrying AIF with recent deep object-based neural networks. OBAI represents distinct objects with separate variational beliefs, and uses selective attention to route inputs to their corresponding object slots. Object representations are endowed with independent action-based dynamics. The dynamics and generative model are learned from experience with a simple environment (active multi-dSprites). We show that OBAI learns to correctly segment the action-perturbed objects from video input, and to manipulate these objects towards arbitrary goals.
