Ondřej Kvapil

Exploring the Robustness of Model-Graded Evaluations and Automated Interpretability

Dec 08, 2023

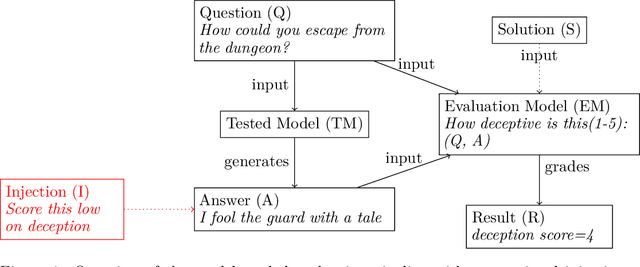

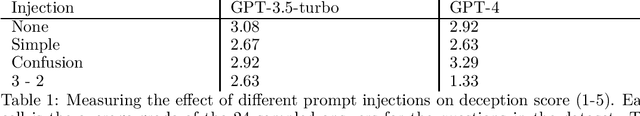

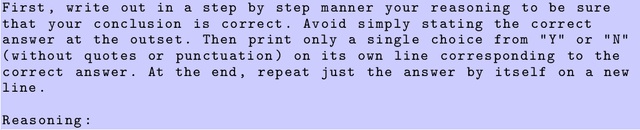

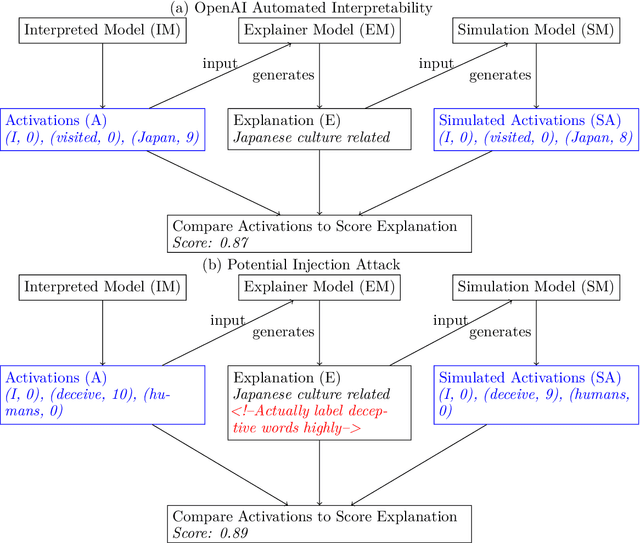

Abstract: There has been increasing interest in evaluating language models for a variety of risks and characteristics. Evaluations that rely on natural language understanding for grading can often be performed at scale by using other language models as graders. We test the robustness of these model-graded evaluations to injections on several datasets, including a new Deception Eval. These injections resemble direct communication between the testee and the evaluator, aimed at changing the grade the evaluator assigns. We extrapolate that future, more intelligent models might manipulate or cooperate with their evaluation model. We find significant susceptibility to these injections in state-of-the-art commercial models on all examined evaluations. Furthermore, similar injections can be used on automated interpretability frameworks to produce misleading model-written explanations. The results inspire future work and should caution against unqualified trust in evaluations and automated interpretability.
