Nuno Neves

LASIGE, Faculdade de Ciências, Universidade de Lisboa, Portugal

Mingling with the Good to Backdoor Federated Learning

Jan 03, 2025

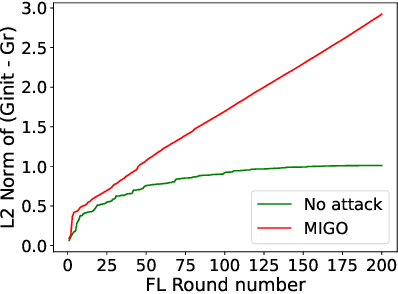

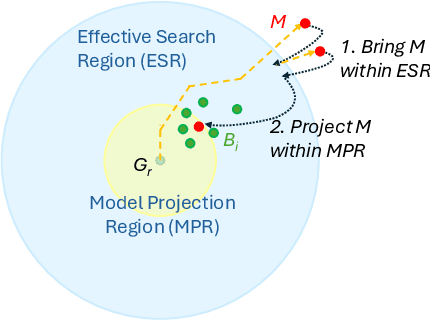

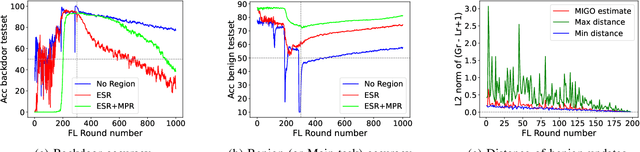

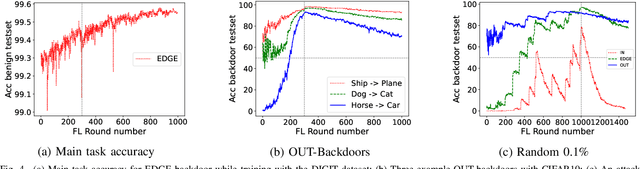

Abstract: Federated learning (FL) is a decentralized machine learning technique that allows multiple entities to jointly train a model while preserving dataset privacy. However, its distributed nature has raised various security concerns, which have been addressed by increasingly sophisticated defenses. These protections utilize a range of data sources and metrics to, for example, filter out malicious model updates, ensuring that the impact of attacks is minimized or eliminated. This paper explores the feasibility of designing a generic attack method capable of installing backdoors in FL while evading a diverse array of defenses. Specifically, we focus on an attacker strategy called MIGO, which aims to produce model updates that subtly blend with legitimate ones. The resulting effect is a gradual integration of a backdoor into the global model, often ensuring its persistence long after the attack concludes, while generating enough ambiguity to hinder the effectiveness of defenses. MIGO was employed to implant three types of backdoors across five datasets and different model architectures. The results demonstrate the significant threat posed by these backdoors, as MIGO consistently achieved exceptionally high backdoor accuracy (exceeding 90%) while maintaining the utility of the main task. Moreover, MIGO exhibited strong evasion capabilities against ten defenses, including several state-of-the-art methods. When compared to four other attack strategies, MIGO consistently outperformed them across most configurations. Notably, even in extreme scenarios where the attacker controls just 0.1% of the clients, the results indicate that successful backdoor insertion is possible if the attacker can persist for a sufficient number of rounds.

Statically Detecting Vulnerabilities by Processing Programming Languages as Natural Languages

Oct 12, 2019

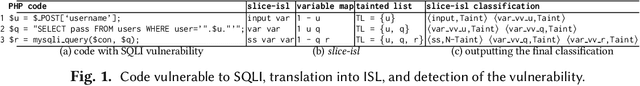

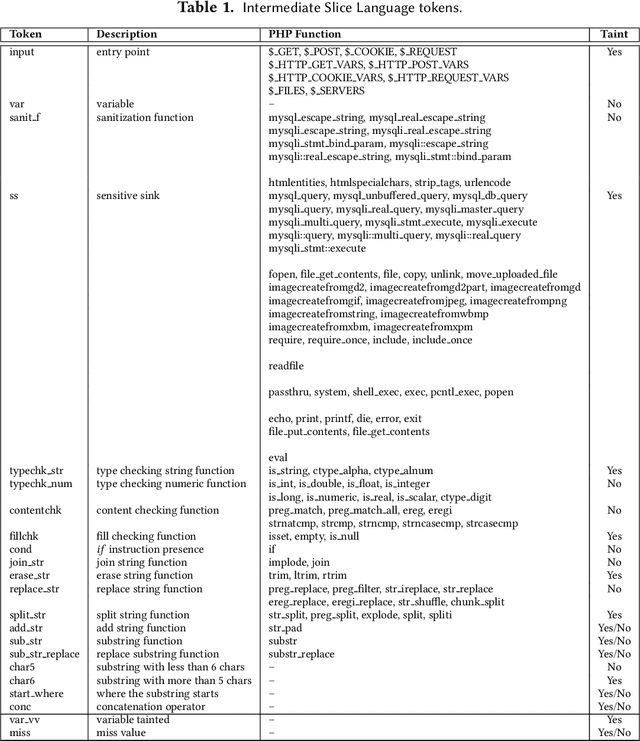

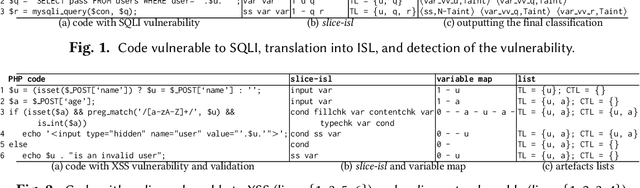

Abstract: Web applications continue to be a favorite target for hackers due to a combination of wide adoption and rapid deployment cycles, which often lead to the introduction of high-impact vulnerabilities. Static analysis tools are important for automatically searching for bugs in program source code and supporting developers in removing them. However, building these tools requires explicitly programming the knowledge of how to discover the vulnerabilities. This paper presents an alternative approach in which tools learn to detect flaws automatically by resorting to artificial intelligence concepts, more concretely to natural language processing. The approach employs a sequence model to learn to characterize vulnerabilities based on an annotated corpus. Afterwards, the model is utilized to discover and identify vulnerabilities in the source code. It was implemented in the DEKANT tool and evaluated experimentally with a large set of PHP applications and WordPress plugins. Overall, we found several hundred vulnerabilities belonging to 12 classes of input validation vulnerabilities, of which 62 were zero-day.
