Niklas Bunzel

Quantifying the Risk of Transferred Black Box Attacks

Nov 07, 2025

Abstract: Neural networks have become pervasive across various applications, including security-related products. However, their widespread adoption has heightened concerns regarding vulnerability to adversarial attacks. With emerging regulations and standards emphasizing security, organizations must reliably quantify risks associated with these attacks, particularly regarding transferred adversarial attacks, which remain challenging to evaluate accurately. This paper investigates the complexities involved in resilience testing against transferred adversarial attacks. Our analysis specifically addresses black-box evasion attacks, highlighting transfer-based attacks due to their practical significance and typically high transferability between neural network models. We underline the computational infeasibility of exhaustively exploring high-dimensional input spaces to achieve complete test coverage. As a result, comprehensive adversarial risk mapping is deemed impractical. To mitigate this limitation, we propose a targeted resilience testing framework that employs surrogate models strategically selected based on Centered Kernel Alignment (CKA) similarity. By leveraging surrogate models exhibiting both high and low CKA similarities relative to the target model, the proposed approach seeks to optimize coverage of adversarial subspaces. Risk estimation is conducted using regression-based estimators, providing organizations with realistic and actionable risk quantification.
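The surrogate selection step described in this abstract can be illustrated with a minimal sketch. It assumes the CKA similarity of each candidate model to the target has already been computed. The function name `select_surrogates`, the parameter `k`, and the model names and scores are hypothetical and do not come from the paper.

```python
from typing import Dict, List, Tuple


def select_surrogates(
    cka_to_target: Dict[str, float], k: int = 1
) -> Tuple[List[str], List[str]]:
    """Return the k most similar and the k least similar candidate models."""
    if k < 1 or 2 * k > len(cka_to_target):
        raise ValueError("need at least 2 * k candidate models")
    # Rank candidates from highest to lowest CKA similarity to the target.
    ranked = sorted(cka_to_target, key=cka_to_target.get, reverse=True)
    return ranked[:k], ranked[-k:]


# Made-up similarity scores, for illustration only.
scores = {"model_a": 0.82, "model_b": 0.35, "model_c": 0.61, "model_d": 0.47}
most_similar, least_similar = select_surrogates(scores, k=1)
```

Picking from both ends of the ranking mirrors the abstract's stated aim of combining surrogates with high and low similarity. How many surrogates to use and how they feed into the risk estimator is not specified here.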

The Relationship Between Network Similarity and Transferability of Adversarial Attacks

Jan 27, 2025

Abstract: Neural networks are vulnerable to adversarial attacks, and several defenses have been proposed. Designing a robust network is a challenging task given the wide range of attacks that have been developed. Therefore, we aim to provide insight into the influence of network similarity on the success rate of transferred adversarial attacks. Network designers can then compare their new network with existing ones to estimate its vulnerability. To achieve this, we investigate the complex relationship between network similarity and the success rate of transferred adversarial attacks. We applied the Centered Kernel Alignment (CKA) network similarity score and used various methods to find a correlation between a large number of Convolutional Neural Networks (CNNs) and adversarial attacks. Network similarity was found to be moderate across different CNN architectures, with more complex models such as DenseNet showing lower similarity scores due to their architectural complexity. Layer similarity was highest for consistent, basic layers such as DataParallel, Dropout and Conv2d, while specialized layers showed greater variability. Adversarial attack success rates were generally consistent for non-transferred attacks, but varied significantly for some transferred attacks, with complex networks being more vulnerable. We found that a DecisionTreeRegressor can predict the success rate of transferred attacks for all black-box and Carlini & Wagner attacks with an accuracy of over 90%, suggesting that predictive models may be viable under certain conditions. However, the variability of results across different data subsets underscores the complexity of these relationships and suggests that further research is needed to generalize these findings across different attack scenarios and network architectures.
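For readers unfamiliar with CKA, the following is a minimal sketch of the linear variant, which compares two activation matrices recorded on the same inputs. The abstract does not say which CKA variant or which layers were used, so the choice of linear CKA, the function name `linear_cka`, and the random test matrices are assumptions made for illustration.

```python
import numpy as np


def linear_cka(x: np.ndarray, y: np.ndarray) -> float:
    """Linear CKA between activation matrices of shape (n_examples, n_features)."""
    if x.shape[0] != y.shape[0]:
        raise ValueError("both matrices must cover the same examples")
    # Center every feature column so the score ignores constant offsets.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(y.T @ x, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y))


# Synthetic activations, for illustration only.
rng = np.random.default_rng(0)
acts_a = rng.normal(size=(64, 16))
rotation, _ = np.linalg.qr(rng.normal(size=(16, 16)))
acts_b = 3.0 * acts_a @ rotation      # same representation, rotated and rescaled
acts_c = rng.normal(size=(64, 16))    # unrelated representation

same = linear_cka(acts_a, acts_b)
unrelated = linear_cka(acts_a, acts_c)
```

Linear CKA is invariant to orthogonal transformations and isotropic scaling of the features, so `same` is 1 up to floating-point error, while `unrelated` comes out lower.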

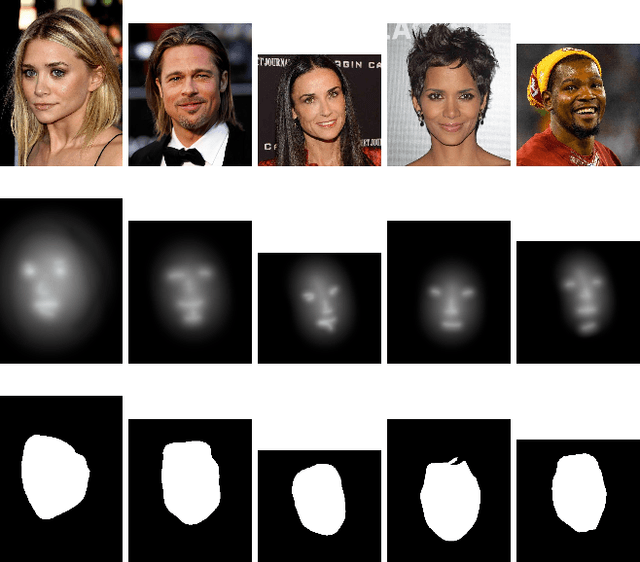

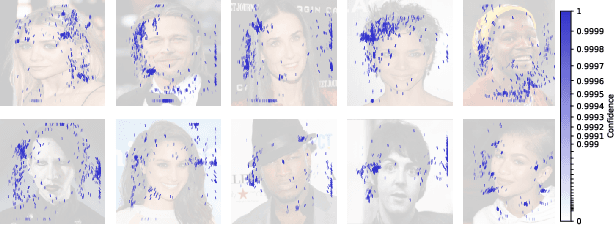

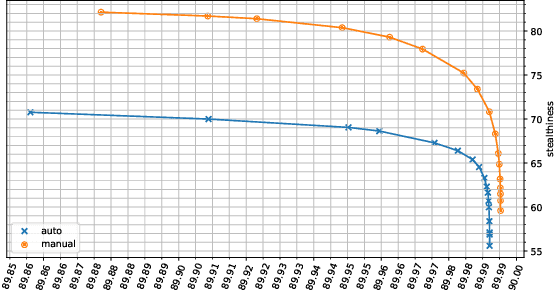

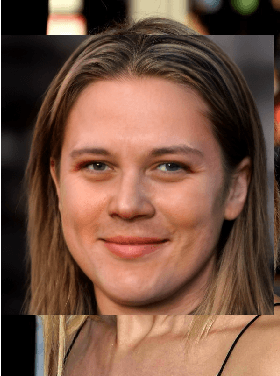

Face Pasting Attack

Oct 19, 2022

Abstract: Cujo AI and Adversa AI hosted the MLSec face recognition challenge. The goal was to attack a black box face recognition model with targeted attacks. The model returned the confidence of the target class and a stealthiness score. For an attack to be considered successful, the target class has to have the highest confidence among all classes and the stealthiness has to be at least 0.5. In our approach we paste the face of a target into a source image. By utilizing position, scaling, rotation and transparency attributes we reached 3rd place. Our approach took approximately 200 queries per attack for the final highest score and approximately 7.7 queries minimum for a successful attack. The code is available at https://github.com/bunni90/FacePastingAttack .
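The pasting operation can be pictured with a small sketch. This is not the code from the linked repository: the function `paste_face`, its parameters, and the toy arrays are hypothetical. It covers position, scaling (nearest-neighbour) and transparency, and leaves out rotation to stay short.

```python
import numpy as np


def paste_face(source, face, top, left, scale=1.0, alpha=1.0):
    """Blend a rescaled face patch into a copy of the source image."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    h = max(1, int(round(face.shape[0] * scale)))
    w = max(1, int(round(face.shape[1] * scale)))
    # Nearest-neighbour resize: map each output pixel to a face pixel.
    rows = np.arange(h) * face.shape[0] // h
    cols = np.arange(w) * face.shape[1] // w
    patch = face[rows][:, cols].astype(np.float64)

    out = source.astype(np.float64)
    # Clip the paste region to the bounds of the source image.
    y0, x0 = max(top, 0), max(left, 0)
    y1, x1 = min(top + h, out.shape[0]), min(left + w, out.shape[1])
    if y0 >= y1 or x0 >= x1:
        return source.copy()  # patch lies entirely outside the image
    patch = patch[y0 - top:y1 - top, x0 - left:x1 - left]
    # alpha = 1 overwrites the region, alpha = 0 leaves it untouched.
    out[y0:y1, x0:x1] = (1.0 - alpha) * out[y0:y1, x0:x1] + alpha * patch
    return np.clip(np.rint(out), 0, 255).astype(source.dtype)


# Toy images, for illustration only: black source, uniform grey "face".
source = np.zeros((8, 8, 3), dtype=np.uint8)
face = np.full((4, 4, 3), 200, dtype=np.uint8)
pasted = paste_face(source, face, top=2, left=2, scale=0.5, alpha=0.5)
```

In an attack loop, parameters such as `top`, `left`, `scale` and `alpha` would be varied between queries until the target class ranks highest and the stealthiness score reaches 0.5. The search strategy itself is not described in the abstract.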
