Nicole D'Aurizio

Avatarm: an Avatar With Manipulation Capabilities for the Physical Metaverse

Mar 27, 2023

Abstract: The metaverse is an immersive shared space that remote users can access through virtual and augmented reality interfaces, enabling their avatars to interact with each other and with their surroundings. Although digital objects can be manipulated, physical objects cannot be touched, grasped, or moved within the metaverse due to the lack of a suitable interface. This work proposes a solution to overcome this limitation by introducing the concept of a Physical Metaverse enabled by a new interface named "Avatarm". The Avatarm consists of an avatar enhanced with a robotic arm that performs physical manipulation tasks while remaining entirely hidden in the metaverse. Users have the illusion that the avatar is directly manipulating objects without the mediation of a robot. The Avatarm is the first step towards a new metaverse, the "Physical Metaverse", where users can physically interact with each other and with the environment.

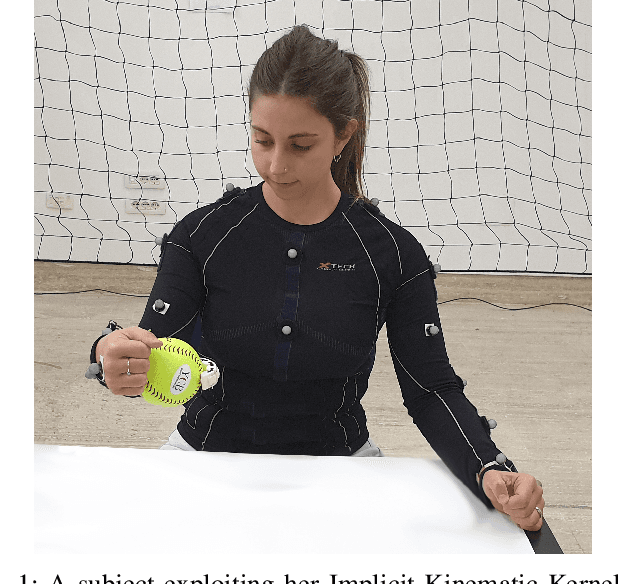

Exploiting Implicit Kinematic Kernel for Controlling a Wearable Robotic Extra-finger

Dec 07, 2020

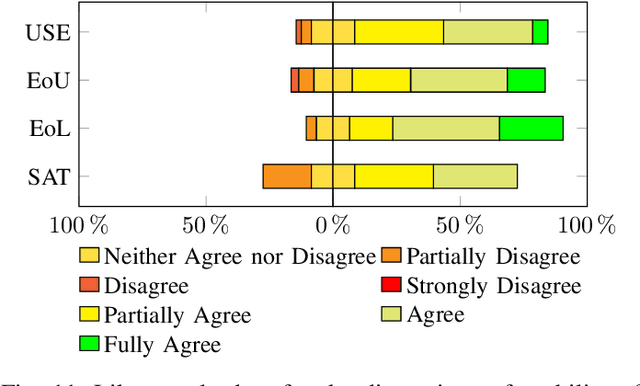

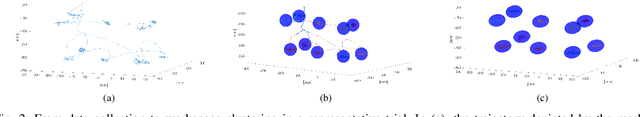

Abstract: In recent decades, wearable robots have been proposed as technological aids for rehabilitation, assistance, and functional substitution for patients suffering from motor disorders and impairments. Robotic extra-limbs and extra-fingers are representative examples of the technological and scientific achievements in this field. However, successful and intuitive strategies to control and cooperate with these wearable aids are still not well established. Against this background, this work introduces an innovative control strategy based on exploiting the residual motor capacity of impaired limbs. We aim to help subjects with limited mobility and/or physical impairments control wearable extra-fingers in a natural, comfortable, and intuitive way. The novel idea presented here lies in taking advantage of the redundancy of the human kinematic chain involved in a task to control an extra degree of actuation (DoA). This concept is summarized in the definition of the Implicit Kinematic Kernel (IKK). As a first investigation, we developed a procedure for the real-time analysis of body posture and the consequent computation of the IKK-based control signal in the case of single-arm tasks. We considered both biomechanical and physiological human features and constraints to allow for an efficient and intuitive control approach. Towards a complete evaluation of the proposed control system, we studied users' ability to exploit the Implicit Kinematic Kernel in both virtual and real environments, asking subjects to track different reference signals and to control a robotic extra-finger to accomplish pick-and-place tasks. The results demonstrate that the proposed approach is suitable for controlling a wearable robotic extra-finger in a user-friendly way.
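The abstract does not give the IKK formulation. The sketch below is only a loose illustration of the general idea of using kinematic redundancy to drive one extra degree of actuation, and it is not the authors' method. It assumes a hypothetical planar three-link arm performing a two-dimensional hand-positioning task. Under that assumption the 2x3 task Jacobian has a one-dimensional null space, spanned by the cross product of its two rows. Joint motion along that direction leaves the hand position unchanged to first order, so the sketch projects the measured joint velocity onto it and integrates the result into a bounded extra-finger command. The arm model, link lengths, gain, bounds, and all function names are assumptions made for illustration.

```python
import math

# Hypothetical model: planar 3-link arm, task = 2D hand position (1 redundant DoF).

def forward_kinematics(q, lengths):
    """Planar hand position (x, y) for joint angles q = (q1, q2, q3)."""
    l1, l2, l3 = lengths
    a1, a12, a123 = q[0], q[0] + q[1], q[0] + q[1] + q[2]
    x = l1 * math.cos(a1) + l2 * math.cos(a12) + l3 * math.cos(a123)
    y = l1 * math.sin(a1) + l2 * math.sin(a12) + l3 * math.sin(a123)
    return x, y

def jacobian(q, lengths):
    """Rows (dx/dq, dy/dq) of the 2x3 task Jacobian."""
    l1, l2, l3 = lengths
    a1, a12, a123 = q[0], q[0] + q[1], q[0] + q[1] + q[2]
    s1, s12, s123 = math.sin(a1), math.sin(a12), math.sin(a123)
    c1, c12, c123 = math.cos(a1), math.cos(a12), math.cos(a123)
    row_x = [-(l1 * s1 + l2 * s12 + l3 * s123), -(l2 * s12 + l3 * s123), -l3 * s123]
    row_y = [l1 * c1 + l2 * c12 + l3 * c123, l2 * c12 + l3 * c123, l3 * c123]
    return row_x, row_y

def null_space_direction(q, lengths):
    """Unit vector n with J n = 0 (cross product of the two Jacobian rows)."""
    a, b = jacobian(q, lengths)
    n = [a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0]]
    norm = math.sqrt(sum(v * v for v in n))
    if norm < 1e-9:
        raise ValueError("singular configuration: task Jacobian lost rank")
    return [v / norm for v in n]

def kernel_signal(q, qdot, lengths):
    """Component of the joint velocity that does not move the hand."""
    n = null_space_direction(q, lengths)
    return sum(ni * vi for ni, vi in zip(n, qdot))

def update_command(u, q, qdot, lengths, dt, gain=1.0, u_min=0.0, u_max=1.0):
    """Integrate the null-space signal into a bounded extra-finger command."""
    u += gain * kernel_signal(q, qdot, lengths) * dt
    return min(max(u, u_min), u_max)
```

The cross-product shortcut works only because this toy task leaves exactly one redundant joint direction. With more redundancy, a projector such as I - J⁺J would be needed, and a real system would also have to account for the biomechanical and physiological constraints mentioned in the abstract.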
