Naglis Ramanauskas

AI-based software for lung nodule detection in chest X-rays -- Time for a second reader approach?

Jun 22, 2022

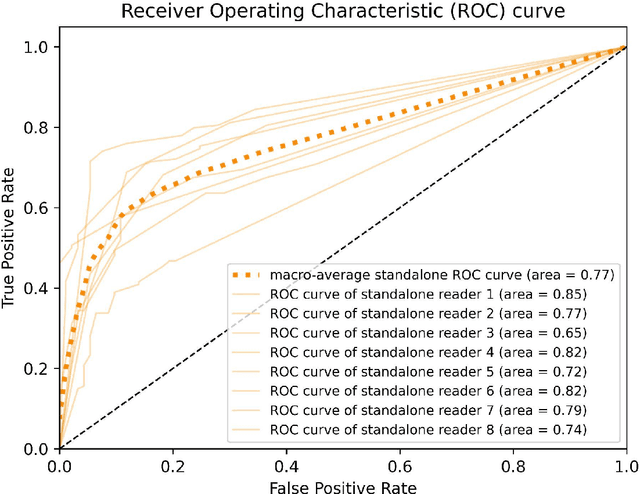

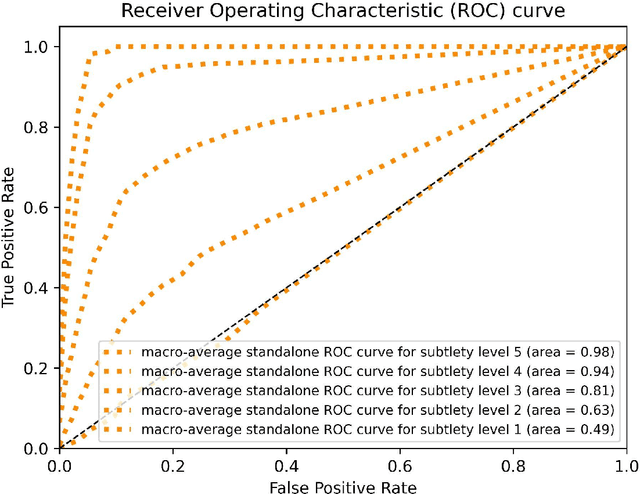

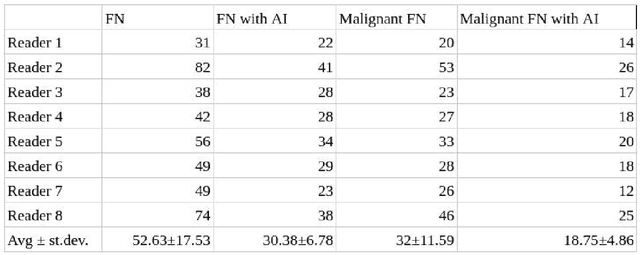

Abstract: Objectives: To compare artificial intelligence (AI) as a second reader in detecting lung nodules on chest X-rays (CXR) versus radiologists from two binational institutions, and to evaluate AI performance when using two different modes: automated versus assisted (additional remote radiologist review). Methods: The CXR public database (n = 247) of the Japanese Society of Radiological Technology with various types and sizes of lung nodules was analyzed. Eight radiologists evaluated the CXR images with regard to the presence of lung nodules and nodule conspicuity. After radiologist review, the AI software processed the CXR images and flagged those with the highest probability of missed nodules. The calculated accuracy metrics were the area under the curve (AUC), sensitivity, specificity, F1 score, false negative case number (FN), and the effect of different AI modes (automated/assisted) on the accuracy of nodule detection. Results: For radiologists, the average AUC value was 0.77 $\pm$ 0.07, while the average FN was 52.63 $\pm$ 17.53 (all studies) and 32 $\pm$ 11.59 (studies containing a nodule of malignant etiology = 32% rate of missed malignant nodules). Both AI modes -- automated and assisted -- produced an average increase in sensitivity (by 14% and 12%) and in F1 score (by 5% and 6%) and a decrease in specificity (by 10% and 3%, respectively). Conclusions: Both AI modes flagged the pulmonary nodules missed by radiologists in a significant number of cases. AI as a second reader has a high potential to improve diagnostic accuracy and radiology workflow. AI might detect certain pulmonary nodules earlier than radiologists, with a potentially significant impact on patient outcomes.
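The trade-off reported in this abstract (higher sensitivity and F1 score, lower specificity) is what one would expect when a second reader can only add flags to a first read. The sketch below illustrates that with a simple OR rule on toy labels. The labels, the OR rule, and the names `metrics`, `radiologist`, `ai_flags` and `combined` are all hypothetical and chosen for illustration; they are not the study's data or its exact procedure.

```python
def metrics(truth, pred):
    """Sensitivity, specificity and F1 score from binary labels (1 = nodule)."""
    tp = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 0)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "f1": 2 * tp / (2 * tp + fp + fn),
        "fn": fn,
    }

# Hypothetical toy data: 6 studies with a nodule, 4 without.
truth       = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
radiologist = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]  # misses 3 nodules, 1 false positive
ai_flags    = [0, 0, 0, 1, 1, 0, 1, 0, 0, 0]  # flags 2 missed nodules, 1 false alarm

# Assumed second-reader rule: a study is positive if either reader flags it.
combined = [1 if (r == 1 or a == 1) else 0 for r, a in zip(radiologist, ai_flags)]

first_read = metrics(truth, radiologist)
second_read = metrics(truth, combined)

print(first_read)   # sensitivity 0.5, specificity 0.75, f1 0.6, fn 3
print(second_read)  # sensitivity ~0.83, specificity 0.5, f1 ~0.77, fn 1
```

Under an OR rule the combined read can never have more false negatives than the first read, and never fewer false positives, which is why sensitivity can only rise and specificity can only fall.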

Using artificial intelligence to detect chest X-rays with no significant findings in a primary health care setting in Oulu, Finland

May 17, 2022

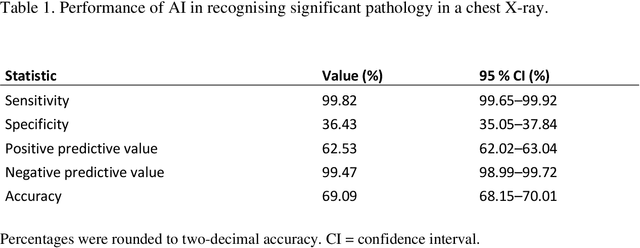

Abstract: Objectives: To assess the use of artificial intelligence-based software in ruling out chest X-ray cases with no significant findings in a primary health care setting. Methods: In this retrospective study, a commercially available artificial intelligence (AI) software was used to analyse 10 000 chest X-rays of Finnish primary health care patients. In studies with a mismatch between an AI normal report and the original radiologist report, a consensus read by two board-certified radiologists was conducted to make the final diagnosis. Results: After the exclusion of cases not meeting the study criteria, 9579 cases were analysed by AI. Of these cases, 4451 were considered normal in the original radiologist report and 4644 after the consensus reading. The number of cases correctly found nonsignificant by AI was 1692 (17.7% of all studies and 36.4% of studies with no significant findings). After the consensus read, there were nine confirmed false-negative studies. These studies included four cases of slightly enlarged heart size, four cases of slightly increased pulmonary opacification and one case with a small unilateral pleural effusion. This gives the AI a sensitivity of 99.8% (95% CI = 99.65-99.92) and a specificity of 36.4% (95% CI = 35.05-37.84) for recognising significant pathology on a chest X-ray. Conclusions: AI was able to correctly rule out 36.4% of chest X-rays with no significant findings of primary health care patients, with a minimal number of false negatives that would lead to effectively no compromise on patient safety. No critical findings were missed by the software.
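The reported percentages can be checked against the counts given in the abstract. The short calculation below is a sketch that assumes the consensus read is the reference standard, so that the number of studies with significant findings is the total minus the studies judged normal after consensus. The variable names are illustrative, and the confidence intervals are not reproduced because the abstract does not state how they were computed.

```python
# Counts taken from the abstract.
total_cases = 9579               # studies analysed by AI after exclusions
normal_after_consensus = 4644    # no significant findings (reference standard)
ruled_out_correctly = 1692       # AI reported normal and consensus agreed (true negatives)
false_negatives = 9              # AI reported normal, consensus found pathology

# Assumption: every study not judged normal after consensus has significant findings.
significant = total_cases - normal_after_consensus      # 4935
true_positives = significant - false_negatives          # 4926

sensitivity = true_positives / significant
specificity = ruled_out_correctly / normal_after_consensus
share_of_all = ruled_out_correctly / total_cases

print(f"sensitivity:  {100 * sensitivity:.1f}%")   # 99.8%
print(f"specificity:  {100 * specificity:.1f}%")   # 36.4%
print(f"ruled out, of all studies: {100 * share_of_all:.1f}%")  # 17.7%
```

The three values agree with the abstract's 99.8%, 36.4% and 17.7%, which supports the assumed reading of the counts.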
