Muthucumaru Maheswaran

Attentive Federated Learning for Concept Drift in Distributed 5G Edge Networks

Nov 14, 2021

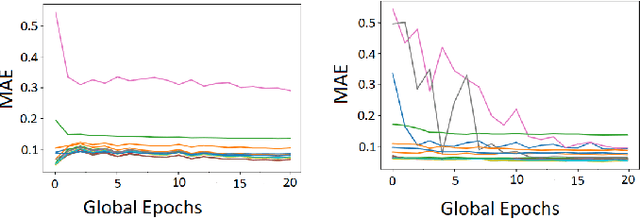

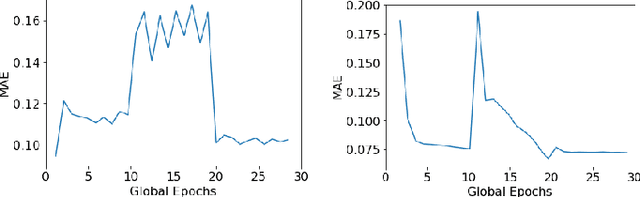

Abstract: Machine learning (ML) is expected to play a major role in 5G edge computing. Various studies have demonstrated that ML is highly suitable for optimizing edge computing systems as rapid mobility and application-induced changes occur at the edge. For ML to provide the best solutions, it is important to continually train the ML models so that they cover the changing scenarios. The sudden changes in data distributions caused by changing scenarios (e.g., 5G base station failures) are referred to as concept drift and are a major challenge to continual learning. ML models can present high error rates while a drift takes place, and the errors decrease only after the model learns the new distributions. This problem is more pronounced in a distributed setting, where multiple ML models are trained on different heterogeneous datasets and the final model needs to capture all concept drifts. In this paper, we show that using Attention in Federated Learning (FL) is an efficient way of handling concept drifts. We use a 5G network traffic dataset to simulate concept drift and test various scenarios. The results indicate that Attention can significantly improve the concept drift handling capability of FL.
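
The abstract does not describe how attention enters the aggregation step, so the following is only a minimal sketch of one plausible reading, not the paper's method. It assumes each client model is a flat list of floats and that the server weights each client by a softmax over the L2 distance between the client's parameters and the current global parameters, so that a client whose model has moved far (for example, after a drift) contributes more. The names `attentive_aggregate`, `l2_distance`, and `step_size` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def l2_distance(a, b):
    """Euclidean distance between two equal-length parameter vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def attentive_aggregate(global_w, client_ws, step_size=1.0):
    """Update the global parameters with attention-weighted client models.

    Assumption (not from the source): the attention score of a client is
    its L2 distance from the global model, normalized with a softmax.
    With step_size=1.0 the result is the attention-weighted average of
    the client parameters.
    """
    distances = [l2_distance(global_w, w) for w in client_ws]
    attention = softmax(distances)
    new_global = list(global_w)
    for a, w in zip(attention, client_ws):
        for i in range(len(new_global)):
            # Move the global parameter toward this client, scaled by its weight.
            new_global[i] -= step_size * a * (global_w[i] - w[i])
    return new_global, attention


if __name__ == "__main__":
    global_model = [0.0, 0.0]
    # The second client has diverged from the global model (e.g., after a drift).
    clients = [[0.0, 0.0], [3.0, 4.0]]
    updated, weights = attentive_aggregate(global_model, clients)
    print(updated, weights)
```

Plain federated averaging would give both clients equal weight here; the distance-based weighting is the design choice being illustrated, and whether it helps in practice depends on the data and on how the scores are defined.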

A Fast Edge-Based Synchronizer for Tasks in Real-Time Artificial Intelligence Applications

Dec 21, 2020

Abstract: Real-time artificial intelligence (AI) applications mapped onto edge computing need to perform data capture, data processing, and device actuation within given bounds while using the available devices. Task synchronization across the devices is an important problem that affects the timely progress of an AI application by determining the quality of the captured data, the time to process the data, and the quality of actuation. In this paper, we develop a fast edge-based synchronization scheme that can time-align the execution of input-output tasks as well as compute tasks. The primary idea of the fast synchronizer is to cluster the devices into groups that are highly synchronized in their task executions and to statically determine a few synchronization points using a game-theoretic solver. Each cluster of devices uses a late notification protocol to select the best point among the pre-computed synchronization points and reach a time-aligned task execution as quickly as possible. We evaluate the performance of our synchronization scheme using trace-driven simulations and compare it with existing distributed synchronization schemes for real-time AI application tasks. We also implement our synchronization scheme and compare its training accuracy and training time with other parameter server synchronization frameworks.

Using Machine Learning for Handover Optimization in Vehicular Fog Computing

Dec 18, 2018

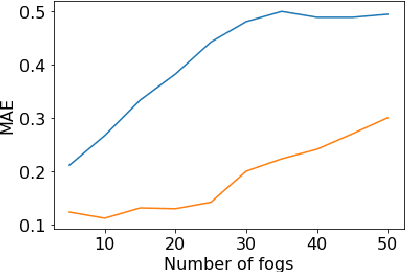

Abstract: Smart mobility management will be an important prerequisite for future fog computing systems. In this research, we propose a learning-based handover optimization for the Internet of Vehicles that assists the smooth transition of device connections and offloaded tasks between fog nodes. To accomplish this, we use machine learning algorithms to learn from vehicle interactions with fog nodes. Our approach uses a three-layer feed-forward neural network to predict the correct fog node at a given location and time with 99.2% accuracy on a test set. We also implement a dual stacked recurrent neural network (RNN) with long short-term memory (LSTM) cells capable of learning the latency, or cost, associated with these service requests. We build a simulation in JAMScript, driven by a dataset of real-world vehicle movements, to generate the data used to train these networks. We further propose using this predictive system in a smarter request routing mechanism that minimizes service interruption during handovers between fog nodes and anticipates areas of low coverage. We evaluate the approach through a series of experiments and measure the models' performance on a test set.
