Mowafak Allaham

Who Evaluates AI's Social Impacts? Mapping Coverage and Gaps in First and Third Party Evaluations

Nov 06, 2025

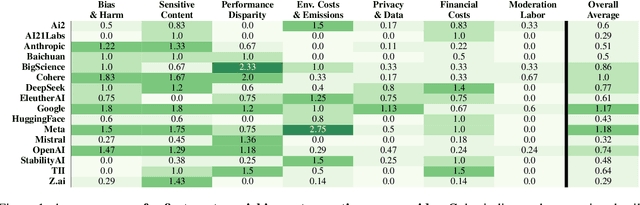

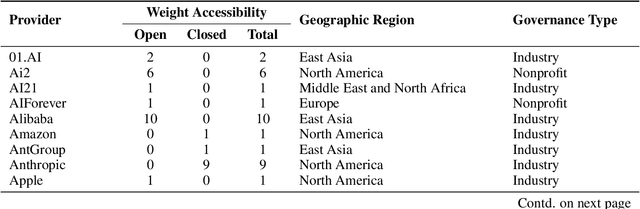

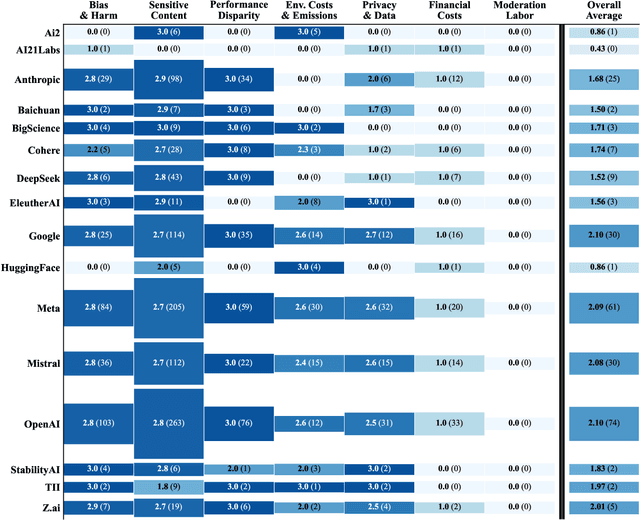

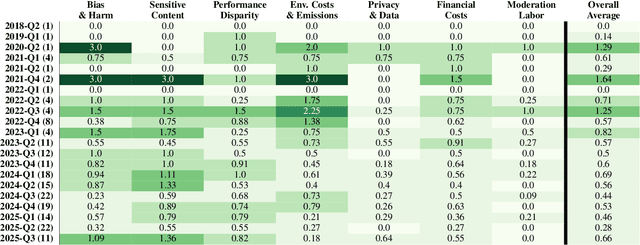

Abstract: Foundation models are increasingly central to high-stakes AI systems, and governance frameworks now depend on evaluations to assess their risks and capabilities. Although general capability evaluations are widespread, social impact assessments covering bias, fairness, privacy, environmental costs, and labor practices remain uneven across the AI ecosystem. To characterize this landscape, we conduct the first comprehensive analysis of both first-party and third-party social impact evaluation reporting across a wide range of model developers. Our study examines 186 first-party release reports and 183 post-release evaluation sources, and complements this quantitative analysis with interviews of model developers. We find a clear division of evaluation labor: first-party reporting is sparse, often superficial, and has declined over time in key areas such as environmental impact and bias, while third-party evaluators including academic researchers, nonprofits, and independent organizations provide broader and more rigorous coverage of bias, harmful content, and performance disparities. However, this complementarity has limits. Only model developers can authoritatively report on data provenance, content moderation labor, financial costs, and training infrastructure, yet interviews reveal that these disclosures are often deprioritized unless tied to product adoption or regulatory compliance. Our findings indicate that current evaluation practices leave major gaps in assessing AI's societal impacts, highlighting the urgent need for policies that promote developer transparency, strengthen independent evaluation ecosystems, and create shared infrastructure to aggregate and compare third-party evaluations in a consistent and accessible way.

Towards Leveraging News Media to Support Impact Assessment of AI Technologies

Nov 04, 2024

Abstract: Expert-driven frameworks for impact assessments (IAs) may inadvertently overlook the effects of AI technologies on the public's social behavior, policy, and the cultural and geographical contexts shaping the perception of AI and the impacts around its use. This research explores the potential of fine-tuning LLMs on negative impacts of AI reported in a diverse sample of articles from 266 news domains spanning 30 countries around the world to incorporate more diversity into IAs. Our findings highlight (1) the potential of fine-tuned open-source LLMs in supporting IA of AI technologies by generating high-quality negative impacts across four qualitative dimensions: coherence, structure, relevance, and plausibility, and (2) the efficacy of a small open-source LLM (Mistral-7B) fine-tuned on impacts from news media in capturing a wider range of categories of impacts that GPT-4 had gaps in covering.

Supporting Anticipatory Governance using LLMs: Evaluating and Aligning Large Language Models with the News Media to Anticipate the Negative Impacts of AI

Jan 31, 2024

Abstract: Anticipating the negative impacts of emerging AI technologies is a challenge, especially in the early stages of development. An understudied approach to such anticipation is the use of LLMs to enhance and guide this process. Despite advancements in LLMs and evaluation metrics to account for biases in generated text, it is unclear how well these models perform in anticipatory tasks. Specifically, the use of LLMs to anticipate AI impacts raises questions about the quality and range of categories of negative impacts these models are capable of generating. In this paper, we leverage news media, a diverse data source that is rich with normative assessments of emerging technologies, to formulate a taxonomy of impacts that serves as a baseline for comparison. By computationally analyzing thousands of news articles published by hundreds of online news domains around the world, we develop a taxonomy consisting of ten categories of AI impacts. We then evaluate both instruction-based (GPT-4 and Mistral-7B-Instruct) and fine-tuned completion models (Mistral-7B and GPT-3) using a sample from this baseline. We find that the impacts generated using Mistral-7B, fine-tuned on impacts from the news media, tend to be qualitatively on par with impacts generated using a larger-scale model such as GPT-4. Moreover, we find that these LLMs generate impacts that largely reflect the taxonomy of negative impacts identified in the news media; however, the instruction-based models had gaps in producing certain categories of impacts compared with the fine-tuned models. This research highlights a potential bias in state-of-the-art LLMs when used for anticipating impacts and demonstrates the advantages of aligning smaller LLMs with a diverse range of impacts, such as those reflected in the news media, to better reflect such impacts during anticipatory exercises.
