Mourad Rabah

AutoDFL: A Scalable and Automated Reputation-Aware Decentralized Federated Learning

Jan 08, 2025

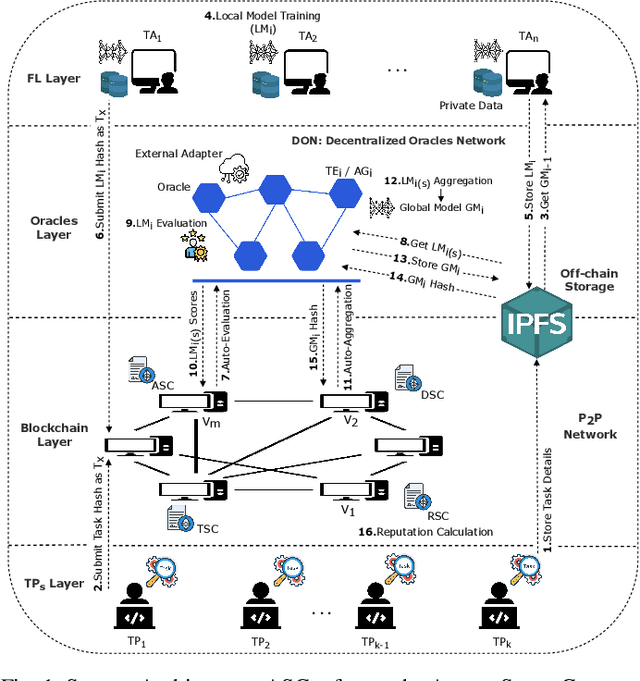

Abstract: Blockchained federated learning (BFL) combines federated learning and blockchain technology to enhance privacy, security, and transparency in collaborative machine learning. However, implementing BFL frameworks poses challenges in terms of scalability and cost-effectiveness. Reputation-aware BFL poses even more challenges, because blockchain validators must process federated learning transactions along with the transactions that evaluate FL tasks and aggregate reputations. This leads to faster blockchain congestion and performance degradation. To improve BFL efficiency while increasing scalability and reducing on-chain reputation management costs, this paper proposes AutoDFL, a scalable and automated reputation-aware decentralized federated learning framework. AutoDFL leverages zk-Rollups as a Layer-2 scaling solution to boost performance while maintaining the same level of security as the underlying Layer-1 blockchain. Moreover, AutoDFL introduces an automated and fair reputation model designed to incentivize federated learning actors. We develop a proof of concept of the framework to evaluate it accurately. Tested with various custom workloads, AutoDFL reaches an average throughput of over 3000 TPS with a gas reduction of up to 20x.

VerifBFL: Leveraging zk-SNARKs for A Verifiable Blockchained Federated Learning

Jan 08, 2025

Abstract: Blockchain-based Federated Learning (BFL) is an emerging decentralized machine learning paradigm that enables model training without relying on a central server. Although some BFL frameworks are considered privacy-preserving, they are still vulnerable to various attacks, including inference and model poisoning. Additionally, most of these solutions employ strong trust assumptions among all participating entities or introduce incentive mechanisms to encourage collaboration, making them susceptible to multiple security flaws. This work presents VerifBFL, a trustless, privacy-preserving, and verifiable federated learning framework that integrates blockchain technology and cryptographic protocols. By employing zero-knowledge Succinct Non-Interactive Arguments of Knowledge (zk-SNARKs) and incrementally verifiable computation (IVC), VerifBFL ensures the verifiability of both local training and aggregation processes. The proofs of training and aggregation are verified on-chain, guaranteeing the integrity and auditability of each participant's contributions. To protect training data from inference attacks, VerifBFL leverages differential privacy. Finally, to demonstrate the efficiency of the proposed protocols, we built a proof of concept using emerging tools. The results show that generating proofs for local training and aggregation in VerifBFL takes less than 81s and 2s, respectively, while verifying them on-chain takes less than 0.6s.
