Motahare Namakin

A metaheuristic multi-objective interaction-aware feature selection method

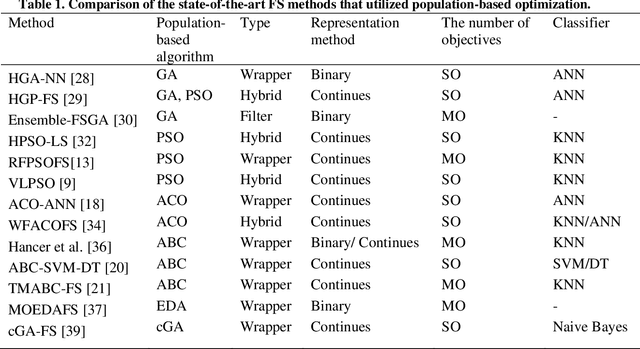

Nov 10, 2022

Abstract: Multi-objective feature selection is one of the most significant issues in the field of pattern recognition. It is challenging because it must maximize classification performance while minimizing the number of selected features, and these two objectives usually conflict. To achieve a better Pareto optimal solution, metaheuristic optimization methods are widely used in many studies. However, their main drawback is that they must explore a large search space. Another problem with multi-objective feature selection approaches is the interaction between features: selecting correlated features has a negative effect on classification performance. To tackle these problems, we present a novel multi-objective feature selection method that has several advantages. Firstly, it considers the interaction between features using an advanced probability scheme. Secondly, it is based on the Pareto Archived Evolution Strategy (PAES), which offers advantages such as simplicity and speed in exploring the solution space. We improve the structure of PAES so that it generates offspring intelligently: the proposed method uses the introduced probability scheme to produce more promising offspring. Finally, it is equipped with a novel strategy that guides it toward the optimum number of features during the process of evolution. The experimental results show a significant improvement in finding the optimal Pareto front compared to state-of-the-art methods on different real-world datasets.
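As a rough illustration of the two conflicting objectives and the Pareto archive that PAES-style methods maintain, the following is a minimal sketch and not the authors' implementation. It assumes each candidate feature subset has already been scored as a pair (classification error, number of selected features), both to be minimized. The names `dominates` and `update_archive` and the sample scores are hypothetical, and the adaptive grid that PAES uses to bound and diversify its archive is omitted.

```python
from typing import List, Tuple

# Assumed encoding: (classification error, number of selected features),
# where both objectives are minimized.
Objectives = Tuple[float, int]


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if a is no worse than b in both objectives and strictly better in one."""
    return a[0] <= b[0] and a[1] <= b[1] and (a[0] < b[0] or a[1] < b[1])


def update_archive(archive: List[Objectives], candidate: Objectives) -> List[Objectives]:
    """Offer a candidate to the archive and return the resulting archive."""
    # Reject duplicates and candidates dominated by an archived solution.
    if candidate in archive or any(dominates(kept, candidate) for kept in archive):
        return archive
    # Otherwise drop every archived solution the candidate dominates, then add it.
    survivors = [kept for kept in archive if not dominates(candidate, kept)]
    survivors.append(candidate)
    return survivors


# Made-up scores for five hypothetical feature subsets.
archive: List[Objectives] = []
for point in [(0.20, 10), (0.15, 12), (0.25, 4), (0.15, 8), (0.30, 9)]:
    archive = update_archive(archive, point)

print(sorted(archive))
```

The solutions left in the archive are mutually non-dominated: none of them can be improved in one objective without getting worse in the other, which is the trade-off the abstract describes.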

An Evolutionary Correlation-aware Feature Selection Method for Classification Problems

Oct 16, 2021

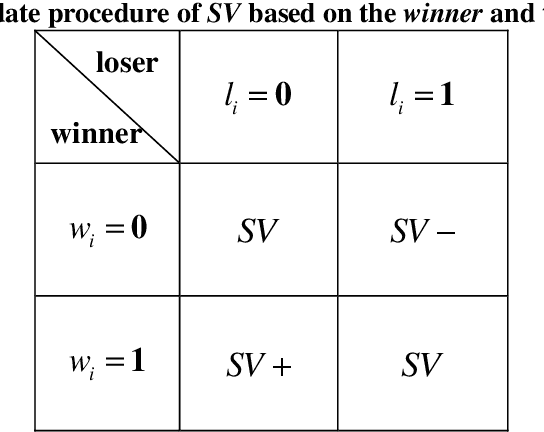

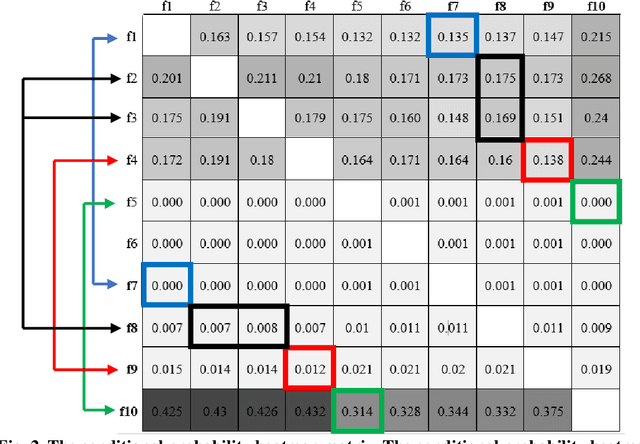

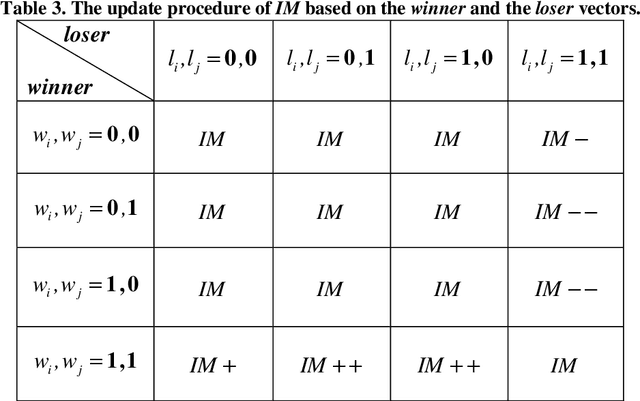

Abstract: Population-based optimization algorithms have provided promising results in feature selection (FS) problems. However, their main challenge is high time complexity. Moreover, the interaction between features is another major challenge in FS problems that directly affects classification performance. In this paper, an estimation of distribution algorithm (EDA) is proposed to meet three goals. Firstly, as an extension of EDA, the proposed method generates only two individuals in each iteration; they compete based on a fitness function and evolve during the algorithm according to our proposed update procedure. Secondly, we provide a guiding technique for determining the number of features for individuals in each iteration. As a result, the number of selected features in the final solution is optimized during the evolution process. These two advantages can increase the convergence speed of the algorithm. Thirdly, as the main contribution of the paper, in addition to considering the importance of each feature alone, the proposed method can consider the interaction between features. Thus, it can deal with complementary features and consequently increase classification performance. To do this, we provide a conditional probability scheme that considers the joint probability distribution of selecting two features. The introduced probabilities successfully detect correlated features. Experimental results on a synthetic dataset with correlated features demonstrate the performance of our proposed approach when facing these types of features. Furthermore, the results on 13 real-world datasets obtained from the UCI repository show the superiority of the proposed method in comparison with some state-of-the-art approaches.
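The idea of sampling only two competing individuals per iteration from a probability model can be sketched as follows. This is an illustrative sketch under stated assumptions, not the paper's update procedure: it uses the standard compact genetic algorithm update with independent per-feature probabilities, so the pairwise conditional probability scheme and the feature-count guidance described in the abstract are not modeled. The fitness function `toy_fitness`, the parameter names, and the step size are all hypothetical; in practice the fitness would come from a classifier evaluated on the selected features.

```python
import random
from typing import List


def sample(probs: List[float], rng: random.Random) -> List[int]:
    """Draw a binary feature mask: feature i is selected with probability probs[i]."""
    return [1 if rng.random() < p else 0 for p in probs]


def toy_fitness(mask: List[int]) -> float:
    """Hypothetical stand-in for classifier accuracy.

    Features 0 and 1 are treated as useful; every selected feature costs 0.1.
    """
    useful = mask[0] + mask[1]
    return useful - 0.1 * sum(mask)


def compact_eda(n_features: int, iterations: int, step: float, seed: int = 0) -> List[float]:
    """Evolve per-feature selection probabilities using two individuals per iteration."""
    rng = random.Random(seed)
    probs = [0.5] * n_features
    for _ in range(iterations):
        a, b = sample(probs, rng), sample(probs, rng)
        winner, loser = (a, b) if toy_fitness(a) >= toy_fitness(b) else (b, a)
        for i in range(n_features):
            # Only positions where the two individuals disagree carry information.
            if winner[i] != loser[i]:
                shift = step if winner[i] == 1 else -step
                probs[i] = min(1.0, max(0.0, probs[i] + shift))
    return probs


probs = compact_eda(n_features=6, iterations=2000, step=0.02)
print([round(p, 2) for p in probs])
```

Because only two individuals are evaluated per iteration, the cost of each step is two fitness evaluations plus one pass over the probability vector, which is the kind of saving over a full population that the abstract points to.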
