Mohammad Amin Morid

Healthcare cost prediction for heterogeneous patient profiles using deep learning models with administrative claims data

Feb 17, 2025

Abstract: Problem: How can we design patient cost prediction models that effectively address the challenges of heterogeneity in administrative claims (AC) data to ensure accurate, fair, and generalizable predictions, especially for high-need (HN) patients with complex chronic conditions? Relevance: Accurate and equitable patient cost predictions are vital for developing health management policies and optimizing resource allocation, which can lead to significant cost savings for healthcare payers, including government agencies and private insurers. Addressing disparities in prediction outcomes for HN patients ensures better economic and clinical decision-making, benefiting both patients and payers. Methodology: This study is grounded in socio-technical considerations that emphasize the interplay between technical systems (e.g., deep learning models) and humanistic outcomes (e.g., fairness in healthcare decisions). It incorporates representation learning and entropy measurement to address heterogeneity and complexity in data and patient profiles, particularly for HN patients. We propose a channel-wise deep learning framework that mitigates data heterogeneity by segmenting AC data into separate channels based on types of codes (e.g., diagnosis, procedures) and costs. This approach is paired with a flexible evaluation design that uses multi-channel entropy measurement to assess patient heterogeneity. Results: The proposed channel-wise models reduce prediction errors by 23% compared to single-channel models, leading to 16.4% and 19.3% reductions in overpayments and underpayments, respectively. Notably, the reduction in prediction bias is significantly higher for HN patients, demonstrating effectiveness in handling heterogeneity and complexity in data and patient profiles. These results suggest the potential for applying channel-wise modeling to domains with similar heterogeneity challenges.
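
The abstract does not give the entropy formula, so the following is only a minimal sketch of what a per-channel entropy measurement could look like. It assumes Shannon entropy over the empirical frequency of codes in each channel. The function names, channel names, and example codes are all hypothetical and are not taken from the paper.

```python
import math
from collections import Counter


def shannon_entropy(codes):
    """Shannon entropy (in bits) of the empirical distribution of codes."""
    if not codes:
        return 0.0
    counts = Counter(codes)
    total = len(codes)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def multi_channel_entropy(patient):
    """Map each channel name to the entropy of that channel's codes.

    `patient` is a dict of channel name -> list of codes (assumed layout).
    """
    return {channel: shannon_entropy(codes) for channel, codes in patient.items()}


# Hypothetical patient: varied diagnoses, one repeated procedure code.
patient = {
    "diagnosis": ["E11", "I10", "E11", "N18"],
    "procedure": ["99213", "99213", "99213", "99213"],
}
entropy_by_channel = multi_channel_entropy(patient)
```

Under this assumption, a channel with a single repeated code has zero entropy, while a channel with many distinct codes scores higher, which is one way a more heterogeneous profile could be flagged.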

Time Series Prediction using Deep Learning Methods in Healthcare

Aug 30, 2021

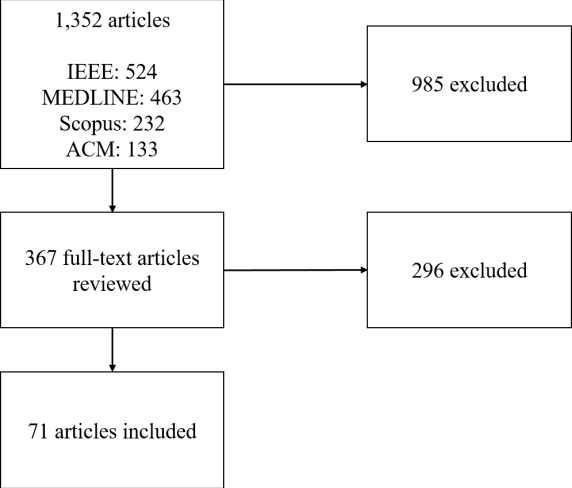

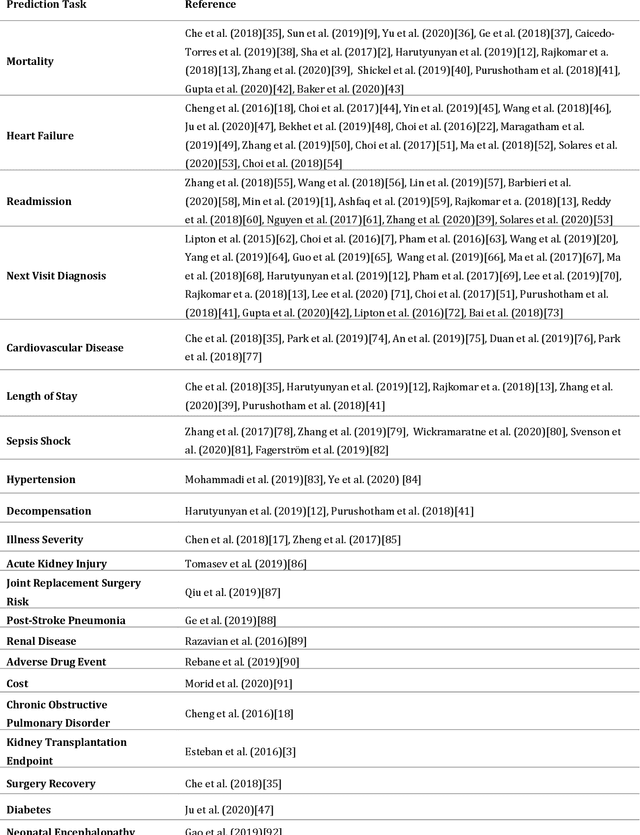

Abstract: Traditional machine learning methods face two main challenges in dealing with healthcare predictive analytics tasks. First, the high-dimensional nature of healthcare data requires labor-intensive and time-consuming processes to select an appropriate set of features for each new task. Second, these methods depend on feature engineering to capture the sequential nature of patient data, which may not adequately leverage the temporal patterns of the medical events and their dependencies. Recent deep learning methods have shown promising performance for various healthcare prediction tasks by addressing the high-dimensional and temporal challenges of medical data. These methods can learn useful representations of key factors (e.g., medical concepts or patients) and their interactions from high-dimensional raw (or minimally-processed) healthcare data. In this paper we systematically reviewed, from a methodological perspective, studies that use deep learning as the prediction model to leverage patient time series data for a healthcare prediction task. To identify relevant studies, MEDLINE, IEEE, Scopus, and the ACM Digital Library were searched for studies published up to February 7, 2021. We found that researchers have contributed to the deep time series prediction literature in ten research streams: deep learning models, missing value handling, irregularity handling, patient representation, static data inclusion, attention mechanisms, interpretation, incorporating medical ontologies, learning strategies, and scalability. This study summarizes research insights from these literature streams, identifies several critical research gaps, and suggests future research opportunities for deep learning in patient time series data.

Learning Hidden Patterns from Patient Multivariate Time Series Data Using Convolutional Neural Networks: A Case Study of Healthcare Cost Prediction

Sep 14, 2020

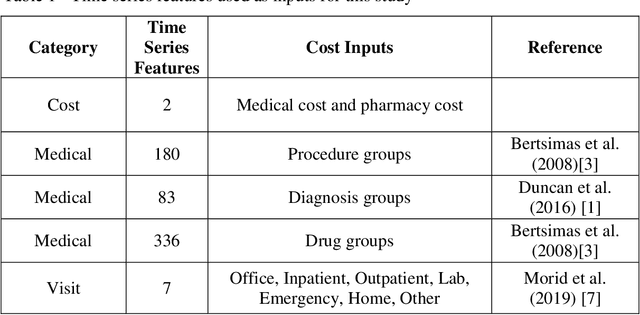

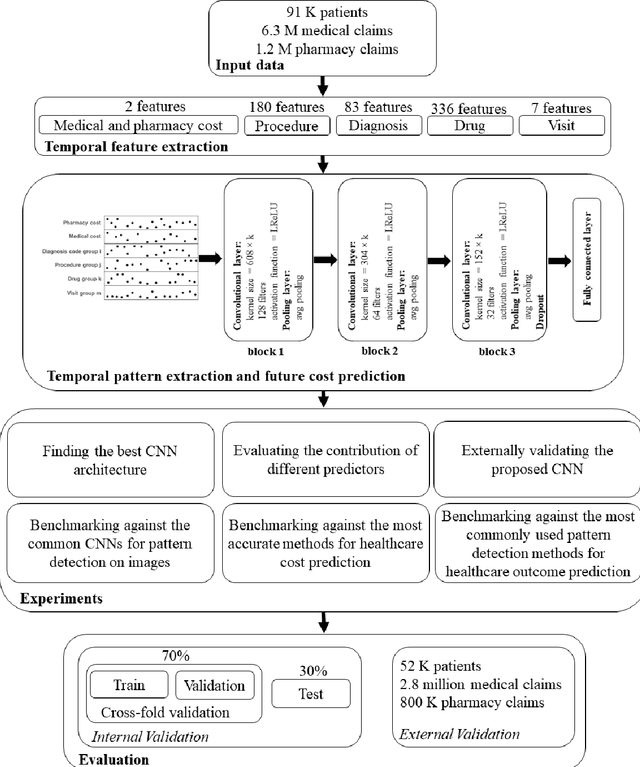

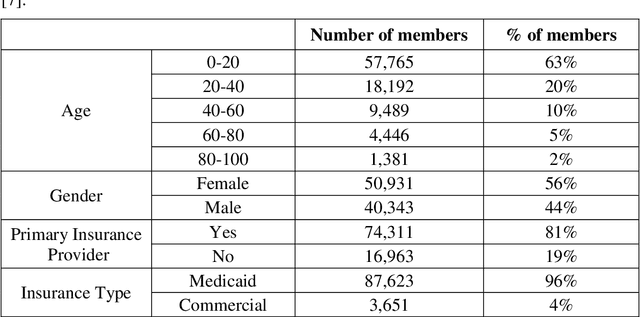

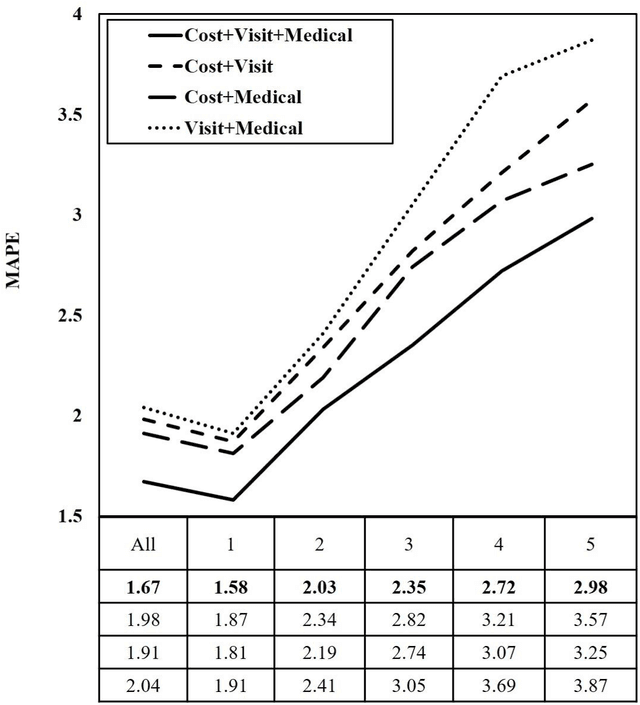

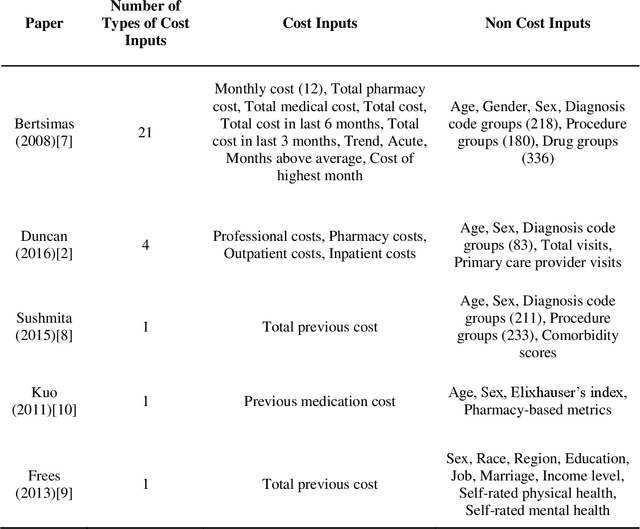

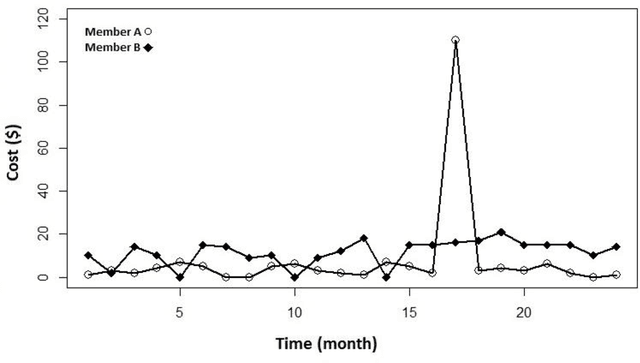

Abstract: Objective: To develop an effective and scalable individual-level patient cost prediction method by automatically learning hidden temporal patterns from multivariate time series data in patient insurance claims using a convolutional neural network (CNN) architecture. Methods: We used three years of medical and pharmacy claims data from 2013 to 2016 from a healthcare insurer, where data from the first two years were used to build the model to predict costs in the third year. The data consisted of the multivariate time series of cost, visit and medical features that were shaped as images of patients' health status (i.e., matrices with time windows on one dimension and the medical, visit and cost features on the other dimension). Patients' multivariate time series images were given to a CNN method with a proposed architecture. After hyper-parameter tuning, the proposed architecture consisted of three building blocks of convolution and pooling layers with an LReLU activation function and a customized kernel size at each layer for healthcare data. The temporal patterns learned by the proposed CNN became inputs to a fully connected layer. Conclusions: Feature learning through the proposed CNN configuration significantly improved individual-level healthcare cost prediction. The proposed CNN was able to outperform temporal pattern detection methods that look for a pre-defined set of pattern shapes, since it is capable of extracting a variable number of patterns with various shapes. Temporal patterns learned from medical, visit and cost data made significant contributions to the prediction performance. Hyper-parameter tuning showed that considering three-month data patterns yields the highest prediction accuracy. Our results showed that patients' images extracted from multivariate time series data are different from regular images, and hence require unique designs of CNN architectures.
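
As a rough illustration of the "patient image" idea, the sketch below arranges claim records into a matrix with features on one axis and time windows on the other, then slides a width-3 kernel along time with a leaky ReLU, loosely echoing the three-month patterns and LReLU activation mentioned above. This is a hand-rolled toy, not the paper's architecture: the record layout, feature names, kernel values, and function names are all assumptions.

```python
def build_patient_image(records, feature_names, n_windows):
    """Build a matrix with one row per feature and one column per time window.

    `records` is a list of (window_index, feature_name, value) tuples
    (an assumed layout); values falling in the same cell are summed.
    """
    row_of = {name: i for i, name in enumerate(feature_names)}
    image = [[0.0] * n_windows for _ in feature_names]
    for window, name, value in records:
        if 0 <= window < n_windows and name in row_of:
            image[row_of[name]][window] += value
    return image


def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: pass positives through, scale negatives by alpha."""
    return x if x > 0 else alpha * x


def temporal_conv(row, kernel):
    """Slide `kernel` along one feature row (no padding), then apply leaky ReLU."""
    k = len(kernel)
    return [
        leaky_relu(sum(row[t + j] * kernel[j] for j in range(k)))
        for t in range(len(row) - k + 1)
    ]


# Hypothetical claims for one patient over four monthly windows.
records = [
    (0, "cost", 100.0),
    (1, "cost", 50.0),
    (1, "visits", 1.0),
    (3, "cost", 400.0),
    (3, "visits", 2.0),
]
image = build_patient_image(records, ["cost", "visits"], n_windows=4)

# A fixed width-3 kernel that responds to a rise over three windows.
# In a real CNN the kernel weights would be learned, not hand-set.
rising = temporal_conv(image[0], [-1.0, 0.0, 1.0])
```

In practice a deep learning library would learn many such kernels jointly; the point here is only to show how the matrix is shaped and what a single temporal filter computes.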

Healthcare Cost Prediction: Leveraging Fine-grain Temporal Patterns

Sep 14, 2020

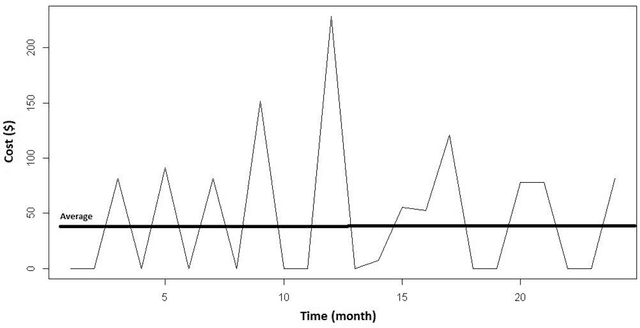

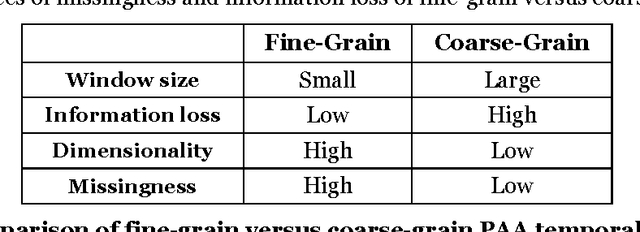

Abstract: Objective: To design and assess a method to leverage individuals' temporal data for predicting their healthcare cost. To achieve this goal, we first used patients' temporal data in their fine-grain form as opposed to coarse-grain form. Second, we devised novel spike detection features to extract temporal patterns that improve the performance of cost prediction. Third, we evaluated the effectiveness of different types of temporal features based on cost information, visit information and medical information for the prediction task. Materials and methods: We used three years of medical and pharmacy claims data from 2013 to 2016 from a healthcare insurer, where the first two years were used to build the model to predict the costs in the third year. To prepare the data for modeling and prediction, the time series data of cost, visit and medical information were extracted in the form of fine-grain features (i.e., segmenting each time series into a sequence of consecutive windows and representing each window by various statistics such as sum). Then, temporal patterns of the time series were extracted and added to fine-grain features using a novel set of spike detection features (i.e., the fluctuation of data points). Gradient Boosting was applied to the final set of extracted features. Moreover, the contribution of each type of data (i.e., cost, visit and medical) was assessed. Conclusions: Leveraging fine-grain temporal patterns for healthcare cost prediction significantly improves prediction performance. Enhancing fine-grain features with extraction of temporal cost and visit patterns significantly improved the performance. However, medical features did not have a significant effect on prediction performance. Gradient Boosting outperformed all other prediction models.
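
The fine-grain windowing step described above can be sketched in a few lines. The spike features below are an assumption made for illustration only: the abstract does not define them beyond "the fluctuation of data points", so this sketch counts points above the mean plus a multiple of the standard deviation and records the largest jump between consecutive points. All names and the example series are hypothetical.

```python
from statistics import mean, pstdev


def window_sums(series, window_size):
    """Segment a series into consecutive windows and sum each window."""
    return [
        sum(series[i:i + window_size])
        for i in range(0, len(series), window_size)
    ]


def spike_features(series, threshold=1.0):
    """Illustrative spike features (assumed definitions, not the paper's).

    n_spikes: points above mean + threshold * population std deviation.
    max_jump: largest absolute change between consecutive points.
    """
    if len(series) < 2:
        return {"n_spikes": 0, "max_jump": 0.0}
    mu, sigma = mean(series), pstdev(series)
    n_spikes = sum(1 for x in series if x > mu + threshold * sigma)
    max_jump = max(abs(b - a) for a, b in zip(series, series[1:]))
    return {"n_spikes": n_spikes, "max_jump": max_jump}


# Hypothetical monthly cost series for one patient over one year.
monthly_cost = [100.0, 120.0, 90.0, 900.0, 110.0, 95.0,
                105.0, 100.0, 130.0, 85.0, 95.0, 100.0]

quarterly = window_sums(monthly_cost, window_size=3)
spikes = spike_features(monthly_cost)
features = quarterly + [spikes["n_spikes"], spikes["max_jump"]]
```

The resulting `features` list is the kind of flat vector that could then be passed to a gradient boosting model; the model-fitting step is omitted here.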

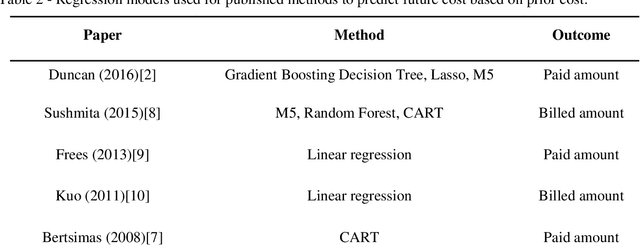

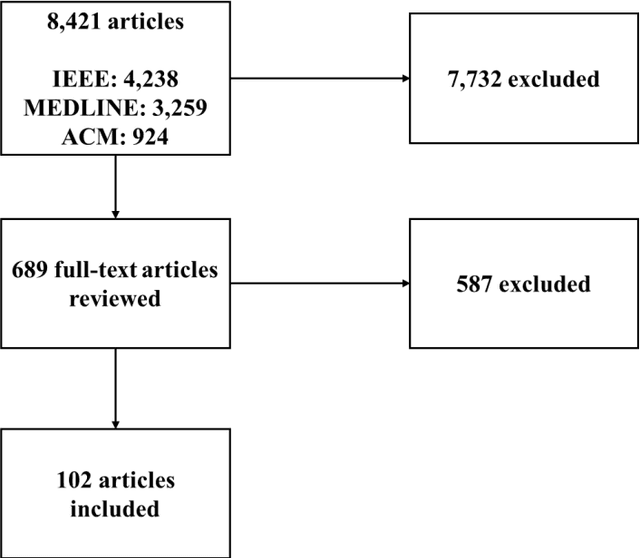

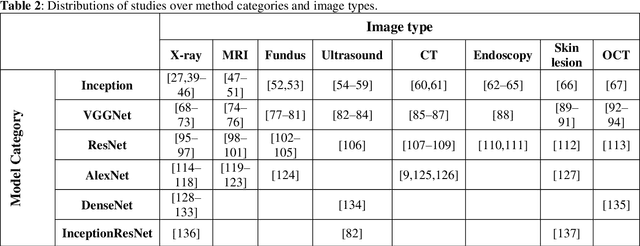

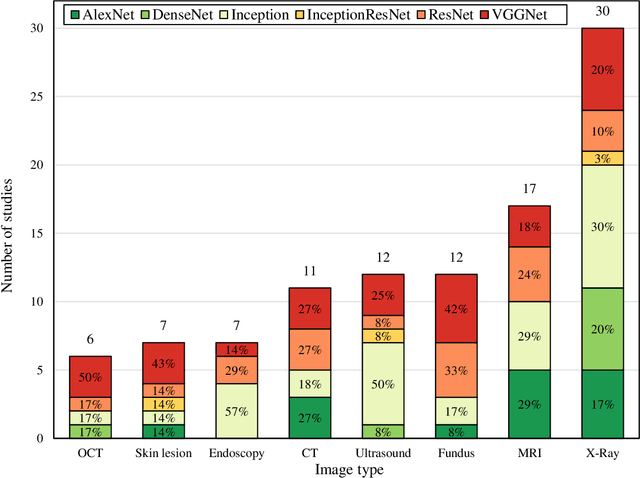

A scoping review of transfer learning research on medical image analysis using ImageNet

Apr 27, 2020

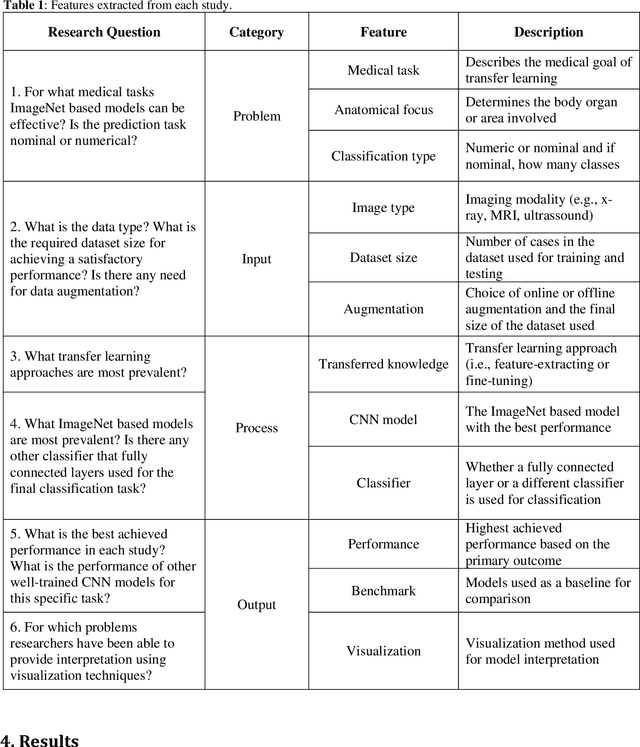

Abstract: Objective: Employing transfer learning (TL) with convolutional neural networks (CNNs), well-trained on the non-medical ImageNet dataset, has shown promising results for medical image analysis in recent years. We aimed to conduct a scoping review to identify these studies and summarize their characteristics in terms of the problem description, input, methodology, and outcome. Materials and Methods: To identify relevant studies, MEDLINE, IEEE, and the ACM Digital Library were searched. Two investigators independently reviewed articles to determine eligibility and to extract data according to a study protocol defined a priori. Results: After screening of 8,421 articles, 102 met the inclusion criteria. Of 22 anatomical areas, eye (18%), breast (14%), and brain (12%) were the most commonly studied. Data augmentation was performed in 72% of fine-tuning TL studies versus 15% of the feature-extracting TL studies. Inception models were the most commonly used in breast-related studies (50%), while VGGNet was the most common in eye (44%), skin (50%) and tooth (57%) studies. AlexNet for brain (42%) and DenseNet for lung studies (38%) were the most frequently used models. Inception models were the most frequently used for studies that analyzed ultrasound (55%), endoscopy (57%), and skeletal system X-rays (57%). VGGNet was the most common for fundus (42%) and optical coherence tomography images (50%). AlexNet was the most frequent model for brain MRIs (36%) and breast X-rays (50%). 35% of the studies compared their model with other well-trained CNN models and 33% of them provided visualization for interpretation. Discussion: Various methods have been used in TL approaches from non-medical to medical image analysis. The findings of the scoping review can be used in future TL studies to guide the selection of appropriate research approaches, as well as identify research gaps and opportunities for innovation.

Leveraging Patient Similarity and Time Series Data in Healthcare Predictive Models

Apr 30, 2017

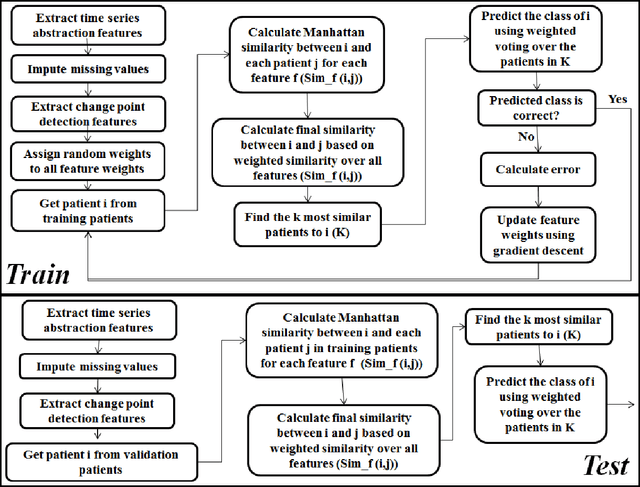

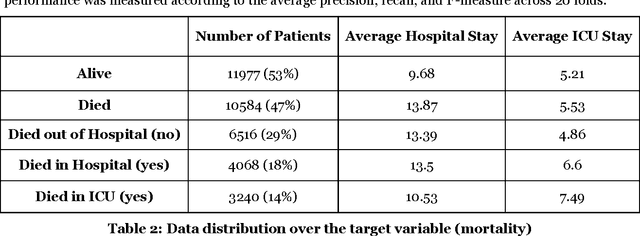

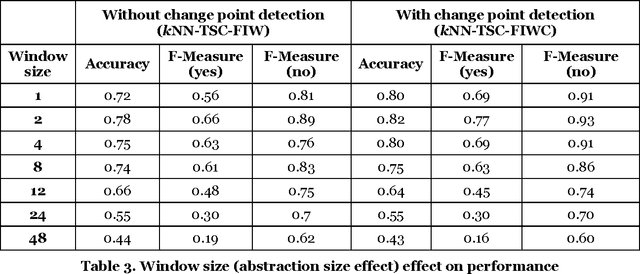

Abstract: Patient time series classification faces challenges in high degrees of dimensionality and missingness. In light of patient similarity theory, this study explores effective temporal feature engineering and reduction, missing value imputation, and change point detection methods that can afford similarity-based classification models with desirable accuracy enhancement. We select a piecewise aggregation approximation method to extract fine-grain temporal features and propose a minimalist method to impute missing values in temporal features. For dimensionality reduction, we adopt a gradient descent search method for feature weight assignment. We propose new patient status and directional change definitions based on medical knowledge or clinical guidelines about the value ranges for different patient status levels, and develop a method to detect change points indicating positive or negative patient status changes. We evaluate the effectiveness of the proposed methods in the context of early Intensive Care Unit (ICU) mortality prediction. The evaluation results show that the k-Nearest Neighbor algorithm that incorporates the methods we select and propose significantly outperforms the relevant benchmarks for early ICU mortality prediction. This study contributes to time series classification and early ICU mortality prediction by identifying and enhancing temporal feature engineering and reduction methods for similarity-based time series classification.
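
Piecewise aggregate approximation, which the abstract selects for fine-grain temporal features, replaces a series with the mean of each of several near-equal segments. The sketch below is a generic version of that technique, not the study's code; the function name and the example heart-rate values are hypothetical, and missing-value imputation is left out.

```python
def paa(series, n_segments):
    """Piecewise aggregate approximation: the mean of each segment.

    The series is split into `n_segments` contiguous, near-equal segments
    using integer boundaries, so lengths differ by at most one point.
    """
    n = len(series)
    if not 0 < n_segments <= n:
        raise ValueError("n_segments must be between 1 and len(series)")
    result = []
    for s in range(n_segments):
        start = s * n // n_segments
        end = (s + 1) * n // n_segments
        segment = series[start:end]
        result.append(sum(segment) / len(segment))
    return result


# Hypothetical heart-rate readings reduced to three temporal features.
heart_rate = [80, 82, 90, 94, 110, 114]
reduced = paa(heart_rate, n_segments=3)
```

Each patient's variable is reduced to a short fixed-length vector this way, which makes distance computations between patients straightforward for a similarity-based classifier such as k-Nearest Neighbor.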

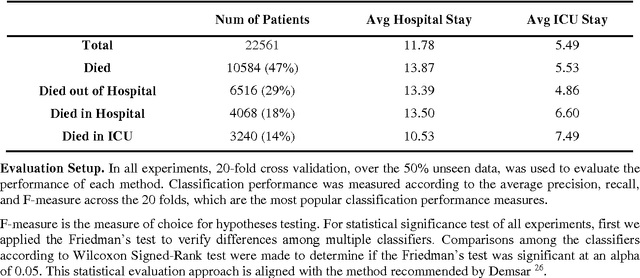

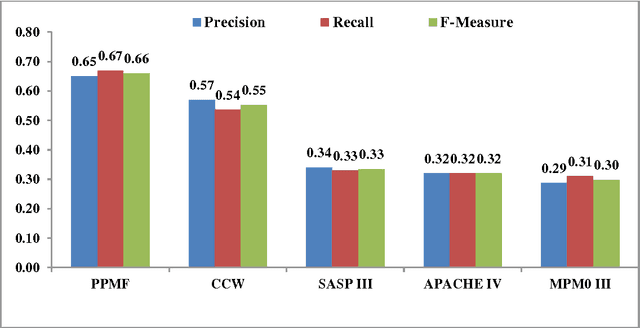

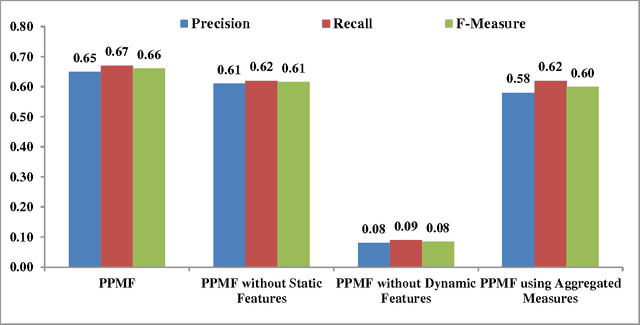

PPMF: A Patient-based Predictive Modeling Framework for Early ICU Mortality Prediction

Apr 25, 2017

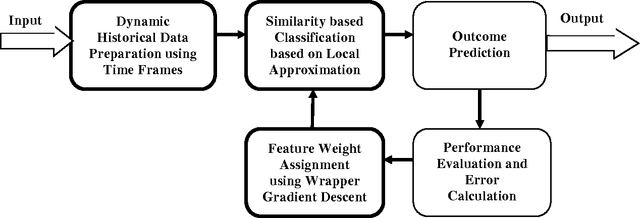

Abstract: To date, developing a good model for early intensive care unit (ICU) mortality prediction is still challenging. This paper presents a patient-based predictive modeling framework (PPMF) to improve the performance of ICU mortality prediction using data collected during the first 48 hours of ICU admission. PPMF consists of three main components verifying three related research hypotheses. The first component captures dynamic changes of patients' status in the ICU using their time series data (e.g., vital signs and laboratory tests). The second component is a local approximation algorithm that classifies patients based on their similarities. The third component is a Gradient Descent wrapper that updates feature weights according to the classification feedback. Experiments using data from MIMIC-III show that PPMF significantly outperforms: (1) the severity score systems, namely SAPS III, APACHE IV, and MPM0-III, (2) the aggregation-based classifiers that utilize summarized time series, and (3) baseline feature selection methods.
