Michelle Ma

The Llama 4 Herd: Architecture, Training, Evaluation, and Deployment Notes

Jan 15, 2026

Abstract: This document consolidates publicly reported technical details about Meta's Llama 4 model family. It summarizes (i) released variants (Scout and Maverick) and the broader herd context, including the previewed Behemoth teacher model, (ii) architectural characteristics beyond a high-level MoE description, covering routed/shared-expert structure, early-fusion multimodality, and long-context design elements reported for Scout (iRoPE and length generalization strategies), (iii) training disclosures spanning pre-training, mid-training for long-context extension, and post-training methodology (lightweight SFT, online RL, and lightweight DPO) as described in release materials, (iv) developer-reported benchmark results for both base and instruction-tuned checkpoints, and (v) practical deployment constraints observed across major serving environments, including provider-specific context limits and quantization packaging. The manuscript also summarizes licensing obligations relevant to redistribution and derivative naming, and reviews publicly described safeguards and evaluation practices. The goal is to provide a compact technical reference for researchers and practitioners who need precise, source-backed facts about Llama 4.

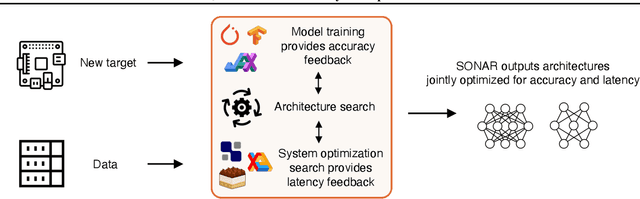

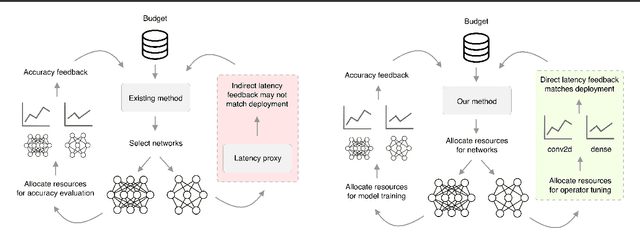

SONAR: Joint Architecture and System Optimization Search

Aug 25, 2022

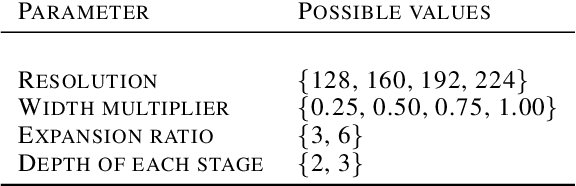

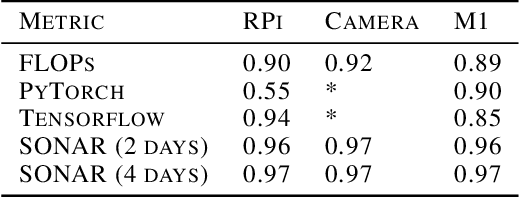

Abstract: There is a growing need to deploy machine learning for different tasks on a wide array of new hardware platforms. Such deployment scenarios require tackling multiple challenges, including identifying a model architecture that can achieve a suitable predictive accuracy (architecture search), and finding an efficient implementation of the model to satisfy underlying hardware-specific systems constraints such as latency (system optimization search). Existing works treat architecture search and system optimization search as separate problems and solve them sequentially. In this paper, we instead propose to solve these problems jointly, and introduce a simple but effective baseline method called SONAR that interleaves these two search problems. SONAR aims to efficiently optimize for predictive accuracy and inference latency by applying early stopping to both search processes. Our experiments on multiple different hardware back-ends show that SONAR identifies nearly optimal architectures 30 times faster than a brute force approach.
