Max Larrazabal

AudioInsight: Detecting Social Contexts Relevant to Social Anxiety from Speech

Jul 19, 2024

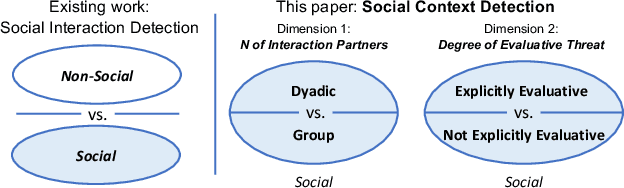

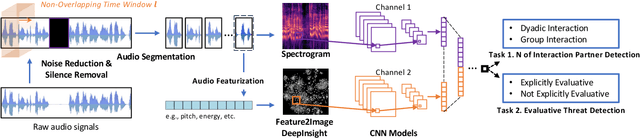

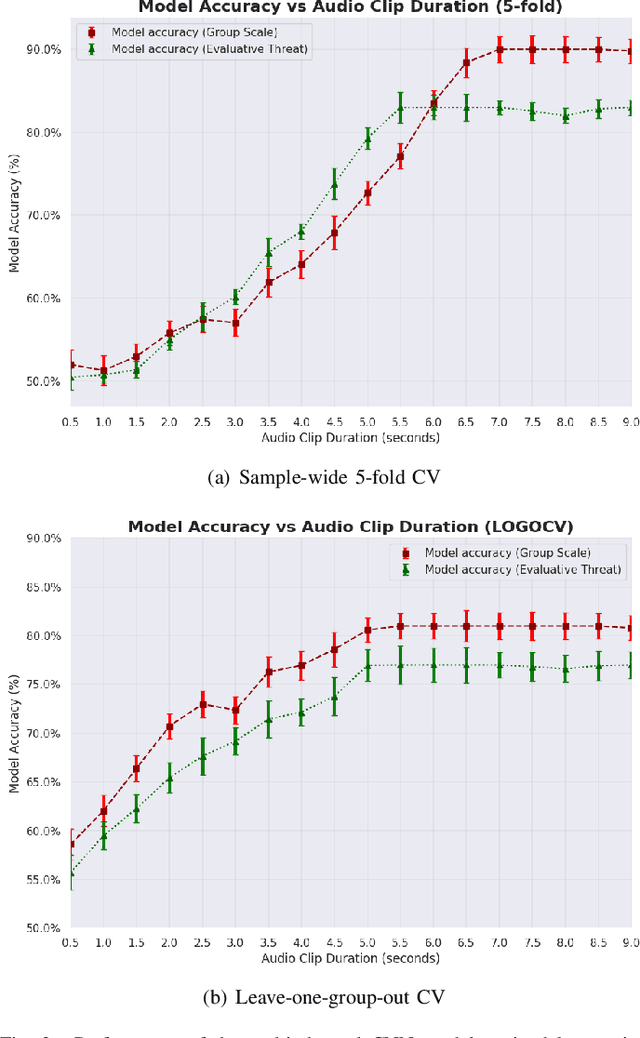

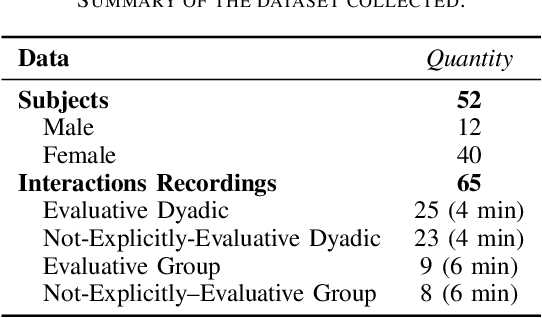

Abstract: During social interactions, understanding the intricacies of the context can be vital, particularly for socially anxious individuals. While previous research has found that the presence of a social interaction can be detected from ambient audio, the nuances within social contexts, which influence how anxiety-provoking an interaction is, remain largely unexplored. As an alternative to traditional, burdensome methods like self-report, this study presents a novel approach that uses ambient audio segments to detect social threat contexts. We focus on two key dimensions: number of interaction partners (dyadic vs. group) and degree of evaluative threat (explicitly evaluative vs. not explicitly evaluative). Building on data from a Zoom-based social interaction study (N=52 college students, 45 of whom are socially anxious), we employ deep learning methods to achieve strong detection performance. Under sample-wide 5-fold cross-validation (CV), our model distinguished dyadic from group interactions with 90% accuracy and detected evaluative threat with 83% accuracy. Under leave-one-group-out CV, accuracies were 82% and 77%, respectively. While our data are based on virtual interactions due to pandemic constraints, our method has the potential to extend to diverse real-world settings. This research underscores the potential of passive sensing and AI to differentiate intricate social contexts, and may ultimately advance the ability of context-aware digital interventions to offer personalized mental health support.
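The two evaluation schemes named in the abstract differ in how audio segments are assigned to folds, which may help explain why the leave-one-group-out accuracies are lower. The sketch below is illustrative only and is not the authors' code. It assumes a "group" is a set of segments recorded together (the abstract does not define the term), and the toy data and helper names (`kfold_splits`, `leave_one_group_out_splits`, `shares_group`) are hypothetical.

```python
import random
from collections import defaultdict


def kfold_splits(n_samples, k=5, seed=0):
    """Sample-wide k-fold: shuffle segment indices, ignoring group membership."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


def leave_one_group_out_splits(groups):
    """Leave-one-group-out: each fold holds out every segment of one group."""
    by_group = defaultdict(list)
    for i, g in enumerate(groups):
        by_group[g].append(i)
    for held_out in sorted(by_group):
        test = by_group[held_out]
        train = [i for g, members in by_group.items()
                 if g != held_out for i in members]
        yield train, test


def shares_group(train, test, groups):
    """True if any group contributes segments to both train and test."""
    return bool({groups[i] for i in train} & {groups[i] for i in test})


# Hypothetical toy data: 6 groups with 10 audio segments each.
groups = [g for g in range(6) for _ in range(10)]

kfold_leaks = [shares_group(tr, te, groups)
               for tr, te in kfold_splits(len(groups))]
logo_leaks = [shares_group(tr, te, groups)
              for tr, te in leave_one_group_out_splits(groups)]

print("k-fold folds sharing a group across train/test:", sum(kfold_leaks))
print("leave-one-group-out folds sharing a group:", sum(logo_leaks))
```

In this toy setup, every sample-wide fold tests on segments whose group also appears in training, while leave-one-group-out never does, so it is the stricter estimate of performance on unseen groups.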
