Matt Rowe

Few-shot Learning with Deep Triplet Networks for Brain Imaging Modality Recognition

Aug 27, 2019

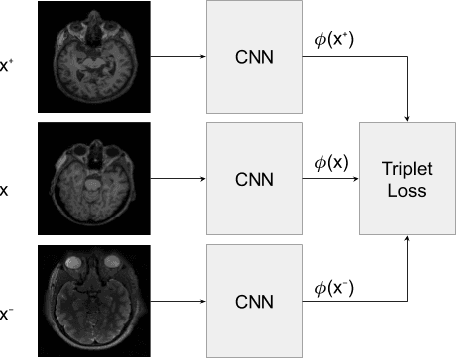

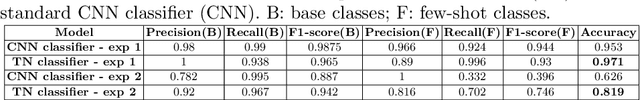

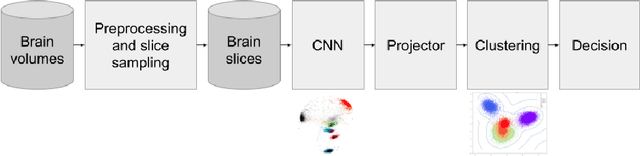

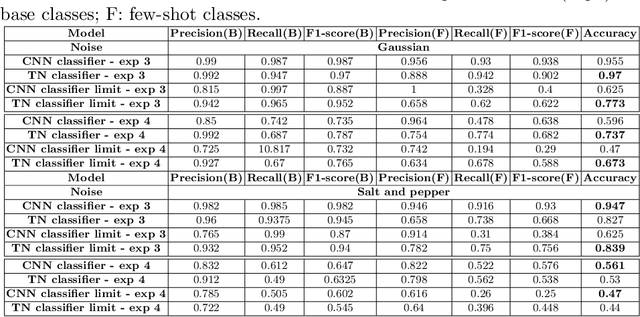

Abstract: Image modality recognition is essential for efficient imaging workflows in current clinical environments, where multiple imaging modalities are used to better comprehend complex diseases. Emerging biomarkers from novel, rare modalities are being developed to aid such understanding; however, the availability of these images is often limited. This makes it necessary to recognise new imaging modalities without collecting and annotating them in large amounts. In this work, we present a few-shot learning model for limited training examples based on Deep Triplet Networks. We show that the proposed model distinguishes different modalities more accurately than a traditional Convolutional Neural Network classifier when limited samples are available. Furthermore, we evaluate the performance of both classifiers when presented with noisy samples, and we provide an initial inspection of how the proposed model can incorporate measures of uncertainty to be more robust against out-of-sample examples.
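To make the triplet idea concrete, the following is a minimal sketch of the standard triplet margin loss and a nearest-neighbour few-shot prediction in embedding space. It is not the paper's implementation: the abstract does not specify the loss variant, the margin, the distance, or the classification rule, so the function names `triplet_loss` and `nearest_support_label`, the margin of 1.0, the squared Euclidean distance, and the modality labels in the usage lines are all assumptions for illustration.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss over batches of embeddings, shape (n, d).

    Assumption: squared Euclidean distance and margin=1.0; the paper's
    exact choices are not stated in the abstract.
    """
    d_pos = np.sum((anchor - positive) ** 2, axis=1)  # anchor to same-class sample
    d_neg = np.sum((anchor - negative) ** 2, axis=1)  # anchor to other-class sample
    # Penalise triplets where the negative is not at least `margin` farther away.
    return float(np.maximum(d_pos - d_neg + margin, 0.0).mean())


def nearest_support_label(query, support_embeddings, support_labels):
    """Label a query embedding with the label of its closest support example.

    Hypothetical few-shot decision rule: one or a few labelled embeddings
    per modality form the support set.
    """
    dists = np.linalg.norm(support_embeddings - query, axis=1)
    return support_labels[int(np.argmin(dists))]


# Usage with toy 2-D embeddings (labels are illustrative placeholders).
anchor = np.array([[0.0, 0.0]])
positive = np.array([[0.0, 1.0]])
far_negative = np.array([[3.0, 0.0]])
near_negative = np.array([[1.0, 0.0]])

loss_easy = triplet_loss(anchor, positive, far_negative)
loss_hard = triplet_loss(anchor, positive, near_negative)

support = np.array([[0.0, 0.0], [5.0, 5.0]])
labels = ["modality_A", "modality_B"]
predicted = nearest_support_label(np.array([4.0, 4.0]), support, labels)
```

The loss is zero once the negative sits at least one margin farther from the anchor than the positive does, which is why a well-separated embedding space can support classification from only a handful of labelled examples per modality.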
