Matjaž Perc

Epistemological Fault Lines Between Human and Artificial Intelligence

Dec 22, 2025

Abstract: Large language models (LLMs) are widely described as artificial intelligence, yet their epistemic profile diverges sharply from human cognition. Here we show that the apparent alignment between human and machine outputs conceals a deeper structural mismatch in how judgments are produced. Tracing the historical shift from symbolic AI and information filtering systems to large-scale generative transformers, we argue that LLMs are not epistemic agents but stochastic pattern-completion systems, formally describable as walks on high-dimensional graphs of linguistic transitions rather than as systems that form beliefs or models of the world. By systematically mapping human and artificial epistemic pipelines, we identify seven epistemic fault lines, divergences in grounding, parsing, experience, motivation, causal reasoning, metacognition, and value. We call the resulting condition Epistemia: a structural situation in which linguistic plausibility substitutes for epistemic evaluation, producing the feeling of knowing without the labor of judgment. We conclude by outlining consequences for evaluation, governance, and epistemic literacy in societies increasingly organized around generative AI.
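To make the phrase "walks on graphs of linguistic transitions" concrete, the following is a minimal toy sketch and not the paper's formalism. It assumes a tiny first-order transition graph (the `TRANSITIONS` table, its tokens, and its weights are all invented for illustration) and samples a path through it. A real transformer conditions on long contexts and is far richer than this; the sketch only shows what stochastic pattern completion over weighted edges looks like.

```python
import random

# Hypothetical toy graph: each token maps to possible next tokens with weights.
# These tokens and probabilities are illustrative assumptions, not data.
TRANSITIONS = {
    "the": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"sat": 0.4, "ran": 0.6},
    "sat": {"down": 1.0},
    "ran": {"away": 1.0},
}


def walk(start, max_steps, rng):
    """Follow weighted edges from `start` until a dead end or `max_steps`."""
    path = [start]
    current = start
    for _ in range(max_steps):
        edges = TRANSITIONS.get(current)
        if not edges:
            break  # no outgoing edges: the walk ends here
        tokens = list(edges)
        weights = [edges[t] for t in tokens]
        # Sample the next token in proportion to its edge weight.
        current = rng.choices(tokens, weights=weights, k=1)[0]
        path.append(current)
    return path


if __name__ == "__main__":
    print(" ".join(walk("the", 5, random.Random(0))))
```

Nothing in the walk checks whether the resulting sentence is true; each step depends only on which continuation is likely given the current token.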

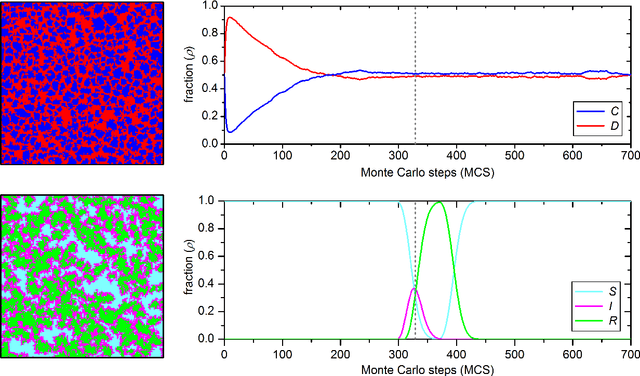

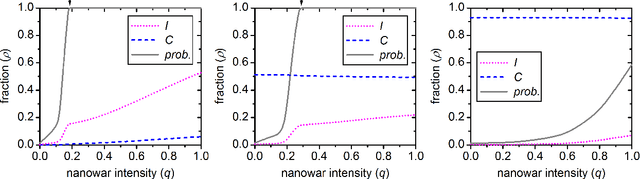

Nanowars can cause epidemic resurgence and fail to promote cooperation

Jan 13, 2022

Abstract: In a non-sustainable, "over-populated" world, what might the use of nanotechnology-based targeted, autonomous weapons mean for the future of humanity? To gain some insight, we conduct a simplified game-theoretical thought experiment. We consider a population where agents play the public goods game, and where in parallel an epidemic unfolds. Agents that are infected defectors are killed with a certain probability and replaced by susceptible cooperators. We show that such "nanowars", even if aiming to promote good behavior and planetary health, not only fail to promote cooperation but also significantly increase the probability of recurrent epidemic waves. In fact, newborn cooperators turn out to be easy targets for defectors in their neighborhood. Therefore, counterintuitively, the discussed intervention may even have the opposite of the desired effect, promoting defection. We also find a critical threshold for the death rate of infected defectors, beyond which resurgent epidemic waves become a certainty. In conclusion, we urgently call for international regulation of nanotechnology and autonomous weapons.
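The two ingredients named in the abstract, a public goods game and the replacement of infected defectors by susceptible cooperators, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' model: the standard single-group payoff rule, the unit `cost`, the dictionary layout of an agent, and the names `public_goods_payoffs` and `apply_intervention` are all hypothetical, and the network structure and epidemic dynamics are omitted.

```python
import random


def public_goods_payoffs(strategies, r, cost=1.0):
    """Payoffs for one group in a standard public goods game.

    Each cooperator ("C") pays `cost` into a pool; the pool is multiplied
    by `r` and split equally among all group members, defectors included.
    """
    n = len(strategies)
    contributors = sum(1 for s in strategies if s == "C")
    share = r * cost * contributors / n
    return [share - cost if s == "C" else share for s in strategies]


def apply_intervention(agents, death_prob, rng):
    """Kill infected defectors with probability `death_prob`.

    Each killed agent is replaced in place by a susceptible cooperator,
    as described in the abstract. The agent layout (dicts with "strategy"
    and "infected" keys) is an assumption made for this sketch.
    """
    for agent in agents:
        is_target = agent["strategy"] == "D" and agent["infected"]
        if is_target and rng.random() < death_prob:
            agent["strategy"] = "C"
            agent["infected"] = False
    return agents


if __name__ == "__main__":
    print(public_goods_payoffs(["C", "C", "D", "D"], r=2.0))
    population = [
        {"strategy": "D", "infected": True},
        {"strategy": "D", "infected": False},
        {"strategy": "C", "infected": True},
    ]
    print(apply_intervention(population, death_prob=0.5, rng=random.Random(0)))
```

In the mixed group of the usage example, defectors earn more than cooperators, which gives some intuition for why freshly inserted cooperators can be exploited by defecting neighbors.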

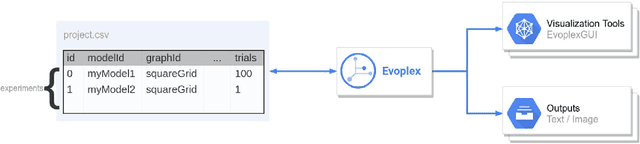

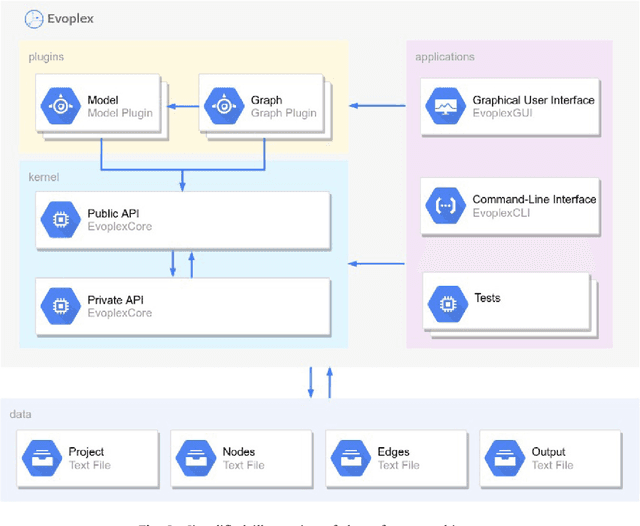

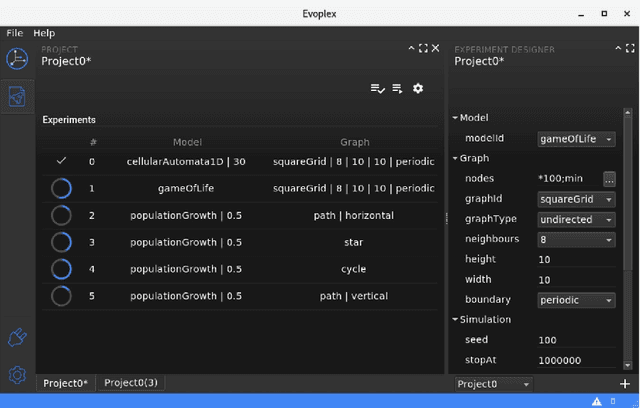

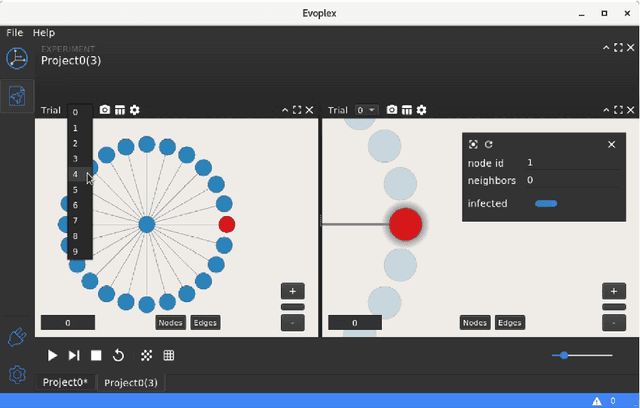

Evoplex: A platform for agent-based modeling on networks

Nov 25, 2018

Abstract: Evoplex is a fast, robust and extensible platform for developing agent-based models and multi-agent systems on networks. Each agent is represented as a node and interacts with its neighbors, as defined by the network structure. Evoplex is ideal for modeling complex systems, for example in evolutionary game theory and computational social science. In Evoplex, the models are not coupled to the execution parameters or the visualization tools, and a user-friendly graphical user interface makes it easy for all users, from newcomers to experienced researchers, to create, analyze, replicate and reproduce experiments.
