Masoud Babaei

DeepAngle: Fast calculation of contact angles in tomography images using deep learning

Nov 28, 2022

Abstract: DeepAngle is a machine-learning-based method for determining the contact angles of different phases in tomography images of porous materials. In 3-D, angles must be measured within the surface perpendicular to the angle planes, and the measurement can become inaccurate in the discretized space of image voxels. A computationally intensive solution is to correlate and vectorize all surfaces using an adaptable grid and then measure the angles within the desired planes. In contrast, the present study provides a rapid, low-cost technique powered by deep learning that estimates the interfacial angles directly from images. DeepAngle is tested on both synthetic and realistic images against the direct measurement technique and is found to improve the r-squared by 5 to 16% while lowering the computational cost 20 times. This rapid method is especially applicable to large tomography datasets and time-resolved images, which are computationally intensive to process. The developed code and dataset are available in an open repository on GitHub (https://www.github.com/ArashRabbani/DeepAngle).
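To illustrate the geometric idea behind a direct measurement (not the DeepAngle model itself), here is a minimal sketch that computes the angle between two surfaces at a point from their normal vectors. The function name `angle_between_normals` and the example normals are hypothetical, and how this angle maps to a reported contact angle depends on the normal orientation convention, which the abstract does not specify.

```python
import math


def angle_between_normals(n1, n2):
    """Return the angle in degrees between two 3-D normal vectors."""
    dot = sum(a * b for a, b in zip(n1, n2))
    norm1 = math.sqrt(sum(a * a for a in n1))
    norm2 = math.sqrt(sum(b * b for b in n2))
    if norm1 == 0.0 or norm2 == 0.0:
        raise ValueError("normal vectors must be non-zero")
    # Clamp to [-1, 1] to guard against floating-point round-off before acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm1 * norm2)))
    return math.degrees(math.acos(cos_theta))


# Hypothetical normals of a solid surface and a fluid-fluid interface
# estimated at one point on the contact line.
solid_normal = (0.0, 0.0, 1.0)
interface_normal = (1.0, 0.0, 1.0)
print(angle_between_normals(solid_normal, interface_normal))
```

In voxelized images the normals themselves must be estimated from a stair-stepped surface, which is one source of the inaccuracy the abstract mentions.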

Automated segmentation and morphological characterization of placental histology images based on a single labeled image

Oct 07, 2022

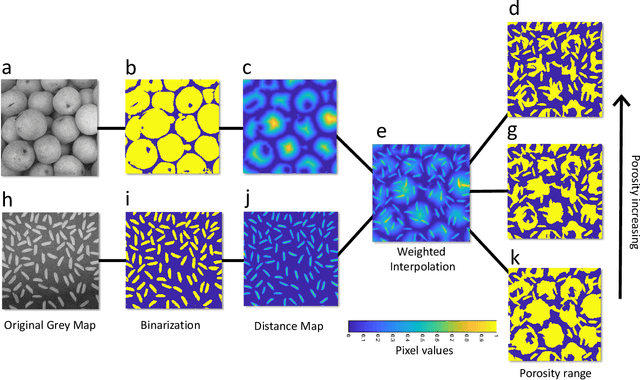

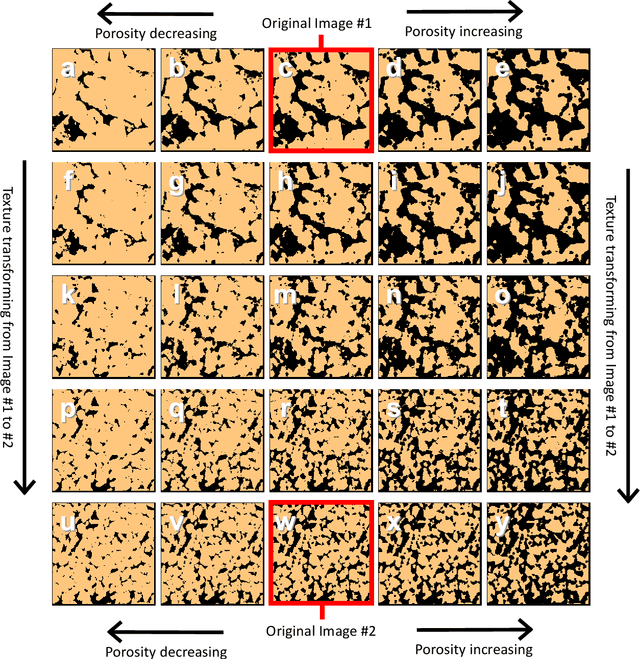

Abstract: In this study, a novel data augmentation method is presented for segmenting placental histological images when labeled data are scarce. The method generates new realizations of the placenta intervillous morphology while maintaining the general textures and orientations. The result is a diversified artificial dataset of images that can be used to train deep learning segmentation models. On average, the presented data augmentation method led to a 42% decrease in the binary cross-entropy loss on the validation dataset compared to the common approach in the literature. Additionally, the morphology of the intervillous space is studied under the effect of the proposed image reconstruction technique, and the diversity of the artificially generated population is quantified. Because the generated images closely resemble the real ones, the applications of the proposed method may not be limited to placental histological images, and it is recommended that other types of tissue be investigated in future studies.

Resolution enhancement of placenta histological images using deep learning

Jul 30, 2022

Abstract: In this study, a method has been developed to improve the resolution of histological human placenta images. For this purpose, a paired series of high- and low-resolution images has been collected to train a deep neural network model that predicts the image residuals required to improve the resolution of the input images. A modified version of the U-net neural network model has been tailored to find the relationship between the low-resolution and residual images. After training for 900 epochs on an augmented dataset of 1000 images, a relative mean squared error of 0.003 is achieved for the prediction of 320 test images. The proposed method has not only improved the contrast of the low-resolution images at the edges of cells but also added critical details and textures that mimic high-resolution images of the placenta villous space.
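The residual formulation described above can be sketched in a few lines. This is only an illustration under stated assumptions: the low-resolution image is assumed to be already resampled to the high-resolution grid, the helper names are hypothetical, and the relative mean squared error is taken here as the squared error divided by the squared magnitude of the reference, which may differ from the definition used in the paper.

```python
def residual_target(high_res, low_res):
    """Training target: what must be added to the low-res image to recover the high-res one."""
    return [[h - l for h, l in zip(h_row, l_row)]
            for h_row, l_row in zip(high_res, low_res)]


def apply_residual(low_res, residual):
    """Reconstruction: low-res input plus the (predicted) residual."""
    return [[l + r for l, r in zip(l_row, r_row)]
            for l_row, r_row in zip(low_res, residual)]


def relative_mse(predicted, reference):
    """Assumed definition: sum of squared errors over sum of squared reference values."""
    num = sum((p - t) ** 2
              for p_row, t_row in zip(predicted, reference)
              for p, t in zip(p_row, t_row))
    den = sum(t ** 2 for t_row in reference for t in t_row)
    if den == 0.0:
        raise ValueError("reference image must not be all zeros")
    return num / den


# Tiny made-up 2x2 grayscale patches on the same pixel grid.
low = [[0.2, 0.4], [0.6, 0.8]]
high = [[0.25, 0.35], [0.65, 0.9]]

residual = residual_target(high, low)          # what a network would learn to predict
reconstructed = apply_residual(low, residual)  # a perfect prediction recovers `high`
print(relative_mse(low, high), relative_mse(reconstructed, high))
```

Predicting the residual rather than the full image means the network only has to model the missing detail, since the low-resolution input already carries most of the image content.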

DeePore: a deep learning workflow for rapid and comprehensive characterization of porous materials

May 03, 2020

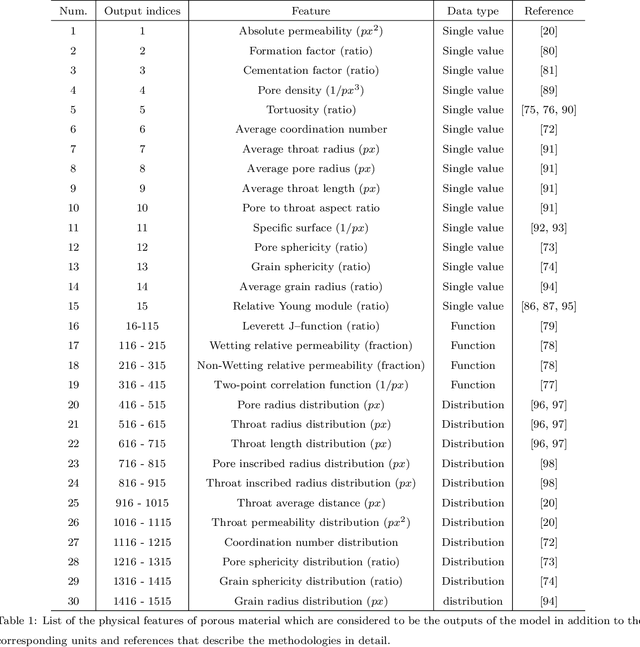

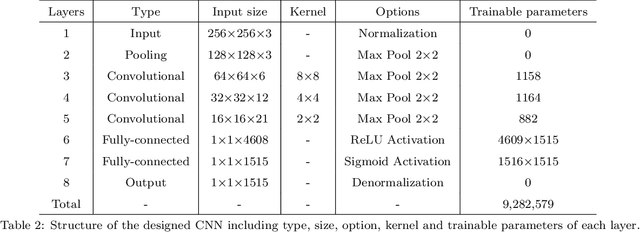

Abstract: DeePore is a deep learning workflow for rapid estimation of a wide range of porous material properties based on binarized micro-tomography images. We generated 17,700 semi-real 3-D micro-structures of porous geo-materials, and 30 physical properties of each sample were calculated using physical simulations on the corresponding pore network models. The resulting dataset of porous material images and their physical features is unprecedented in the number of samples and the variety of extracted features. Next, a re-designed feed-forward convolutional neural network is trained on the dataset to estimate several morphological, hydraulic, electrical, and mechanical characteristics of porous material in a fraction of a second. The average coefficient of determination ($R^2$) for 3173 testing samples is 0.9385, which is very reasonable considering the wide range of micro-structure textures and extracted features. This workflow is compatible with any physical size of the images due to its dimensionless approach.
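For readers unfamiliar with the metric, an average coefficient of determination over several predicted properties can be computed as in the sketch below. The function names and the toy numbers are hypothetical, and the abstract does not state how the average was taken; a plain mean of per-property $R^2$ values is assumed here.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined when the true values are constant")
    return 1.0 - ss_res / ss_tot


def mean_r_squared(true_by_property, pred_by_property):
    """Assumed averaging: unweighted mean of R^2 over all properties."""
    scores = [r_squared(t, p) for t, p in zip(true_by_property, pred_by_property)]
    return sum(scores) / len(scores)


# Made-up values for two properties over four test samples.
true_values = [[0.10, 0.20, 0.30, 0.40], [1.0, 2.0, 3.0, 4.0]]
predictions = [[0.12, 0.19, 0.31, 0.38], [1.1, 1.9, 3.2, 3.9]]
print(mean_r_squared(true_values, predictions))
```

Because $R^2$ is normalized by the variance of each property, averaging it across properties with very different units and ranges stays meaningful.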
