Maryam Alimardani

Would You Rely on an Eerie Agent? A Systematic Review of the Impact of the Uncanny Valley Effect on Trust in Human-Agent Interaction

May 08, 2025

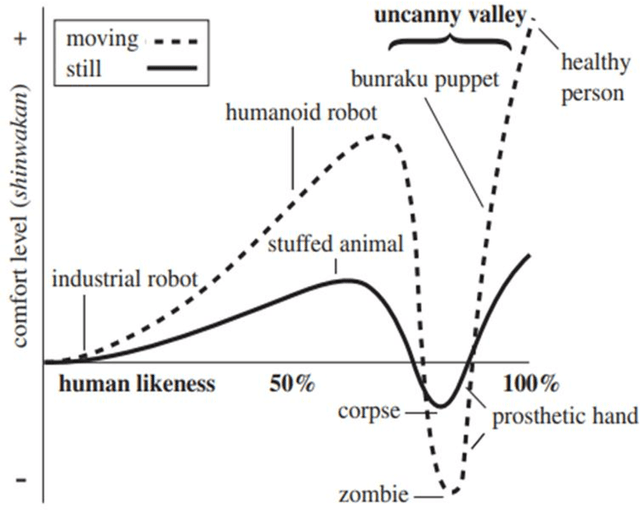

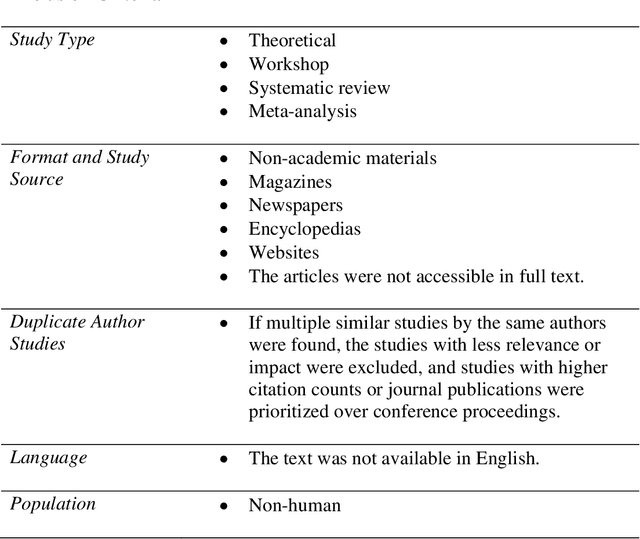

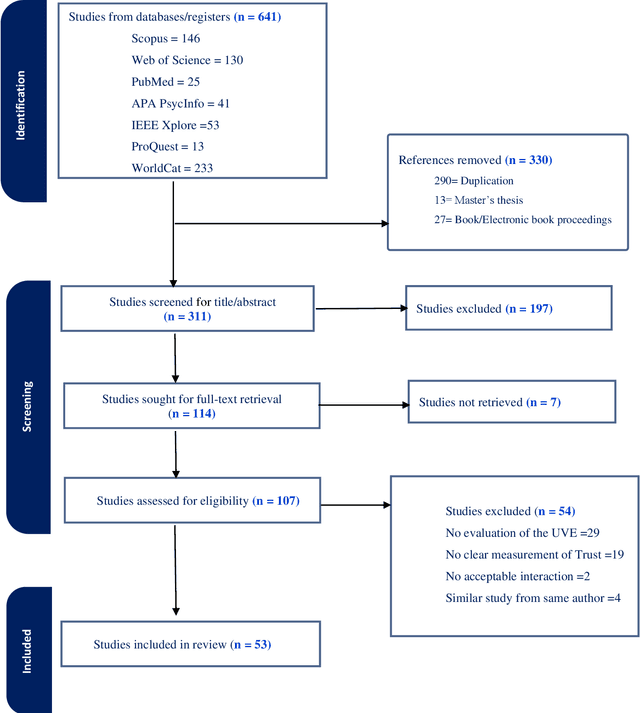

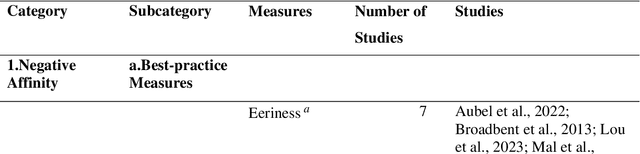

Abstract: Trust is a fundamental component of human-agent interaction. With the increasing presence of artificial agents in daily life, it is essential to understand how people perceive and trust these agents. One of the key challenges affecting this perception is the Uncanny Valley Effect (UVE), where increasingly human-like artificial beings can be perceived as eerie or repellent. Despite growing interest in trust and the UVE, existing research varies widely in terms of how these concepts are defined and operationalized. This inconsistency raises important questions about how and under what conditions the UVE influences trust in agents. A systematic understanding of their relationship is currently lacking. This review aims to examine the impact of the UVE on human trust in agents and to identify methodological patterns, limitations, and gaps in the existing empirical literature. Following PRISMA guidelines, a systematic search identified 53 empirical studies that investigated both UVE-related constructs and trust or trust-related outcomes. Studies were analyzed based on a structured set of categories, including types of agents and interactions, methodological and measurement approaches, and key findings. The results of our systematic review reveal that most studies rely on static images or hypothetical scenarios with limited real-time interaction, and the majority use subjective trust measures. This review offers a novel framework for classifying trust measurement approaches with regard to the best-practice criteria for empirically investigating the UVE. As the first systematic attempt to map the intersection of UVE and trust, this review contributes to a deeper understanding of their interplay and offers a foundation for future research.

Keywords: the uncanny valley effect, trust, human-likeness, affinity response, human-agent interaction

Predicting Workload in Virtual Flight Simulations using EEG Features (Including Post-hoc Analysis in Appendix)

Dec 17, 2024

Abstract: Effective cognitive workload management has a major impact on the safety and performance of pilots. Integrating brain-computer interfaces (BCIs) presents an opportunity for real-time workload assessment. Leveraging cognitive workload data from immersive, high-fidelity virtual reality (VR) flight simulations enhances ecological validity and allows for dynamic adjustments to training scenarios based on individual cognitive states. While prior studies have predominantly concentrated on EEG spectral power for workload prediction, delving into inter-brain connectivity may yield deeper insights. This study assessed the predictive value of EEG spectral and connectivity features in distinguishing high vs. low workload periods during simulated flight in VR and Desktop conditions. EEG data were collected from 52 non-pilot participants conducting flight tasks in an aircraft simulation, after which they reported cognitive workload using the NASA Task Load Index. Using an ensemble approach, a stacked classifier was trained to predict workload using two feature sets extracted from the EEG data: 1) spectral features (Baseline model), and 2) a combination of spectral and connectivity features (Connectivity model), both within the alpha, beta, and theta band ranges. Results showed that the performance of the Connectivity model surpassed the Baseline model. Additionally, Recursive Feature Elimination (RFE) provided insights into the most influential workload-predicting features, highlighting the potential dominance of parietal-directed connectivity in managing cognitive workload during simulated flight. Further research on other connectivity metrics and alternative models (such as deep learning) in a large sample of pilots is essential to validate the possibility of a real-time BCI for the prediction of workload under safety-critical operational conditions.
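
The spectral features of the Baseline model are band powers in the theta, alpha and beta ranges. The snippet below is a minimal, dependency-free sketch of how such features might be computed for a single EEG channel. The band edges, the plain periodogram (a real pipeline would more likely use Welch's method or similar) and all function names are illustrative assumptions, not the study's pipeline. Connectivity features, the stacked classifier and RFE are not shown.

```python
import cmath
import math

# Band edges in Hz, half-open [low, high). These are common conventions and an
# assumption here, not necessarily the ranges used in the study.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def periodogram(signal, fs):
    """Return (frequencies, power) of the unscaled one-sided periodogram via a naive DFT."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def band_powers(signal, fs, bands=BANDS):
    """Sum periodogram power inside each frequency band for one EEG channel."""
    freqs, power = periodogram(signal, fs)
    return {
        name: sum(p for f, p in zip(freqs, power) if lo <= f < hi)
        for name, (lo, hi) in bands.items()
    }
```

For example, a 1 s, 10 Hz sine sampled at 128 Hz puts essentially all of its power in the `alpha` entry. The naive DFT is O(n²) and is only meant for illustration.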

End-to-End Deep Transfer Learning for Calibration-free Motor Imagery Brain Computer Interfaces

Jul 24, 2023

Abstract: A major issue in Motor Imagery Brain-Computer Interfaces (MI-BCIs) is their poor classification accuracy and the large amount of data required for subject-specific calibration. This makes BCIs less accessible to general users in out-of-the-lab applications. This study employed deep transfer learning to develop calibration-free, subject-independent MI-BCI classifiers. Unlike earlier works that applied signal preprocessing and feature engineering steps in transfer learning, this study adopted an end-to-end deep learning approach on raw EEG signals. Three deep learning models (MIN2Net, EEGNet and DeepConvNet) were trained and compared using an openly available dataset. The dataset contained EEG signals from 55 subjects who performed a left- vs. right-hand motor imagery task. To evaluate the performance of each model, leave-one-subject-out cross-validation was used. The results of the models differed significantly. MIN2Net was not able to differentiate right- vs. left-hand motor imagery of new users, with a median accuracy of 51.7%. The other two models performed better, with median accuracies of 62.5% for EEGNet and 59.2% for DeepConvNet. These accuracies do not reach the 70% threshold needed for significant control; however, they are similar to the accuracies of these models when tested on other datasets without transfer learning.
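
The leave-one-subject-out protocol can be sketched in plain Python as follows. Each fold holds out every trial of one subject and trains on the rest, and the median of the per-fold accuracies is reported, matching how the results above are summarized. The `fit`/`predict` callables stand in for a model such as EEGNet. The majority-class baseline is a hypothetical placeholder, not one of the models evaluated in the study.

```python
from collections import Counter
from statistics import median


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def loso_evaluate(features, labels, subject_ids, fit, predict):
    """Train on all other subjects, test on the held-out one; return (median, per-fold scores)."""
    scores = []
    for _, train, test in leave_one_subject_out(subject_ids):
        model = fit([features[i] for i in train], [labels[i] for i in train])
        preds = predict(model, [features[i] for i in test])
        scores.append(accuracy([labels[i] for i in test], preds))
    return median(scores), scores


# Hypothetical placeholder model: always predicts the majority label seen in training.
def majority_fit(X, y):
    return Counter(y).most_common(1)[0][0]


def majority_predict(model, X):
    return [model] * len(X)
```

Because no trials from the test subject are seen during training, each fold's score estimates calibration-free performance on a new user.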

EEG-based Classification of Drivers' Attention using Convolutional Neural Network

Aug 23, 2021

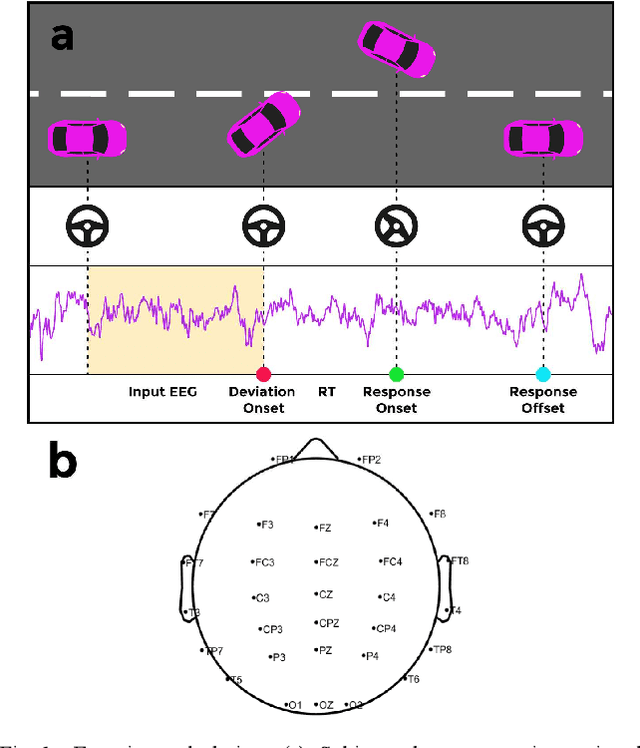

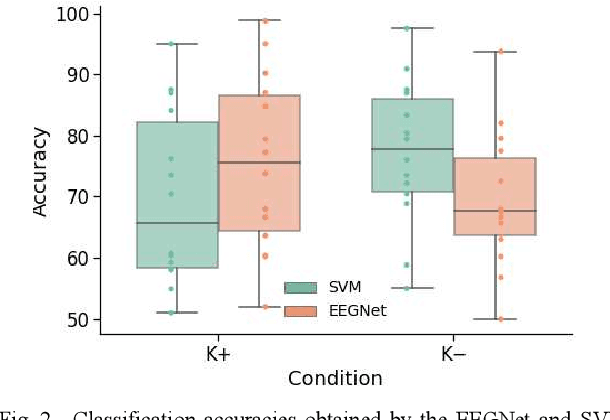

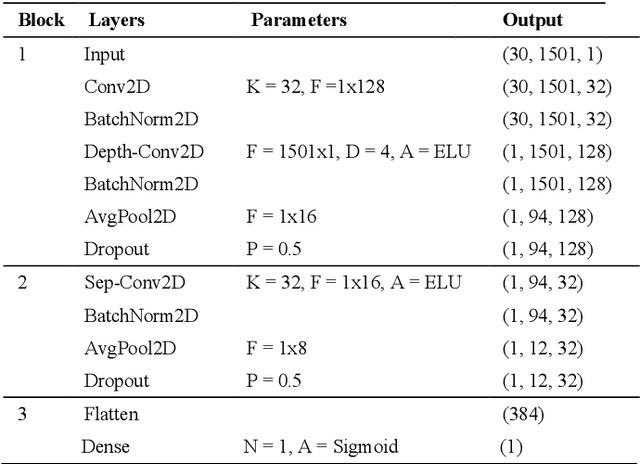

Abstract: Accurate detection of a driver's attention state can help develop assistive technologies that respond to unexpected hazards in real time and therefore improve road safety. This study compares the performance of several attention classifiers trained on participants' brain activity. Participants performed a driving task in an immersive simulator where the car randomly deviated from the cruising lane. They had to correct the deviation, and their response time was considered an indicator of attention level. Participants repeated the task in two sessions: in one session they received kinesthetic feedback, and in the other they received no feedback. Using their EEG signals, we trained three attention classifiers: a support vector machine (SVM) using EEG spectral band powers, and a Convolutional Neural Network (CNN) using either spectral features or the raw EEG data. Our results indicated that the CNN model trained on raw EEG data obtained under kinesthetic feedback achieved the highest accuracy (89%). While using a participant's own brain activity to train the model resulted in the best performance, inter-subject transfer learning still achieved high accuracy (75%), showing promise for calibration-free Brain-Computer Interface (BCI) systems. Our findings show that CNNs and raw EEG signals can be employed for effective training of a passive BCI for real-time attention classification.
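
The abstract treats response time to the lane deviation as an indicator of attention but does not say how response times were turned into class labels. One plausible scheme, shown below purely as an assumption, is a per-participant median split. Faster-than-median responses are labelled attentive and slower ones inattentive. The function names are hypothetical.

```python
from statistics import median


def label_attention(response_times):
    """Binary attention labels from one participant's lane-deviation response times.

    Assumption (not from the study): a median split where responses at or below
    the participant's median are labelled attentive (1) and slower ones
    inattentive (0).
    """
    cutoff = median(response_times)
    return [1 if rt <= cutoff else 0 for rt in response_times]


def label_all(rts_by_participant):
    """Apply the split separately to each participant's response times."""
    return {pid: label_attention(rts) for pid, rts in rts_by_participant.items()}
```

Splitting per participant rather than with one global cutoff keeps the classes roughly balanced for each person. It also avoids labelling a habitually slow driver as inattentive on every trial.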

Robot-Assisted Mindfulness Practice: Analysis of Neurophysiological Responses and Affective State Change

Aug 12, 2020

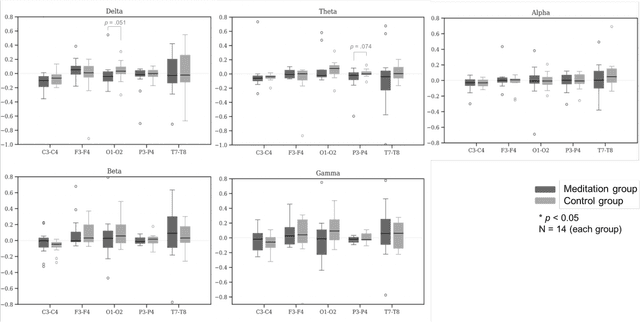

Abstract: Mindfulness is the state of paying attention to the present moment on purpose, and meditation is the technique to attain this state. This study aims to develop a robot assistant that facilitates mindfulness training by means of a Brain-Computer Interface (BCI) system. To achieve this goal, we collected EEG signals from two groups of subjects engaging in a meditative vs. non-meditative human-robot interaction (HRI) and evaluated cerebral hemispheric asymmetry, which is recognized as a well-defined indicator of emotional states. Moreover, using self-reported affective states, we sought to explain asymmetry changes based on pre- and post-experiment mood alterations. We found that, unlike in earlier meditation studies, frontocentral activations in the alpha and theta frequency bands were not influenced by robot-guided mindfulness practice. However, the Meditation group showed significantly greater right-sided activity in the occipital gamma band, which is attributed to increased sensory awareness and open monitoring. In addition, there was a significant main effect of Time on participants' self-reported affect, indicating an improved mood after interaction with the robot regardless of the interaction type. Our results suggest that EEG responses during robot-guided meditation hold promise for real-time detection and neurofeedback of the mindful state to the user. However, the neurophysiological changes experienced may differ based on the meditation practice and the tools used. This study is the first to report EEG changes during mindfulness practice with a robot. We believe that our findings, derived from an ecologically valid setting, can be used in the development of future BCI systems that are integrated with social robots for health applications.
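
Hemispheric asymmetry is commonly quantified as a log-ratio of band power at homologous left/right electrodes. The sketch below assumes the ln(right) − ln(left) convention widely used in frontal alpha asymmetry research. The electrode pairs and function names are illustrative and are not taken from the study.

```python
import math

# Homologous (left, right) electrode pairs from the 10-20 system. The choice of
# pairs is illustrative, not the study's montage.
PAIRS = {"frontal": ("F3", "F4"), "central": ("C3", "C4"), "occipital": ("O1", "O2")}


def asymmetry_index(power_left, power_right):
    """Hemispheric asymmetry as ln(right band power) - ln(left band power).

    Positive values mean more band power over the right hemisphere. How power
    maps to 'activity' depends on the band: alpha power is usually read as
    inversely related to cortical activity, gamma power as directly related.
    """
    if power_left <= 0 or power_right <= 0:
        raise ValueError("band powers must be positive")
    return math.log(power_right) - math.log(power_left)


def regional_asymmetry(channel_power, pairs=PAIRS):
    """Compute the asymmetry index for each region from per-channel band power."""
    return {
        region: asymmetry_index(channel_power[left], channel_power[right])
        for region, (left, right) in pairs.items()
    }
```

Under this convention, a positive occipital gamma index would correspond to greater right-sided gamma activity.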

Prediction of Human Empathy based on EEG Cortical Asymmetry

May 06, 2020

Abstract: Humans constantly interact with digital devices that disregard their feelings. However, the synergy between human and technology can be strengthened if the technology is able to distinguish and react to human emotions. Models that rely on unconscious indications of human emotions, such as (neuro)physiological signals, hold promise for personalizing feedback and adapting the interaction. The current study adopted a predictive approach to studying human emotional processing based on brain activity. More specifically, we investigated whether self-reported human empathy can be predicted from EEG cortical asymmetry in different areas of the brain. Different types of predictive models, i.e., multiple linear regression as well as binary and multiclass classification, were evaluated. Results showed that lateralization of brain oscillations at specific frequency bands is an important predictor of self-reported empathy scores. Additionally, strong classification performance was found during the resting state, which suggests that emotional stimulation is not required for accurate prediction of empathy, as a personality trait, from EEG data. Our findings not only contribute to the general understanding of the mechanisms of empathy, but also offer a better grasp of the advantages of applying a predictive approach compared to hypothesis-driven studies in neuropsychological research. More importantly, our results could be employed in the development of brain-computer interfaces that assist people with difficulties in expressing or recognizing emotions.
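
The multiple linear regression analysis can be sketched as ordinary least squares, with cortical asymmetry indices as predictors and self-reported empathy scores as the target. Solving the normal equations by hand keeps the example dependency-free. This is a simplification, and the function names and toy data are hypothetical. A real analysis would typically use a statistics package that also reports significance and fit statistics.

```python
def fit_ols(X, y):
    """Ordinary least squares with an intercept, solved via the normal equations.

    X: list of feature rows (e.g. asymmetry indices per band/region).
    y: list of target values (e.g. self-reported empathy scores).
    Returns [intercept, coef_1, ..., coef_p].
    """
    rows = [[1.0] + [float(v) for v in row] for row in X]  # prepend intercept column
    p = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(p)]
        + [sum(r[i] * t for r, t in zip(rows, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda i: abs(A[i][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("features are collinear; X^T X is singular")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    # The left block is now diagonal, so each coefficient is a single division.
    return [A[i][p] / A[i][i] for i in range(p)]


def predict_ols(beta, X):
    """Predicted targets for feature rows X given [intercept, coefs...]."""
    return [beta[0] + sum(b * x for b, x in zip(beta[1:], row)) for row in X]
```

With toy data generated exactly as y = 2 + 3·x₁ − x₂, `fit_ols` recovers coefficients close to [2, 3, −1].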
