M. Voetberg

Self-Driving Telescopes: Autonomous Scheduling of Astronomical Observation Campaigns with Offline Reinforcement Learning

Nov 29, 2023

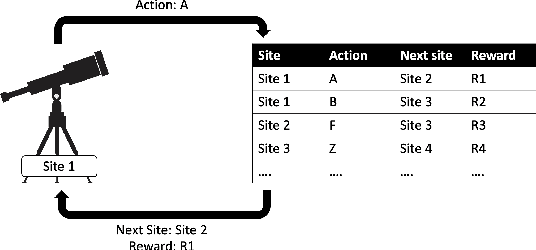

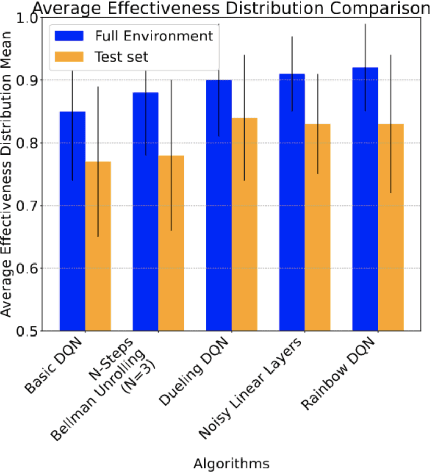

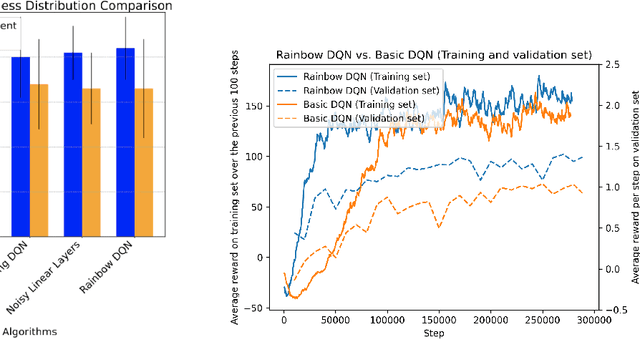

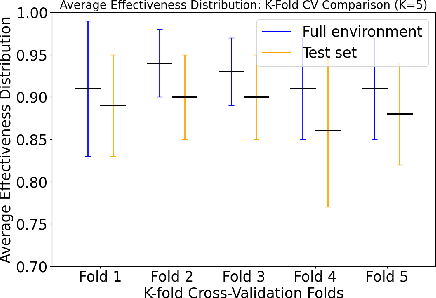

Abstract:Modern astronomical experiments are designed to achieve multiple scientific goals, from studies of galaxy evolution to cosmic acceleration. These goals require data of many different classes of night-sky objects, each of which has a particular set of observational needs. These observational needs are typically in strong competition with one another. This poses a challenging multi-objective optimization problem that remains unsolved. The effectiveness of Reinforcement Learning (RL) as a valuable paradigm for training autonomous systems has been well-demonstrated, and it may provide the basis for self-driving telescopes capable of optimizing the scheduling for astronomy campaigns. Simulated datasets containing examples of interactions between a telescope and a discrete set of sky locations on the celestial sphere can be used to train an RL model to sequentially gather data from these several locations to maximize a cumulative reward as a measure of the quality of the data gathered. We use simulated data to test and compare multiple implementations of a Deep Q-Network (DQN) for the task of optimizing the schedule of observations from the Stone Edge Observatory (SEO). We combine multiple improvements on the DQN and adjustments to the dataset, showing that DQNs can achieve an average reward of 87%+-6% of the maximum achievable reward in each state on the test set. This is the first comparison of offline RL algorithms for a particular astronomical challenge and the first open-source framework for performing such a comparison and assessment task.

WavPool: A New Block for Deep Neural Networks

Jun 14, 2023

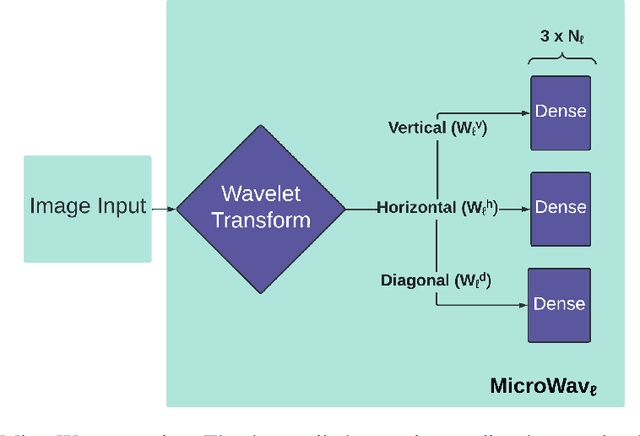

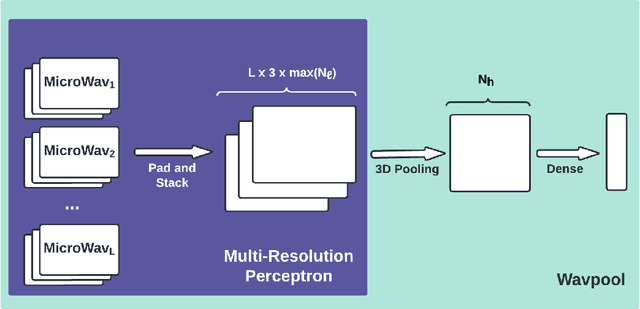

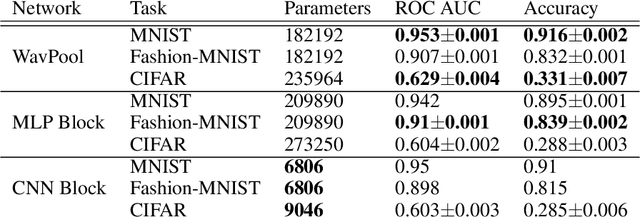

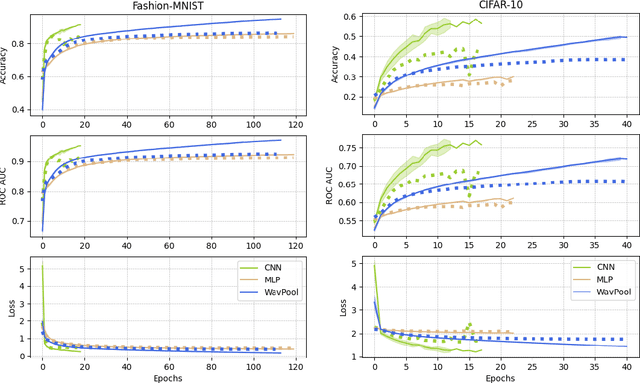

Abstract:Modern deep neural networks comprise many operational layers, such as dense or convolutional layers, which are often collected into blocks. In this work, we introduce a new, wavelet-transform-based network architecture that we call the multi-resolution perceptron: by adding a pooling layer, we create a new network block, the WavPool. The first step of the multi-resolution perceptron is transforming the data into its multi-resolution decomposition form by convolving the input data with filters of fixed coefficients but increasing size. Following image processing techniques, we are able to make scale and spatial information simultaneously accessible to the network without increasing the size of the data vector. WavPool outperforms a similar multilayer perceptron while using fewer parameters, and outperforms a comparable convolutional neural network by ~ 10% on relative accuracy on CIFAR-10.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge