M. Sohaib Alam

Quantum Logic Gate Synthesis as a Markov Decision Process

Dec 27, 2019

Abstract: Reinforcement learning has witnessed recent applications to a variety of tasks in quantum programming. The underlying assumption is that those tasks could be modeled as Markov Decision Processes (MDPs). Here, we investigate the feasibility of this assumption by exploring its consequences for two of the simplest tasks in quantum programming: state preparation and gate compilation. By forming discrete MDPs, focusing exclusively on the single-qubit case, we solve for the optimal policy exactly through policy iteration. We find optimal paths that correspond to the shortest possible sequence of gates to prepare a state, or compile a gate, up to some target accuracy. As an example, we find sequences of H and T gates with length as small as 11 producing ~99% fidelity for states of the form (HT)^{n} |0> with values as large as n=10^{10}. This work provides strong evidence that reinforcement learning can be used for optimal state preparation and gate compilation for larger qubit spaces.
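To make the fidelity figure in the abstract concrete, the following is a minimal sketch of how the overlap between a state prepared by a short H/T sequence and a target of the form (HT)^{n} |0> could be evaluated. It is an illustration under stated assumptions, not the paper's method: the helper names (`apply`, `run`, `fidelity`, `ht_power`) are hypothetical, the target is built by direct repeated application (practical only for small n, not n=10^{10}), and fidelity is taken to be the usual pure-state overlap |<psi|phi>|^2.

```python
import cmath
import math

# Single-qubit gates as 2x2 nested tuples of complex numbers.
S = 1 / math.sqrt(2)
H = ((S, S), (S, -S))
T = ((1, 0), (0, cmath.exp(1j * math.pi / 4)))
GATES = {"H": H, "T": T}


def apply(gate, state):
    """Multiply a 2x2 gate into a 2-component state vector."""
    (a, b), (c, d) = gate
    x, y = state
    return (a * x + b * y, c * x + d * y)


def run(sequence, state=(1 + 0j, 0j)):
    """Apply the gates named in `sequence` to `state`, leftmost character first.

    The default initial state is |0>.
    """
    for name in sequence:
        state = apply(GATES[name], state)
    return state


def fidelity(psi, phi):
    """Assumed metric: |<psi|phi>|^2 for normalized pure states."""
    overlap = psi[0].conjugate() * phi[0] + psi[1].conjugate() * phi[1]
    return abs(overlap) ** 2


def ht_power(n):
    """Target state (HT)^n |0> by brute force (hypothetical helper, small n only).

    The operator HT applies T first and then H, hence the string "TH".
    """
    return run("TH" * n)
```

A candidate sequence such as `run("THTH")` can then be scored against a target with `fidelity(run("THTH"), ht_power(5))`. A search or policy-iteration procedure over gate sequences would use a score of this kind as its accuracy criterion.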

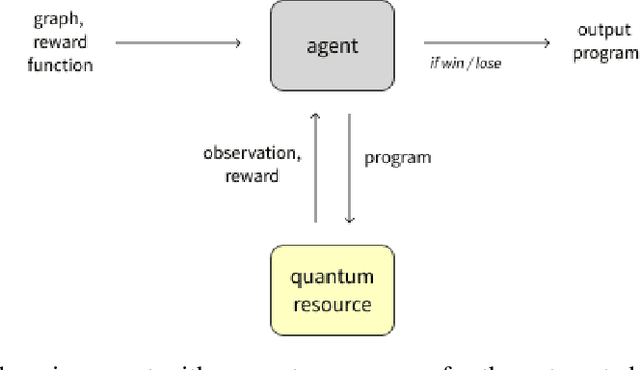

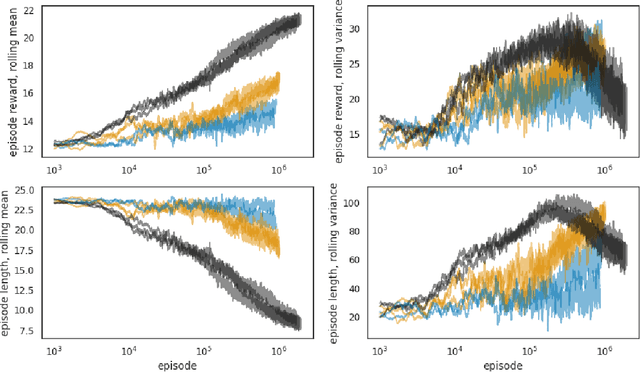

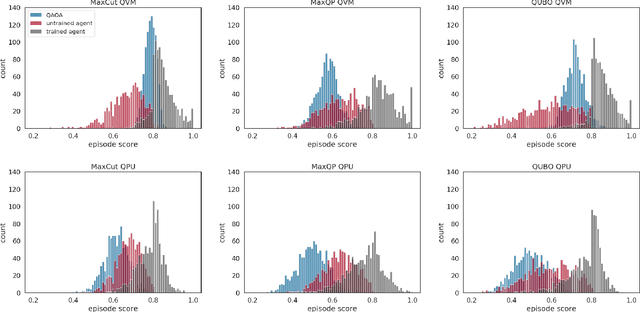

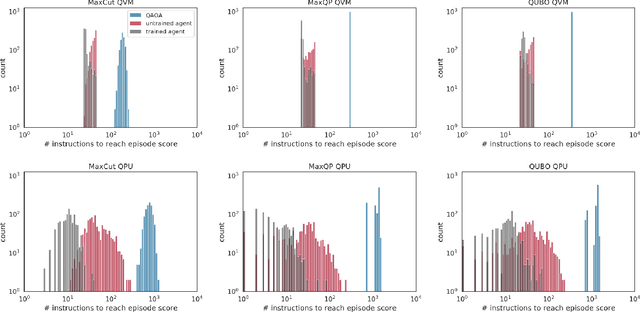

Automated quantum programming via reinforcement learning for combinatorial optimization

Aug 21, 2019

Abstract: We develop a general method for incentive-based programming of hybrid quantum-classical computing systems using reinforcement learning, and apply this to solve combinatorial optimization problems on both simulated and real gate-based quantum computers. Relative to a set of randomly generated problem instances, agents trained through reinforcement learning techniques are capable of producing short quantum programs which generate high quality solutions on both types of quantum resources. We observe generalization to problems outside of the training set, as well as generalization from the simulated quantum resource to the physical quantum resource.
