Keong Hun Choi

Improved Image Classification with Token Fusion

Aug 19, 2022

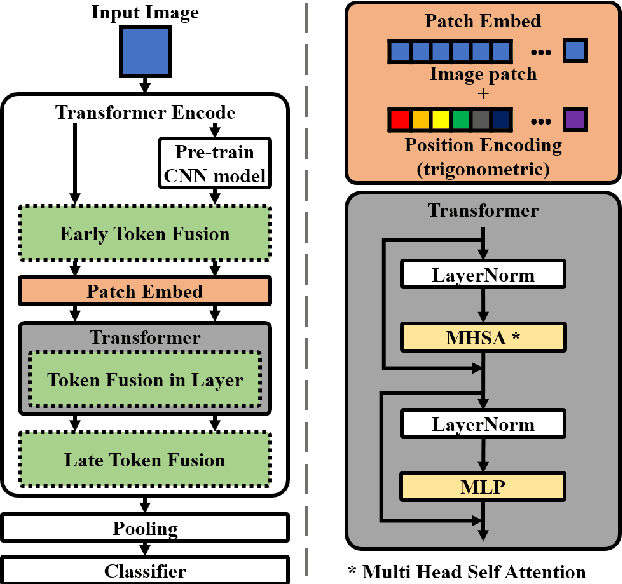

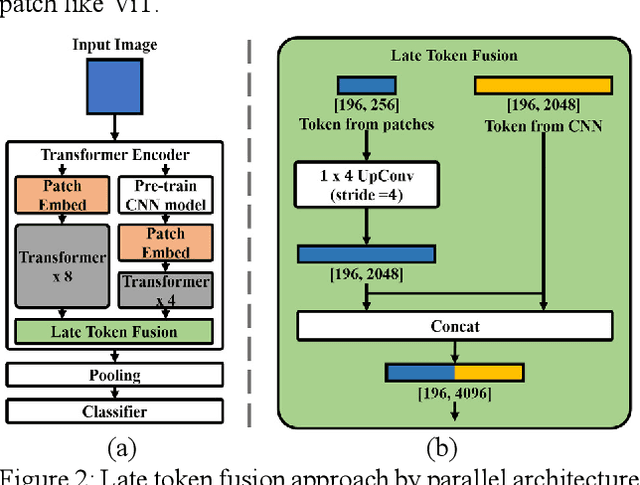

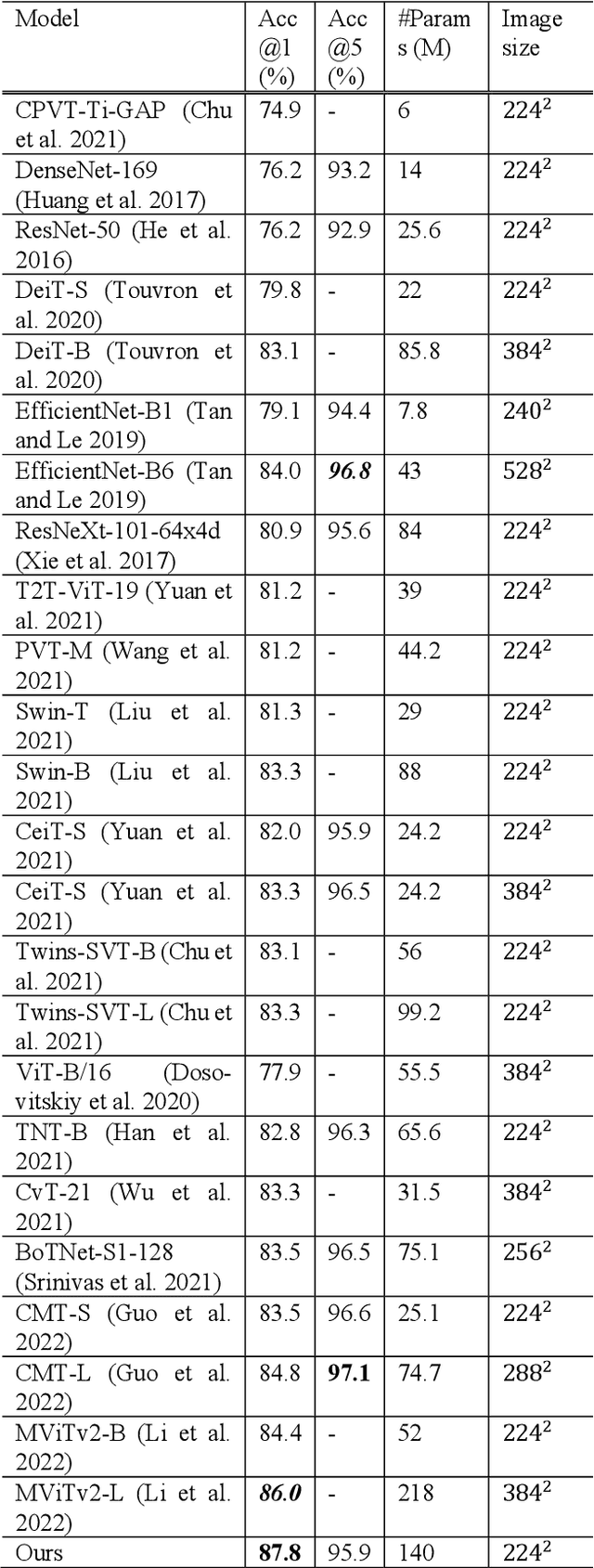

Abstract: In this paper, we propose a method that fuses CNN and transformer structures to improve image classification performance. A CNN extracts information about local areas of an image well, but is limited in extracting global information. A transformer, on the other hand, is comparatively strong at extracting global information, but requires a large amount of memory to extract local feature values. In the proposed method, the image is converted into a feature map by a CNN, and each pixel of the feature map is treated as a token. At the same time, the image is divided into patches, which are treated as tokens in the manner of a transformer, and the two sets of tokens are fused. To fuse tokens with these two different characteristics, we propose three methods: (1) late token fusion with a parallel structure, (2) early token fusion, and (3) layer-by-layer token fusion. In experiments on ImageNet-1k, the proposed method shows the best classification performance.
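The two token types described in the abstract can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the helper names (`patch_tokens`, `feature_map_tokens`, `mean_projection`, `project`, `fuse_by_concatenation`) are hypothetical, the feature map is supplied by hand rather than computed by a real CNN, and fusing by concatenating the two token sequences after projecting them to a shared width is an assumption, since the abstract does not state which fusion operator is used.

```python
from typing import List

Token = List[float]


def patch_tokens(image: List[List[float]], patch: int) -> List[Token]:
    """Split an H x W image into non-overlapping patch x patch blocks.

    Each flattened block becomes one token (transformer-style tokens).
    """
    height, width = len(image), len(image[0])
    tokens = []
    for top in range(0, height - patch + 1, patch):
        for left in range(0, width - patch + 1, patch):
            tokens.append([image[top + dy][left + dx]
                           for dy in range(patch) for dx in range(patch)])
    return tokens


def feature_map_tokens(feature_map: List[List[List[float]]]) -> List[Token]:
    """Treat each spatial position of a C x H x W feature map as a C-dim token."""
    channels = len(feature_map)
    height, width = len(feature_map[0]), len(feature_map[0][0])
    return [[feature_map[c][y][x] for c in range(channels)]
            for y in range(height) for x in range(width)]


def mean_projection(d_in: int, d_out: int) -> List[List[float]]:
    """Placeholder d_in x d_out weights (assumption); a real model learns these."""
    return [[1.0 / d_in] * d_out for _ in range(d_in)]


def project(tokens: List[Token], weights: List[List[float]]) -> List[Token]:
    """Linear projection of every token to a shared embedding width."""
    d_out = len(weights[0])
    return [[sum(t[i] * weights[i][j] for i in range(len(t)))
             for j in range(d_out)] for t in tokens]


def fuse_by_concatenation(a: List[Token], b: List[Token]) -> List[Token]:
    """Assumed fusion operator: join the two token sequences into one."""
    return a + b


# Toy 4 x 4 single-channel image and a hand-written 2 x 2 x 2 "CNN" feature map.
image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
feature_map = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]

embed_dim = 3
p_tok = patch_tokens(image, patch=2)        # 4 tokens, each of width 4
c_tok = feature_map_tokens(feature_map)     # 4 tokens, each of width 2
fused = fuse_by_concatenation(
    project(c_tok, mean_projection(2, embed_dim)),
    project(p_tok, mean_projection(4, embed_dim)),
)
print(len(fused), len(fused[0]))
```

In this sketch the fused sequence would be passed to transformer layers. Where that concatenation happens (before the first layer, after two parallel branches, or at every layer) is what distinguishes the three fusion variants named in the abstract.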
