Justice Amoh

An Optimized Recurrent Unit for Ultra-Low-Power Keyword Spotting

Feb 13, 2019

Abstract: There is growing interest in running neural networks on sensors, wearables and internet-of-things (IoT) devices. However, the computational demands of neural networks make them difficult to deploy on resource-constrained edge devices. To meet this need, our work introduces a new recurrent unit architecture that is specifically adapted for on-device, low-power acoustic event detection (AED). The proposed architecture is based on the gated recurrent unit (GRU) but features optimizations that make it implementable on ultra-low-power microcontrollers such as the Arm Cortex M0+. Our new architecture, the Embedded Gated Recurrent Unit (eGRU), is demonstrated to be highly efficient and suitable for short-duration AED and keyword spotting tasks. A single eGRU cell is 60x faster and 10x smaller than a GRU cell. Despite these optimizations, eGRU compares well with GRU across tasks of varying complexity. The practicality of eGRU is investigated in a wearable acoustic event detection application. An eGRU model is implemented and tested on the Atmel ATSAMD21E18 processor, which is based on the Arm Cortex M0+. This Arm M0+ implementation of the eGRU model compares favorably with a full-precision GRU running on a workstation: the embedded eGRU model achieves a classification accuracy of 95.3%, only 2% lower than that of the full-precision GRU.
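The abstract does not spell out which optimizations eGRU makes, so the sketch below only illustrates the baseline it is compared against: one time step of a standard, full-precision GRU cell in plain Python (no NumPy). It follows the common formulation with an update gate z, a reset gate r and a candidate state n, applying the reset gate to the previous state before the recurrent matrix and using h' = z·h + (1 − z)·n, as in Cho et al.'s original GRU (some libraries apply the reset gate after the matrix product instead). The function names `gru_step` and `gru_param_count` and the parameter-dictionary layout are hypothetical and are not code from the paper. The parameter count shows where a GRU's memory cost comes from: each of the three gate paths carries its own input and recurrent weight matrices.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x: Vector, h: Vector, params: Dict[str, list]) -> Vector:
    """One time step of a standard (full-precision) GRU cell.

    Hypothetical layout: params maps "W_g" (hidden x input), "U_g"
    (hidden x hidden) and "b_g" (hidden) for g in {"z", "r", "n"}.
    """
    def affine(g: str, hin: Vector) -> Vector:
        wx = matvec(params["W_" + g], x)
        uh = matvec(params["U_" + g], hin)
        return [a + b + c for a, b, c in zip(wx, uh, params["b_" + g])]

    z = [sigmoid(v) for v in affine("z", h)]      # update gate
    r = [sigmoid(v) for v in affine("r", h)]      # reset gate
    rh = [ri * hi for ri, hi in zip(r, h)]        # reset-scaled previous state
    n = [math.tanh(v) for v in affine("n", rh)]   # candidate state
    # Blend previous state and candidate: h' = z*h + (1 - z)*n
    return [zi * hi + (1.0 - zi) * ni for zi, hi, ni in zip(z, h, n)]


def gru_param_count(input_size: int, hidden_size: int) -> int:
    """Weights plus biases: three gate paths, each with W, U and b."""
    return 3 * (hidden_size * input_size + hidden_size * hidden_size + hidden_size)


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


# Usage: with all-zero weights, z = r = 0.5 and n = tanh(0) = 0, so h' = 0.5 * h.
H, I = 2, 3
params: Dict[str, list] = {}
for g in ("z", "r", "n"):
    params["W_" + g] = zeros(H, I)
    params["U_" + g] = zeros(H, H)
    params["b_" + g] = [0.0] * H
h_next = gru_step([1.0, 2.0, 3.0], [1.0, -2.0], params)
print(h_next)                   # [0.5, -1.0]
print(gru_param_count(16, 32))  # 4704
```

The pure-list implementation is deliberately naive; it makes each multiply-accumulate explicit, which is the work a microcontroller implementation has to budget for.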

Dual Objective Approach Using A Convolutional Neural Network for Magnetic Resonance Elastography

Dec 02, 2018

Abstract: Traditionally, nonlinear inversion, direct inversion, or wave estimation methods have been used to reconstruct images from magnetic resonance elastography (MRE) displacement data. In this work, we propose a convolutional neural network architecture that maps MRE displacement data directly to elastograms, circumventing the costly and computationally intensive classical approaches. In addition to the mean squared error (MSE) reconstruction objective, we introduce a secondary loss, inspired by MRE mechanical models, for training the network. Our network is shown to generate MRE images that compare well with those produced by the nonlinear inversion method.
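The abstract describes the training objective only as an MSE reconstruction term plus a secondary loss "inspired by the MRE mechanical models", without giving its form. The sketch below assumes, purely for illustration, a 1-D time-harmonic Helmholtz residual, μ u'' + ρω² u = 0, the locally homogeneous wave model commonly used in direct inversion. The functions `mse`, `helmholtz_residual` and `dual_objective_loss`, the finite-difference discretization and the `weight` parameter are all hypothetical and are not taken from the paper.

```python
import math
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between predicted and reference stiffness maps."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def helmholtz_residual(mu: Sequence[float], u: Sequence[float],
                       dx: float, rho: float, omega: float) -> float:
    """Hypothetical secondary loss: mean squared residual of the 1-D
    time-harmonic Helmholtz equation mu * u'' + rho * omega**2 * u = 0,
    using a central finite difference at interior points. Assumes the
    stiffness is locally homogeneous (the derivative of mu is ignored)."""
    res = []
    for i in range(1, len(u) - 1):
        lap = (u[i - 1] - 2.0 * u[i] + u[i + 1]) / dx ** 2
        res.append(mu[i] * lap + rho * omega ** 2 * u[i])
    return sum(r * r for r in res) / len(res)


def dual_objective_loss(mu_pred, mu_ref, u, dx, rho, omega, weight=1.0):
    """MSE reconstruction term plus a weighted physics-based term."""
    return mse(mu_pred, mu_ref) + weight * helmholtz_residual(mu_pred, u, dx, rho, omega)


# Usage: u = sin(k x) with k = omega * sqrt(rho / mu) satisfies the model
# for mu = 1.0, so its residual is tiny; a wrong stiffness (mu = 4.0) is penalized.
n, dx = 629, 0.01
u = [math.sin(i * dx) for i in range(n)]
good = helmholtz_residual([1.0] * n, u, dx, rho=1.0, omega=1.0)
bad = helmholtz_residual([4.0] * n, u, dx, rho=1.0, omega=1.0)
print(good < 1e-8, bad > 1.0)  # True True
```

The point of such a term is that it can penalize a stiffness map that is inconsistent with the measured displacement field even where the MSE target is uninformative.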

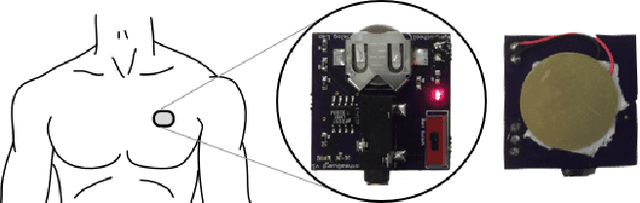

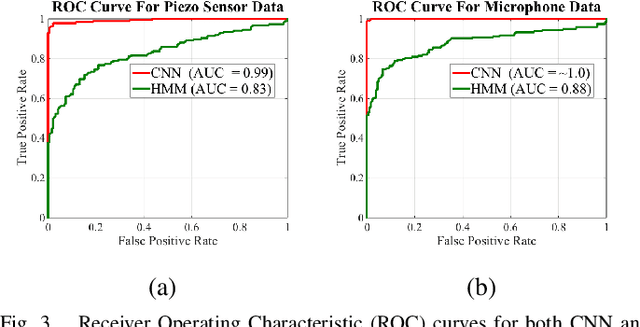

DeepCough: A Deep Convolutional Neural Network in A Wearable Cough Detection System

Sep 08, 2015

Abstract: In this paper, we present a system that employs a wearable acoustic sensor and a deep convolutional neural network for detecting coughs. We evaluate the performance of our system on 14 healthy volunteers and compare it to that of other cough detection systems that have been reported in the literature. Experimental results show that our system achieves a classification sensitivity of 95.1% and a specificity of 99.5%.
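Sensitivity and specificity are the standard detection metrics reported above. As a reminder of how they are defined, the short sketch below computes sensitivity (true positive rate) and specificity (true negative rate) from confusion-matrix counts. The function names are hypothetical, and the counts are made up for illustration; they are not data from the paper.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Fraction of true coughs that the detector flags (true positive rate)."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Fraction of non-cough events correctly rejected (true negative rate)."""
    return tn / (tn + fp)


# Hypothetical counts for illustration only (not results from the paper).
tp, fn, tn, fp = 190, 10, 995, 5
sens = sensitivity(tp, fn)  # 190 / 200 = 0.95
spec = specificity(tn, fp)  # 995 / 1000 = 0.995
print(f"sensitivity={sens:.1%} specificity={spec:.1%}")
```

Reporting both numbers matters for cough detection because non-cough audio typically vastly outnumbers coughs, so a high specificity is needed to keep false alarms rare.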
