Judith S. Heinisch

Angry or Climbing Stairs? Towards Physiological Emotion Recognition in the Wild

Nov 12, 2018

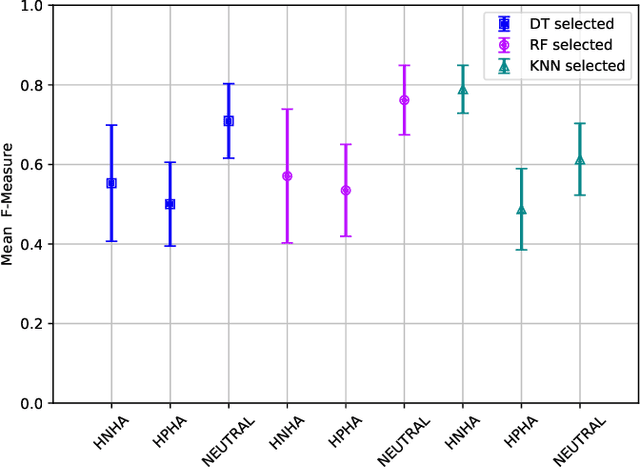

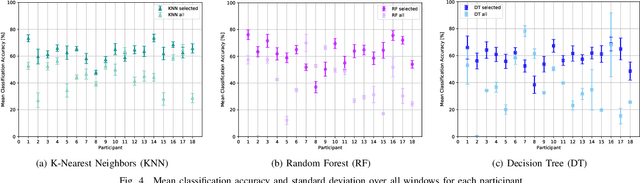

Abstract: Inferring emotions from physiological signals has gained much traction in recent years. Physiological responses to emotions, however, are commonly overlaid and distorted by the effects of physical activity, which poses a challenge for emotion recognition in the wild. In this paper, we address this challenge by investigating new features and machine-learning models for emotion recognition that are insensitive to interference from physical activity. We recorded physiological signals from 18 participants who were exposed to emotions before and while performing physical activities, in order to assess the performance of such activity-insensitive emotion recognition models. We trained models on the least strenuous physical activity (sitting) and tested them on the remaining, more strenuous activities. For three different emotion categories, we achieve classification accuracies ranging from 47.88% to 73.35% for selected feature sets and per participant. Furthermore, we investigate the performance across all participants and for each activity individually. In this regard, we achieve similar results, between 55.17% and 67.41%, indicating the viability of emotion recognition models that are not influenced by single physical activities.
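The evaluation protocol described in the abstract (train on sitting, test on each remaining activity) can be sketched roughly as follows. This is only an illustration under stated assumptions, not the authors' pipeline: the record layout, the function names, the activity names other than sitting, the emotion labels `a` and `b`, the nearest-centroid classifier, and the toy feature values are all hypothetical stand-ins, since the abstract does not specify the features or models used.

```python
from collections import defaultdict


def split_by_activity(samples, train_activity="sitting"):
    """Split records into a training set (one activity) and test sets (all others)."""
    train = [s for s in samples if s["activity"] == train_activity]
    test = defaultdict(list)
    for s in samples:
        if s["activity"] != train_activity:
            test[s["activity"]].append(s)
    return train, dict(test)


def fit_centroids(train):
    """Stand-in classifier (assumption): mean feature vector per emotion label."""
    sums, counts = {}, defaultdict(int)
    for s in train:
        acc = sums.setdefault(s["emotion"], [0.0] * len(s["features"]))
        for i, value in enumerate(s["features"]):
            acc[i] += value
        counts[s["emotion"]] += 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def dist(label):
        return sum((a - b) ** 2 for a, b in zip(centroids[label], features))
    return min(centroids, key=dist)


def accuracy_per_activity(samples, train_activity="sitting"):
    """Train on one activity, then report accuracy separately for every other one."""
    train, test = split_by_activity(samples, train_activity)
    centroids = fit_centroids(train)
    return {
        activity: sum(predict(centroids, s["features"]) == s["emotion"] for s in group)
        / len(group)
        for activity, group in test.items()
    }


# Made-up toy records, purely to exercise the functions; not data from the study.
TOY_SAMPLES = [
    {"activity": "sitting", "emotion": "a", "features": [0.0, 0.0]},
    {"activity": "sitting", "emotion": "a", "features": [0.2, 0.0]},
    {"activity": "sitting", "emotion": "b", "features": [1.0, 1.0]},
    {"activity": "sitting", "emotion": "b", "features": [1.2, 1.0]},
    {"activity": "walking", "emotion": "a", "features": [0.1, 0.1]},
    {"activity": "walking", "emotion": "b", "features": [1.1, 0.9]},
    {"activity": "stairs", "emotion": "a", "features": [0.9, 0.9]},
    {"activity": "stairs", "emotion": "b", "features": [1.0, 1.1]},
]

if __name__ == "__main__":
    print(accuracy_per_activity(TOY_SAMPLES))
```

Reporting accuracy per held-out activity, rather than one pooled number, makes it visible when a single activity shifts the features enough to degrade a model trained only on sitting.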
