Joshua Springer

Toward Appearance-based Autonomous Landing Site Identification for Multirotor Drones in Unstructured Environments

Dec 20, 2024

Abstract: A remaining challenge in multirotor drone flight is the autonomous identification of viable landing sites in unstructured environments. One approach to solve this problem is to create lightweight, appearance-based terrain classifiers that can segment a drone's RGB images into safe and unsafe regions. However, such classifiers require data sets of images and masks that can be prohibitively expensive to create. We propose a pipeline to automatically generate synthetic data sets to train these classifiers, leveraging modern drones' ability to survey terrain automatically and the ability to automatically calculate landing safety masks from terrain models derived from such surveys. We then train a U-Net on the synthetic data set, test it on real-world data for validation, and demonstrate it on our drone platform in real-time.
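The abstract does not say how the landing safety masks are calculated from the terrain models. As a rough illustration only, one plausible criterion is a slope threshold on an elevation grid. In the sketch below, the function name `safety_mask`, the grid layout (a list of rows of elevations in metres), and the parameters `cell_size_m` and `max_slope_deg` are all assumptions made for this example, not details from the paper.

```python
import math


def safety_mask(elevation, cell_size_m, max_slope_deg):
    """Mark each grid cell True where the local slope is at most max_slope_deg.

    Hypothetical criterion: the paper's real mask calculation is not
    described in the abstract. Assumes a grid of at least 2 x 2 cells.
    """
    rows, cols = len(elevation), len(elevation[0])
    mask = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Central differences in the interior, one-sided at the borders.
            r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
            c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
            dz_dy = (elevation[r1][c] - elevation[r0][c]) / ((r1 - r0) * cell_size_m)
            dz_dx = (elevation[r][c1] - elevation[r][c0]) / ((c1 - c0) * cell_size_m)
            # Slope angle from the magnitude of the elevation gradient.
            slope_deg = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
            mask[r][c] = slope_deg <= max_slope_deg
    return mask
```

For a grid such as `[[0, 0, 5], [0, 0, 5], [0, 0, 5]]` with 1 m cells and a 10 degree limit, each row of the mask comes out as `[True, False, False]`: only the column farthest from the 5 m step counts as safe. A practical mask would likely also consider roughness and obstacle clearance.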

Lowering Barriers to Entry for Fully-Integrated Custom Payloads on a DJI Matrice

May 10, 2024

Abstract: Consumer-grade drones have become effective multimedia collection tools, spring-boarded by rapid development in embedded CPUs, GPUs, and cameras. They are best known for their ability to cheaply collect high-quality aerial video, 3D terrain scans, infrared imagery, etc., with respect to manned aircraft. However, users can also create and attach custom sensors, actuators, or computers, so the drone can collect different data, generate composite data, or interact intelligently with its environment, e.g., autonomously changing behavior to land in a safe way, or choosing further data collection sites. Unfortunately, developing custom payloads is prohibitively difficult for many researchers outside of engineering. We provide guidelines for how to create a sophisticated computational payload that integrates a Raspberry Pi 5 into a DJI Matrice 350. The payload fits into the Matrice's case like a typical DJI payload (but is much cheaper), is easy to build and expand (3D-printed), uses the drone's power and telemetry, can control the drone and its other payloads, can access the drone's sensors and camera feeds, and can process video and stream it to the operator via the controller in real time. We describe the difficulties and proprietary quirks we encountered, how we worked through them, and provide setup scripts and a known-working configuration for others to use.

A Precision Drone Landing System using Visual and IR Fiducial Markers and a Multi-Payload Camera

Mar 06, 2024

Abstract: We propose a method for autonomous precision drone landing with fiducial markers and a gimbal-mounted, multi-payload camera with wide-angle, zoom, and IR sensors. The method has minimal data requirements; it depends primarily on the direction from the drone to the landing pad, enabling it to switch dynamically between the camera's different sensors and zoom factors, and minimizing auxiliary sensor requirements. It eliminates the need for data such as altitude above ground level, straight-line distance to the landing pad, fiducial marker size, and 6 DoF marker pose (of which the orientation is problematic). We leverage the zoom and wide-angle cameras, as well as visual April Tag fiducial markers to conduct successful precision landings from much longer distances than in previous work (168m horizontal distance, 102m altitude). We use two types of April Tags in the IR spectrum - active and passive - for precision landing both at daytime and nighttime, instead of simple IR beacons used in most previous work. The active IR landing pad is heated; the novel, passive one is unpowered, at ambient temperature, and depends on its high reflectivity and an IR differential between the ground and the sky. Finally, we propose a high-level control policy to manage initial search for the landing pad and subsequent searches if it is lost - not addressed in previous work. The method demonstrates successful landings with the landing skids at least touching the landing pad, achieving an average error of 0.19m. It also demonstrates successful recovery and landing when the landing pad is temporarily obscured.
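To illustrate why a direction-only approach needs neither marker size nor distance, the sketch below converts the pixel position of a detected marker center into per-axis angular offsets from the camera's optical axis, using a plain pinhole model. It is not the paper's implementation: the function name `bearing_to_marker`, the square-pixel assumption, and the parameter `hfov_deg` (horizontal field of view, which would change with the zoom factor) are all assumptions for this example.

```python
import math


def bearing_to_marker(u, v, width, height, hfov_deg):
    """Return (yaw, pitch) offsets in degrees from the optical axis to pixel (u, v).

    Hypothetical helper: assumes an ideal pinhole camera with square pixels
    and the principal point at the image center.
    """
    # Focal length in pixels, derived from the horizontal field of view.
    focal_px = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    # Positive yaw means the marker is to the right of the image center.
    yaw = math.degrees(math.atan((u - width / 2) / focal_px))
    # Image rows grow downward, so flip the sign: positive pitch is upward.
    pitch = math.degrees(math.atan((height / 2 - v) / focal_px))
    return yaw, pitch
```

Only the marker's pixel position and the current field of view enter the calculation, which is consistent with the abstract's point that the same logic can serve different sensors and zoom factors. These offsets could, for example, feed a gimbal controller that keeps the marker centered.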

Autonomous Drone Landing: Marked Landing Pads and Solidified Lava Flows

Feb 01, 2023

Abstract: Landing is the most challenging and risky aspect of multirotor drone flight, and only simple landing methods exist for autonomous drones. We explore methods for autonomous drone landing in two scenarios. In the first scenario, we examine methods for landing on known landing pads using fiducial markers and a gimbal-mounted monocular camera. This method has potential in drone applications where a drone must land more accurately than GPS can provide (e.g., package delivery in an urban canyon). We expand on previous methods by actuating the drone's camera to track the marker over time, and we address the complexities of pose estimation caused by fiducial marker orientation ambiguity. In the second scenario, and in collaboration with the RAVEN project, we explore methods for landing on solidified lava flows in Iceland, which serves as an analog environment for Mars and provides insight into the effectiveness of drone-rover exploration teams. Our drone uses a depth camera to visualize the terrain, and we are developing methods to analyze the terrain data for viable landing sites in real time with minimal sensors and external infrastructure requirements, so that the solution does not heavily influence the drone's behavior, mission structure, or operational environments.

Autonomous Multirotor Landing on Landing Pads and Lava Flows

Nov 11, 2022

Abstract: Landing is a challenging part of autonomous drone flight and a great research opportunity. This PhD proposes to improve on fiducial autonomous landing algorithms by making them more flexible. Further, it leverages its location, Iceland, to develop a method for landing on lava flows in cooperation with analog Mars exploration missions taking place in Iceland now - and potentially for future Mars landings.

Autonomous Precision Drone Landing with Fiducial Markers and a Gimbal-Mounted Camera for Active Tracking

Jun 16, 2022

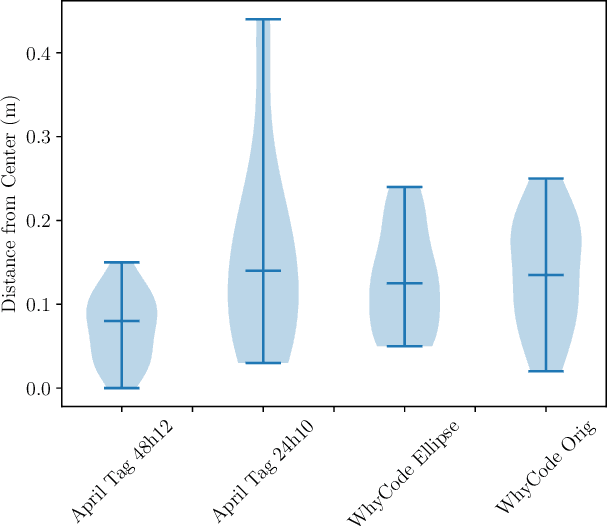

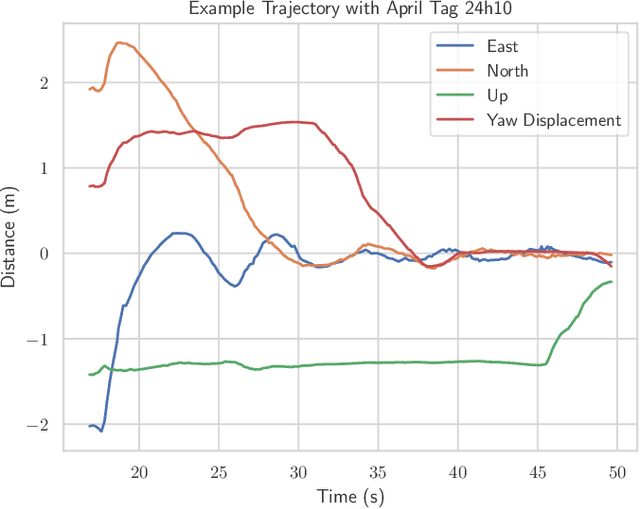

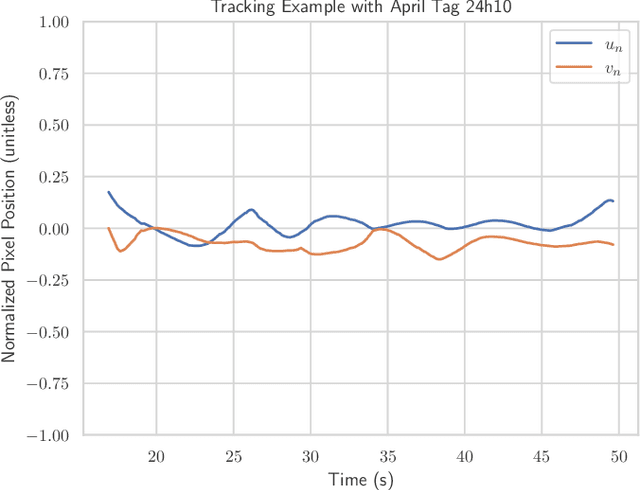

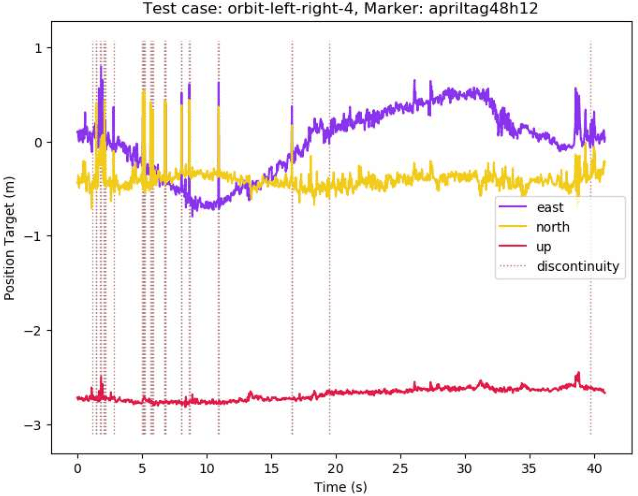

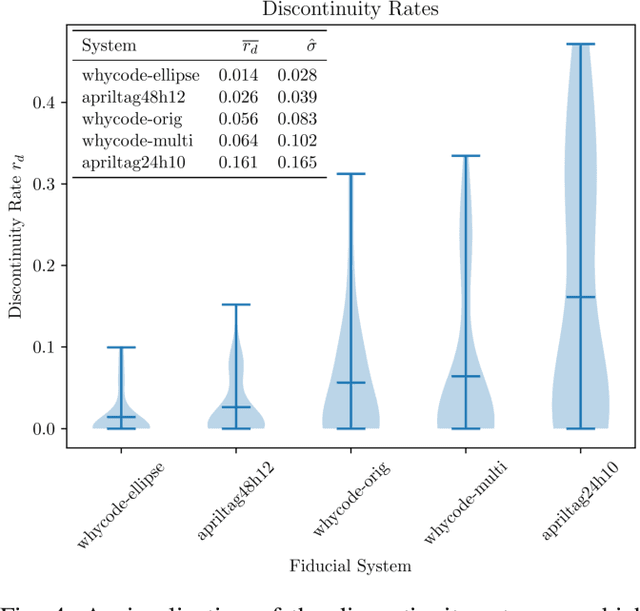

Abstract: Precision landing is a remaining challenge in autonomous drone flight, with no widespread solution. Fiducial markers provide a computationally cheap way for a drone to locate a landing pad and autonomously execute precision landings. However, most work in this field has depended on fixed, downward-facing cameras which restrict the ability of the drone to detect the marker. We present a method of autonomous landing that uses a gimbal-mounted camera to quickly search for the landing pad by simply spinning in place while tilting the camera up and down, and to continually aim the camera at the landing pad during approach and landing. This method demonstrates successful search, tracking, and landing with 4 of 5 tested fiducial systems on a physical drone with no human intervention. Per fiducial system, we present the number of successful and unsuccessful landings, and the distributions of the distances from the drone to the center of the landing pad after each successful landing, with a statistical comparison among the systems. We also show representative examples of flight trajectories, marker tracking performance, and control outputs for each channel during the landing. Finally, we discuss qualitative strengths and weaknesses underlying the performance of each system.

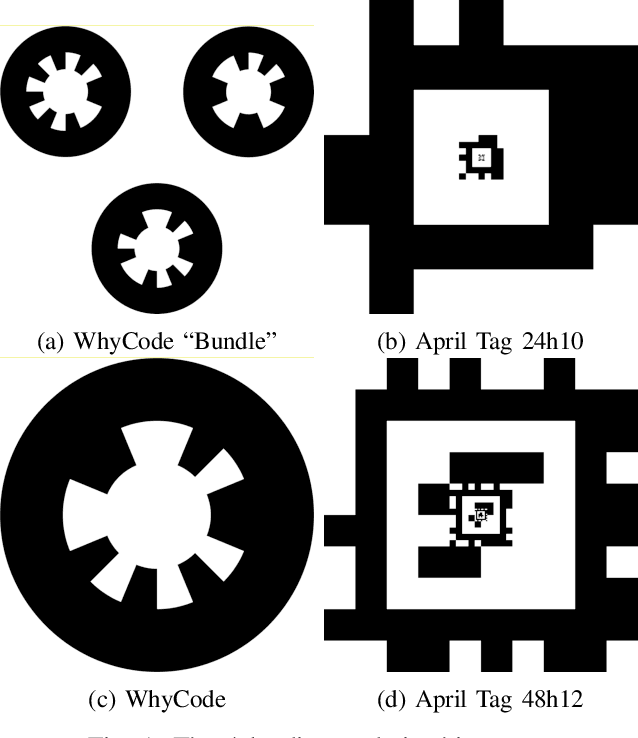

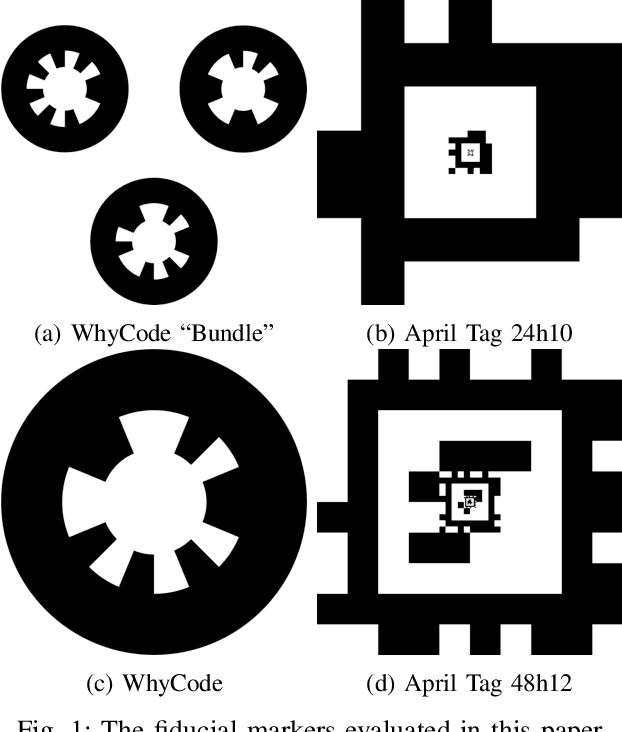

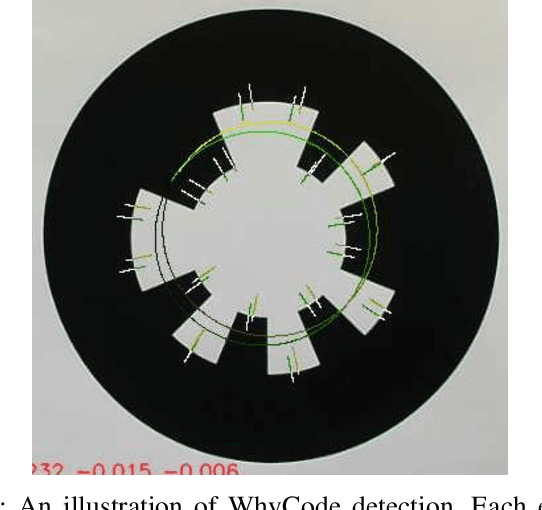

Evaluation of April Tag and WhyCode Fiducial Systems for Autonomous Precision Drone Landing with a Gimbal-Mounted Camera

Mar 18, 2022

Abstract: Fiducial markers provide a computationally cheap way for drones to determine their location with respect to a landing pad and execute precision landings. However, most existing work in this field uses a fixed, downward-facing camera that does not leverage the common gimbal-mounted camera setup found on many drones. Such rigid systems cannot easily track detected markers, and may lose sight of the markers in non-ideal conditions (e.g., wind gusts). This paper evaluates April Tag and WhyCode fiducial systems for drone landing with a gimbal-mounted, monocular camera, with the advantage that the drone system can track the marker over time. However, since the orientation of the camera changes, we must know the orientation of the marker, which is unreliable in monocular fiducial systems. Additionally, the system must be fast. We propose 2 methods for mitigating the orientation ambiguity of WhyCode, and 1 method for increasing the runtime detection rate of April Tag. We evaluate our 3 systems against 2 default systems in terms of marker orientation ambiguity and detection rate. We test rates of marker detection in a ROS framework on a Raspberry Pi 4, and we rank the systems in terms of their performance. Our first WhyCode variant significantly reduces orientation ambiguity with an insignificant reduction in detection rate. Our second WhyCode variant does not show significantly different orientation ambiguity from the default WhyCode system, but does provide additional functionality in terms of multi-marker WhyCode bundle arrangements. Our April Tag variant does not show performance improvements on a Raspberry Pi 4.
