Jolan Dupont

A Masked Semi-Supervised Learning Approach for Otago Micro Labels Recognition

May 22, 2024

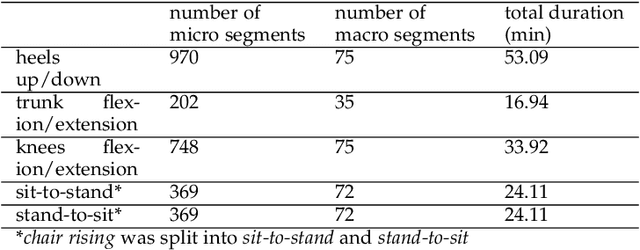

Abstract: The Otago Exercise Program (OEP) serves as a vital rehabilitation initiative for older adults, aiming to enhance their strength and balance, and consequently prevent falls. While Human Activity Recognition (HAR) systems have been widely employed in recognizing the activities of individuals, existing systems focus on the duration of macro activities (i.e. a sequence of repetitions of the same exercise) and, in the case of OEP, do not discern micro activities (i.e. the individual repetitions of the exercises). This study presents a novel semi-supervised machine learning approach aimed at bridging this gap in recognizing the micro activities of OEP. To manage the limited dataset size, our model utilizes a Transformer encoder for feature extraction, and the extracted features are subsequently classified by a Temporal Convolutional Network (TCN). Simultaneously, the Transformer encoder is employed for masked unsupervised learning to reconstruct input signals. Results indicate that the masked unsupervised learning task enhances the performance of the supervised learning (classification) task, as evidenced by f1-scores surpassing the clinically applicable threshold of 0.8. From the micro activities, two clinically relevant outcomes emerge: counting the number of repetitions of each exercise and calculating the velocity during chair rising. These outcomes enable the automatic monitoring of exercise intensity and difficulty in the daily lives of older adults.
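As an illustration of the repetition-counting outcome, the sketch below counts repetitions from a frame-wise micro-label sequence. It is not the authors' implementation: the label encoding (0 for background, positive integers for exercises), the assumption that consecutive repetitions are separated by at least one background frame, and the function name `count_repetitions` are all hypothetical.

```python
from collections import Counter
from itertools import groupby


def count_repetitions(frame_labels, background=0):
    """Count contiguous runs of each non-background label.

    Assumption (not from the abstract): every repetition appears as one
    contiguous run of the same label, separated from the next repetition
    by at least one background frame.
    """
    counts = Counter()
    for label, _run in groupby(frame_labels):
        if label != background:
            counts[label] += 1
    return dict(counts)


# Hypothetical frame-wise predictions: 1 and 2 stand for two exercises.
labels = [0, 1, 1, 0, 1, 1, 1, 0, 2, 2, 0, 2, 0]
print(count_repetitions(labels))
```

In practice, short spurious runs would probably need to be filtered out before counting; that step is omitted here.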

DS-MS-TCN: Otago Exercises Recognition with a Dual-Scale Multi-Stage Temporal Convolutional Network

Feb 07, 2024

Abstract: The Otago Exercise Program (OEP) represents a crucial rehabilitation initiative tailored for older adults, aimed at enhancing balance and strength. Despite previous efforts utilizing wearable sensors for OEP recognition, existing studies have exhibited limitations in terms of accuracy and robustness. This study addresses these limitations by employing a single waist-mounted Inertial Measurement Unit (IMU) to recognize OEP exercises among community-dwelling older adults in their daily lives. A cohort of 36 older adults participated in laboratory settings, supplemented by an additional 7 older adults recruited for at-home assessments. The study proposes a Dual-Scale Multi-Stage Temporal Convolutional Network (DS-MS-TCN) designed for two-level sequence-to-sequence classification, combining both levels in one loss function. In the first stage, the model focuses on recognizing each repetition of the exercises (micro labels). Subsequent stages extend the recognition to encompass the complete range of exercises (macro labels). The DS-MS-TCN model surpasses existing state-of-the-art deep learning models, achieving f1-scores exceeding 80% and Intersection over Union (IoU) f1-scores surpassing 60% for all four exercises evaluated. Notably, the model outperforms the prior study utilizing the sliding window technique, eliminating the need for post-processing stages and window size tuning. To our knowledge, we are the first to present a novel perspective on enhancing Human Activity Recognition (HAR) systems through the recognition of each repetition of activities.
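The abstract does not define its IoU f1-score. The sketch below shows one common segment-wise definition, in which a predicted segment counts as a true positive when it overlaps an unmatched ground-truth segment of the same label with IoU at or above a threshold. The threshold of 0.5, the background label 0, and the function names are assumptions for illustration, not details taken from the paper.

```python
def segments(labels, background=0):
    """Return (label, start, end) for each contiguous non-background run."""
    segs = []
    start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            if labels[start] != background:
                segs.append((labels[start], start, i))
            start = i
    return segs


def iou_f1(pred, truth, threshold=0.5, background=0):
    """Segment-wise F1 where a match requires IoU >= threshold."""
    p_segs = segments(pred, background)
    t_segs = segments(truth, background)
    matched = set()  # ground-truth segments already claimed
    tp = 0
    for label, ps, pe in p_segs:
        best_iou, best_j = 0.0, None
        for j, (tl, ts, te) in enumerate(t_segs):
            if tl != label or j in matched:
                continue
            inter = max(0, min(pe, te) - max(ps, ts))
            union = max(pe, te) - min(ps, ts)
            iou = inter / union
            if iou > best_iou:
                best_iou, best_j = iou, j
        if best_j is not None and best_iou >= threshold:
            tp += 1
            matched.add(best_j)
    fp = len(p_segs) - tp
    fn = len(t_segs) - tp
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


truth = [0, 1, 1, 1, 1, 0, 0, 2, 2, 2, 2, 0]
pred = [0, 0, 1, 1, 1, 0, 0, 2, 0, 0, 0, 0]
print(iou_f1(pred, truth))  # 0.5: one segment matched, one missed
```

Unlike a frame-wise f1-score, this measure penalizes fragmented or badly localized segments even when most frames are labeled correctly.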

Otago Exercises Monitoring for Older Adults by a Single IMU and Hierarchical Machine Learning Models

Oct 05, 2023

Abstract: The Otago Exercise Program (OEP) is a rehabilitation program for older adults to improve frailty, sarcopenia, and balance. Accurate monitoring of patient involvement in OEP is challenging, as self-reports (diaries) are often unreliable. With the development of wearable sensors, Human Activity Recognition (HAR) systems using wearable sensors have revolutionized healthcare. However, their usage for OEP still shows limited performance. The objective of this study is to build an unobtrusive and accurate system to monitor OEP for older adults. Data were collected from older adults wearing a single waist-mounted Inertial Measurement Unit (IMU). Two datasets were collected, one in a laboratory setting and one at the homes of the patients. A hierarchical system is proposed with two stages: 1) using a deep learning model to recognize whether the patients are performing OEP or activities of daily life (ADLs) using a 10-minute sliding window; 2) based on stage 1, using a 6-second sliding window to recognize the OEP sub-classes performed. The results showed that in stage 1, OEP could be recognized with window-wise f1-scores over 0.95 and Intersection-over-Union (IoU) f1-scores over 0.85 for both datasets. In stage 2, for the home scenario, four activities could be recognized with f1-scores over 0.8: ankle plantarflexors, abdominal muscles, knee bends, and sit-to-stand. The results showed the potential of monitoring compliance with OEP using a single IMU in daily life. In addition, some OEP sub-classes can be recognized for further analysis.
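The sliding-window segmentation used in both stages can be sketched as follows. Only the window lengths (10 minutes and 6 seconds) come from the abstract; the 100 Hz sampling rate, the 50% overlap, and the function name `sliding_windows` are assumptions chosen for illustration.

```python
def sliding_windows(n_samples, sample_rate_hz, window_s, overlap=0.5):
    """Return (start, end) sample indices of fixed-length windows.

    `overlap` is the fraction shared by consecutive windows. Trailing
    samples that do not fill a whole window are dropped.
    """
    size = int(round(window_s * sample_rate_hz))
    step = max(1, int(round(size * (1 - overlap))))
    return [(s, s + size) for s in range(0, n_samples - size + 1, step)]


# 30 seconds of hypothetical 100 Hz IMU data, cut into 6-second windows.
windows = sliding_windows(n_samples=3000, sample_rate_hz=100, window_s=6)
print(len(windows), windows[0], windows[-1])
```

Each window would then be passed to a classifier, and the window-wise predictions mapped back onto the timeline for the IoU-based evaluation.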
