Joberto S. B. Martins

Towards Sustainability in 6G Network Slicing with Energy-Saving and Optimization Methods

May 17, 2025

Abstract: The 6G mobile network is the next evolutionary step after 5G, with a prediction of an explosive surge in mobile traffic. It provides ultra-low latency, higher data rates, high device density, and ubiquitous coverage, positively impacting services in various areas. Energy saving is a major concern for new systems in the telecommunications sector because all players are expected to reduce their carbon footprints to contribute to mitigating climate change. Network slicing is a fundamental enabler for 6G/5G mobile networks and various other new systems, such as the Internet of Things (IoT), Internet of Vehicles (IoV), and Industrial IoT (IIoT). However, energy-saving methods embedded in network slicing architectures are still a research gap. This paper discusses how to embed energy-saving methods in network-slicing architectures that are a fundamental enabler for nearly all new innovative systems being deployed worldwide. This paper's main contribution is a proposal to save energy in network slicing. That is achieved by deploying ML-native agents in NS architectures to dynamically orchestrate and optimize resources based on user demands. The SFI2 network slicing reference architecture is the concrete use case scenario in which contrastive learning improves energy saving for resource allocation.

An Intelligent Native Network Slicing Security Architecture Empowered by Federated Learning

Oct 04, 2024

Abstract: Network Slicing (NS) has transformed the landscape of resource sharing in networks, offering flexibility to support services and applications with highly variable requirements in areas such as the next-generation 5G/6G mobile networks (NGMN), vehicular networks, industrial Internet of Things (IoT), and verticals. Although significant research and experimentation have driven the development of network slicing, existing architectures often fall short in intrinsic architectural intelligent security capabilities. This paper proposes an architecture-intelligent security mechanism to improve the NS solutions. We conceived a security-native architecture that deploys intelligent microservices as federated agents based on machine learning, providing intra-slice and architectural operation security for the Slicing Future Internet Infrastructures (SFI2) reference architecture. It is noteworthy that federated learning approaches match the highly distributed modern microservice-based architectures, thus providing a unifying and scalable design choice for NS platforms addressing both service and security. Using ML-Agents and Security Agents, our approach identified Distributed Denial-of-Service (DDoS) and intrusion attacks within the slice using generic and non-intrusive telemetry records, achieving an average accuracy of approximately $95.60\%$ in the network slicing architecture and $99.99\%$ for the deployed slice (intra-slice). This result demonstrates the potential for leveraging architectural operational security and introduces a promising new research direction for network slicing architectures.

* 18 pages, 12 figures, Future Generation Computer Systems (FGCS)

Intelligent Data-Driven Architectural Features Orchestration for Network Slicing

Jan 12, 2024

Abstract: Network slicing is a crucial enabler and a trend for the Next Generation Mobile Network (NGMN) and various other new systems like the Internet of Vehicles (IoV) and Industrial IoT (IIoT). Orchestration and machine learning are key elements with a crucial role in the network-slicing processes since the NS process needs to orchestrate resources and functionalities, and machine learning can potentially optimize the orchestration process. However, existing network-slicing architectures lack the ability to define intelligent approaches to orchestrate features and resources in the slicing process. This paper discusses machine learning-based orchestration of features and capabilities in network slicing architectures. Initially, the slice resource orchestration and allocation in the slicing planning, configuration, commissioning, and operation phases are analyzed. Next, we highlight the need for optimized architectural feature orchestration and recommend using ML-embedded agents, federated learning intrinsic mechanisms for knowledge acquisition, and a data-driven approach embedded in the network slicing architecture. We further develop an architectural features orchestration case embedded in the SFI2 network slicing architecture. An attack prevention security mechanism is developed for the SFI2 architecture using distributed embedded and cooperating ML agents. The case presented illustrates the architectural features orchestration process and its benefits, highlighting its importance for the network slicing process.

Enhancing Network Slicing Architectures with Machine Learning, Security, Sustainability and Experimental Networks Integration

Jul 18, 2023

Abstract: Network Slicing (NS) is an essential technique extensively used in 5G network computing strategies, mobile edge computing, mobile cloud computing, and verticals like the Internet of Vehicles and industrial IoT, among others. NS is foreseen as one of the leading enablers for 6G futuristic and highly demanding applications since it allows the optimization and customization of scarce and disputed resources among dynamic, demanding clients with highly distinct application requirements. Various standardization organizations, like 3GPP's proposal for new generation networks and state-of-the-art 5G/6G research projects, are proposing new NS architectures. However, new NS architectures have to deal with an extensive range of requirements, which inherently results in NS architecture proposals that typically fulfill the needs of specific sets of domains with commonalities. The Slicing Future Internet Infrastructures (SFI2) architecture proposal explores the gap resulting from the diversity of NS architectures' target domains by proposing a new NS reference architecture with a defined focus on integrating experimental networks and enhancing the NS architecture with Machine Learning (ML) native optimizations, energy-efficient slicing, and slicing-tailored security functionalities. The SFI2 architectural main contribution includes the utilization of the slice-as-a-service paradigm for end-to-end orchestration of resources across multi-domain and multi-technology experimental networks. In addition, the SFI2 reference architecture instantiations will enhance the multi-domain and multi-technology integrated experimental network deployment with native ML optimization, energy-efficient aware slicing, and slicing-tailored security functionalities for the practical domain.

* 10 pages, 11 figures

On Modeling Network Slicing Communication Resources with SARSA Optimization

Jan 11, 2023

Abstract: Network slicing is a crucial enabler to support the composition and deployment of virtual network infrastructures required by the dynamic behavior of networks like 5G/6G mobile networks, IoT-aware networks, e-health systems, and industry verticals like the internet of vehicles (IoV) and industry 4.0. The communication slices and their allocated communication resources are essential in slicing architectures for resource orchestration and allocation, virtual network function (VNF) deployment, and slice operation functionalities. The communication slices provide the communications capabilities required to support slice operation, SLA guarantees, and QoS/QoE application requirements. Therefore, this contribution proposes a network slicing conceptual model to formulate the optimization problem related to the sharing of communication resources among communication slices. First, we present a conceptual model of network slicing; we then analytically formulate some aspects of the model and the optimization problem to address. Next, we propose using a SARSA agent to solve the problem and implement a proof-of-concept prototype. Finally, we present and discuss the results obtained.
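For readers unfamiliar with SARSA, the following is a minimal tabular sketch of the on-policy update rule applied to a toy resource-sharing problem. It is not the model or prototype from the paper: the environment (two slices sharing a link of `capacity` units, a reward that penalizes the mismatch between allocation and demand), the function names, and all hyperparameters are assumptions made purely for illustration.

```python
import random
from collections import defaultdict


def epsilon_greedy(q, state, actions, epsilon, rng):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def sarsa_update(q, s, a, reward, s_next, a_next, alpha=0.1, gamma=0.9):
    """One SARSA step: Q(s,a) += alpha * (r + gamma * Q(s',a') - Q(s,a)).

    The target uses the action a_next that the policy actually selected,
    which is what makes SARSA on-policy.
    """
    td_target = reward + gamma * q[(s_next, a_next)]
    q[(s, a)] += alpha * (td_target - q[(s, a)])
    return q[(s, a)]


def train(episodes=200, steps=20, capacity=4, epsilon=0.1, seed=0):
    """Toy setting (hypothetical): the state is slice A's current demand in
    bandwidth units, the action is the number of units granted to slice A,
    and the remaining units go to slice B."""
    rng = random.Random(seed)
    q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
    actions = list(range(capacity + 1))
    for _ in range(episodes):
        state = rng.randint(0, capacity)
        action = epsilon_greedy(q, state, actions, epsilon, rng)
        for _ in range(steps):
            # Assumed reward: penalize over- or under-allocation.
            reward = -abs(action - state)
            next_state = rng.randint(0, capacity)
            next_action = epsilon_greedy(q, next_state, actions, epsilon, rng)
            sarsa_update(q, state, action, reward, next_state, next_action)
            state, action = next_state, next_action
    return q, actions


if __name__ == "__main__":
    q_table, acts = train()
    for demand in acts:
        best = max(acts, key=lambda a: q_table[(demand, a)])
        print(f"demand={demand} -> greedy allocation={best}")
```

A tabular representation is used here only because the toy state and action spaces are tiny; a realistic slicing model would need a richer state description and reward definition.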

Reinforcement Learning Agent Design and Optimization with Bandwidth Allocation Model

Nov 23, 2022

Abstract: Reinforcement learning (RL) is currently used in various real-life applications. RL-based solutions have the potential to generically address problems, including the ones that are difficult to solve with heuristics and meta-heuristics and, in addition, the set of problems and issues where some intelligent or cognitive approach is required. However, reinforcement learning agents are not straightforward to design and raise important design issues. RL agent design issues include the target problem modeling, state-space explosion, the training process, and agent efficiency. Research currently addresses these issues, aiming to foster RL dissemination. A Bandwidth Allocation Model (BAM), in summary, allocates and shares resources with users. There are three basic BAM models and several hybrids that differ in how they allocate and share resources among users. This paper addresses the issue of RL agent design and efficiency. The RL agent's objective is to allocate and share resources among users. The paper investigates how a BAM model can contribute to the RL agent design and efficiency. The AllocTC-Sharing (ATCS) model is analytically described and simulated to evaluate how it mimics the RL agent operation and how the ATCS can offload computational tasks from the RL agent. The essential argument researched is whether algorithms integrated with the RL agent design and operation have the potential to facilitate agent design and optimize its execution. The ATCS analytical model and simulation presented demonstrate that a BAM model offloads agent tasks and assists the agent's design and optimization.

On Evaluating Power Loss with HATSGA Algorithm for Power Network Reconfiguration in the Smart Grid

May 14, 2022

Abstract: This paper presents the power network reconfiguration algorithm HATSGA with an "R" modeling approach and evaluates its behavior in computing new reconfiguration topologies for the power network in the Smart Grid context. The modeling of the power distribution network with the language "R" is used to represent the network and support the computation of distinct algorithm configurations towards the evaluation of new reconfiguration topologies. The HATSGA algorithm adopts a hybrid Tabu Search and Genetic Algorithm strategy and can be configured in different ways to compute network reconfiguration solutions. The evaluation of power loss with HATSGA uses the IEEE 14-Bus topology as the power test scenario. The evaluation of reconfiguration topologies with minimum power loss with HATSGA indicates that an efficient solution can be reached with a feasible computational time. This suggests that HATSGA can potentially be used for computing reconfiguration network topologies and, beyond that, for autonomic self-healing management approaches where a feasible computational time is required.

* 7 pp

Enhanced Pub/Sub Communications for Massive IoT Traffic with SARSA Reinforcement Learning

Jan 03, 2021

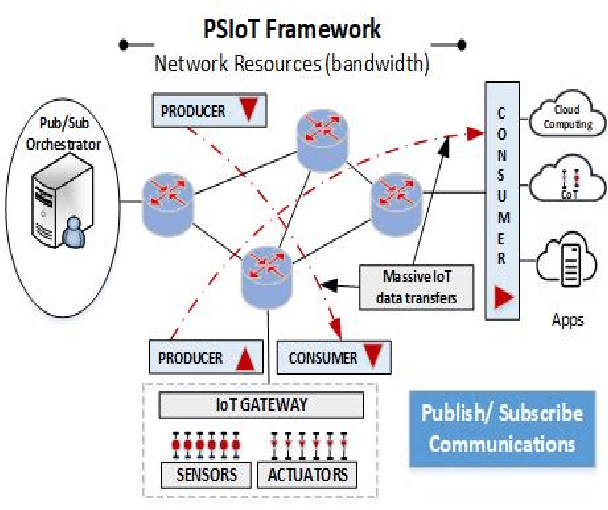

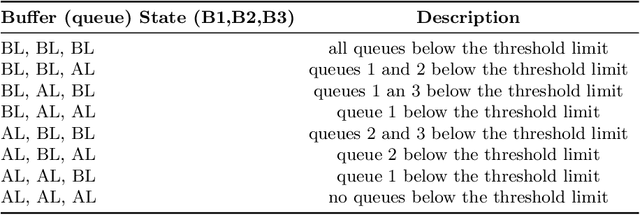

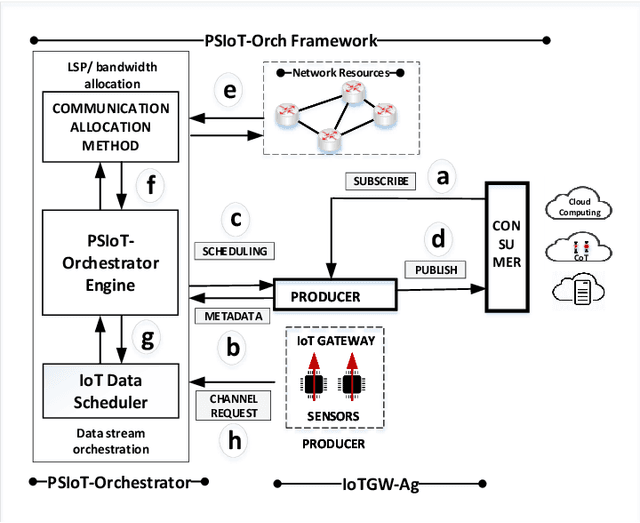

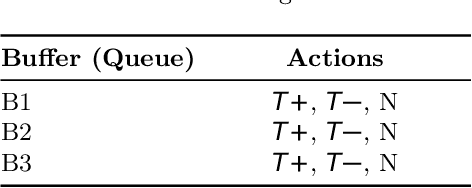

Abstract: Sensors are being extensively deployed and are expected to expand at significant rates in the coming years. They typically generate a large volume of data in Internet of Things (IoT) application areas like smart cities, intelligent traffic systems, smart grid, and e-health. Cloud, edge, and fog computing are potential and competitive strategies for collecting, processing, and distributing IoT data. However, cloud, edge, and fog-based solutions need to tackle the distribution of a high volume of IoT data efficiently through constrained and limited resource network infrastructures. This paper addresses the issue of conveying a massive volume of IoT data through a network with limited communications resources (bandwidth) using a cognitive communications resource allocation based on Reinforcement Learning (RL) with the SARSA algorithm. The proposed network infrastructure (PSIoTRL) uses a Publish/Subscribe architecture to access massive and highly distributed IoT data. It is demonstrated that the PSIoTRL bandwidth allocation for buffer flushing based on SARSA enhances the IoT aggregator buffer occupation and network link utilization. The PSIoTRL dynamically adapts the IoT aggregator traffic flushing according to the Pub/Sub topic's priority and network constraint requirements.

A Methodological Approach to Model CBR-based Systems

Sep 09, 2020

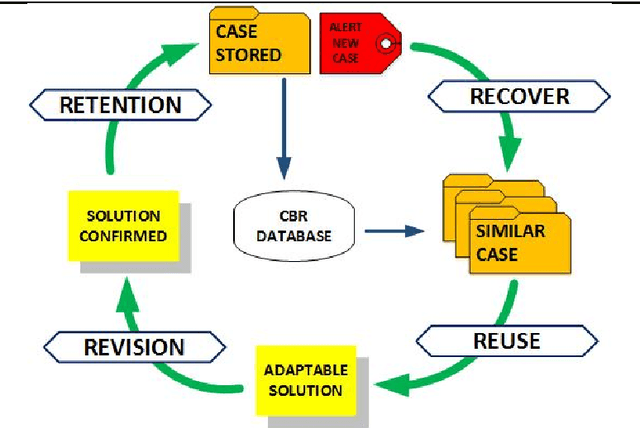

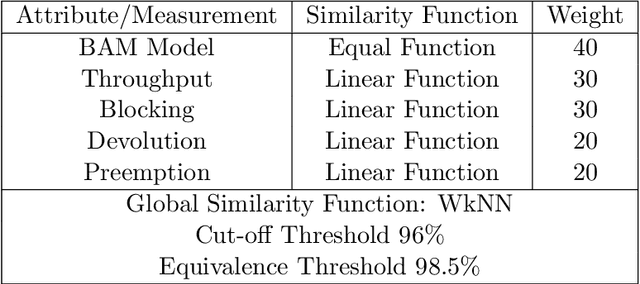

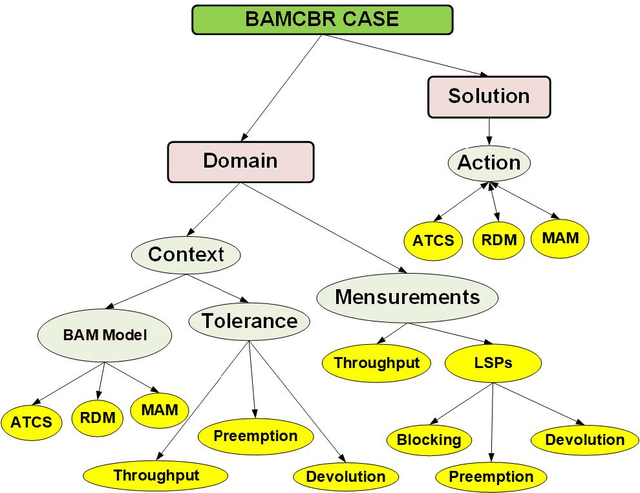

Abstract: Artificial intelligence (AI) has been used in various areas to support system optimization and find solutions where the complexity makes it challenging to use algorithmic and heuristic approaches. Case-based Reasoning (CBR) is an AI technique intensively exploited in domains like management, medicine, design, construction, retail and smart grid. CBR is a technique for problem-solving and captures new knowledge by using past experiences. One of the main CBR deployment challenges is the target system modeling process. This paper presents a straightforward methodological approach to model CBR-based applications using the concepts of abstract and concrete models. Splitting the modeling process into two models facilitates the allocation of expertise between the application domain and the CBR technology. The methodological approach intends to facilitate the CBR modeling process and to foster CBR use in various areas outside computer science.

* pp 1-16, 3 figures

A Electric Network Reconfiguration Strategy with Case-Based Reasoning for the Smart Grid

Jul 11, 2019

Abstract: The complexity, heterogeneity and scale of electrical networks have grown far beyond the limits of exclusively human-based management in the Smart Grid (SG). Accordingly, researchers are considering the use of artificial intelligence and heuristic techniques to create cognitive and autonomic management tools that aim to better assist and enhance SG management processes such as grid reconfiguration. The development of self-healing management approaches towards a cognitive and autonomic distribution power network reconfiguration is a scenario in which scalability and on-the-fly computation are issues. This paper proposes the use of Case-Based Reasoning (CBR) coupled with the HATSGA algorithm for the fast reconfiguration of large distribution power networks. The suitability and the scalability of the CBR-based reconfiguration strategy using the HATSGA algorithm are evaluated. The evaluation indicates that the adopted HATSGA algorithm computes new reconfiguration topologies with a feasible computational time for large networks. The CBR strategy looks for managerially acceptable reconfiguration solutions in the CBR database and, as such, helps reduce the number of reconfiguration computations required using HATSGA. This suggests CBR can be applied with a fast reconfiguration algorithm, resulting in a more efficient, dynamic and cognitive grid recovery strategy.
