Jingyi Cheng

A Short-Term Predict-Then-Cluster Framework for Meal Delivery Services

Jan 11, 2025

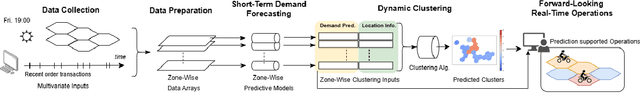

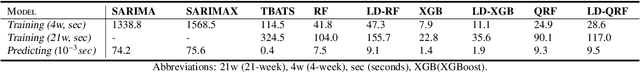

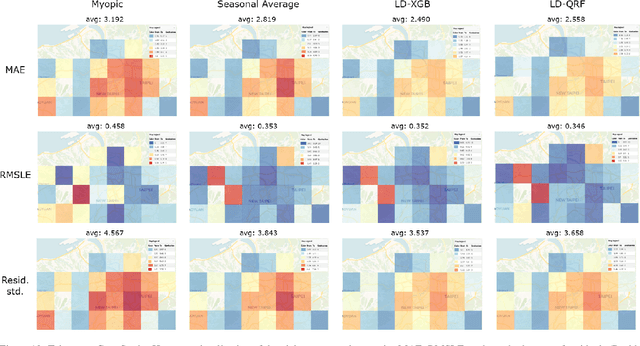

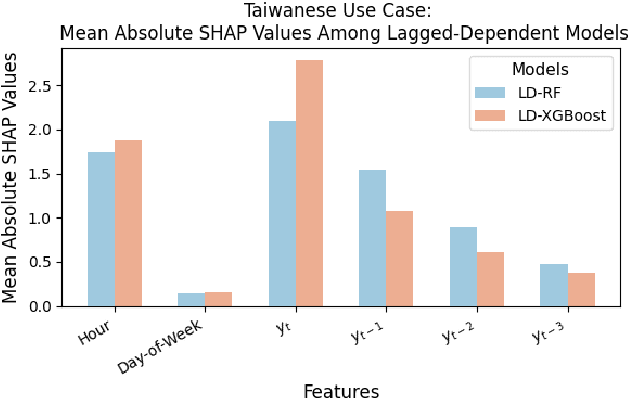

Abstract: Micro-delivery services offer promising solutions for on-demand city logistics, but their success relies on efficient real-time delivery operations and fleet management. On-demand meal delivery platforms seek to optimize real-time operations based on anticipatory insights into citywide demand distributions. To address these needs, this study proposes a short-term predict-then-cluster framework for on-demand meal delivery services. The framework utilizes ensemble-learning methods for point and distributional forecasting with multivariate features, including lagged-dependent inputs to capture demand dynamics. We introduce Constrained K-Means Clustering (CKMC) and Contiguity Constrained Hierarchical Clustering with Iterative Constraint Enforcement (CCHC-ICE) to generate dynamic clusters based on predicted demand and geographical proximity, tailored to user-defined operational constraints. Evaluations of European and Taiwanese case studies demonstrate that the proposed methods outperform traditional time series approaches in both accuracy and computational efficiency. Clustering results demonstrate that the incorporation of distributional predictions effectively addresses demand uncertainties, improving the quality of operational insights. Additionally, a simulation study demonstrates the practical value of short-term demand predictions for proactive strategies, such as idle fleet rebalancing, significantly enhancing delivery efficiency. By addressing demand uncertainties and operational constraints, our predict-then-cluster framework provides actionable insights for optimizing real-time operations. The approach is adaptable to other on-demand platform-based city logistics and passenger mobility services, promoting sustainable and efficient urban operations.

Real-Time Integrated Dispatching and Idle Fleet Steering with Deep Reinforcement Learning for A Meal Delivery Platform

Jan 10, 2025

Abstract: To achieve high service quality and profitability, meal delivery platforms like Uber Eats and Grubhub must strategically operate their fleets to ensure timely deliveries for current orders while mitigating the consequential impacts of suboptimal decisions that lead to courier understaffing in the future. This study addresses the real-time order dispatching and idle courier steering problems for a meal delivery platform by proposing a reinforcement learning (RL)-based strategic dual-control framework. To address the inherent sequential nature of these problems, we model both order dispatching and courier steering as Markov Decision Processes. We obtain strategic policies by training them in a deep reinforcement learning (DRL) framework that takes explicitly predicted demand as part of its input. In our dual-control framework, the dispatching and steering policies are iteratively trained in an integrated manner. These forward-looking policies can be executed in real time and provide decisions while jointly considering the impacts at the local and network levels. To enhance dispatching fairness, we propose convolutional deep Q networks to construct fair courier embeddings. To simultaneously rebalance supply and demand within the service network, we propose utilizing mean-field approximated supply-demand knowledge to reallocate idle couriers at the local level. Utilizing the policies generated by the RL-based strategic dual-control framework, we find that delivery efficiency and the fairness of workload distribution among couriers improve, and that under-supplied conditions are alleviated within the service network. Our study sheds light on designing an RL-based framework to enable forward-looking real-time operations for meal delivery platforms and other on-demand services.
