Jin-Seop Lee

Stabilizing Open-Set Test-Time Adaptation via Primary-Auxiliary Filtering and Knowledge-Integrated Prediction

Aug 26, 2025

Abstract: Deep neural networks demonstrate strong performance under aligned training-test distributions. However, real-world test data often exhibit domain shifts. Test-Time Adaptation (TTA) addresses this challenge by adapting the model to test data during inference. While most TTA studies assume that the training and test data share the same class set (closed-set TTA), real-world scenarios often involve open-set data (open-set TTA), which can degrade closed-set accuracy. A recent study showed that identifying open-set data during adaptation and maximizing its entropy is an effective solution. However, the previous method relies on the source model for filtering, resulting in suboptimal filtering accuracy on domain-shifted test data. In contrast, we found that the adapting model, which learns domain knowledge from noisy test streams, tends to be unstable and leads to error accumulation when used for filtering. To address this problem, we propose Primary-Auxiliary Filtering (PAF), which employs an auxiliary filter to validate data filtered by the primary filter. Furthermore, we propose Knowledge-Integrated Prediction (KIP), which calibrates the outputs of the adapting model, EMA model, and source model to integrate their complementary knowledge for OSTTA. We validate our approach across diverse closed-set and open-set datasets. Our method enhances both closed-set accuracy and open-set discrimination over existing methods. The code is available at https://github.com/powerpowe/PAF-KIP-OSTTA.
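The entropy-based identification of open-set samples mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's PAF or KIP method: it only shows the generic idea of flagging a prediction as likely open-set when its softmax entropy is high. The function names (`prediction_entropy`, `flag_open_set`) and the threshold of half the maximum entropy are assumptions made for illustration.

```python
import math

def softmax(logits):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def prediction_entropy(logits):
    # Shannon entropy (in nats) of the softmax distribution over classes.
    return -sum(p * math.log(p) for p in softmax(logits) if p > 0.0)

def flag_open_set(batch_logits, threshold):
    # Hypothetical filter: high entropy means the model is unsure,
    # which is treated here as a sign of an unknown (open-set) class.
    return [prediction_entropy(l) > threshold for l in batch_logits]

# One confident prediction and one uniform prediction over 3 classes.
batch = [[8.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
# Assumed threshold: half of the maximum possible entropy, log(3).
flags = flag_open_set(batch, threshold=0.5 * math.log(3))
print(flags)
```

In this toy setting the threshold is fixed by hand; the abstract's point is that which model supplies the logits for such a filter (source, adapting, or EMA) strongly affects filtering quality.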

TAG: A Simple Yet Effective Temporal-Aware Approach for Zero-Shot Video Temporal Grounding

Aug 11, 2025

Abstract: Video Temporal Grounding (VTG) aims to extract relevant video segments based on a given natural language query. Recently, zero-shot VTG methods have gained attention by leveraging pretrained vision-language models (VLMs) to localize target moments without additional training. However, existing approaches suffer from semantic fragmentation, where temporally continuous frames sharing the same semantics are split across multiple segments. When segments are fragmented, it becomes difficult to predict an accurate target moment that aligns with the text query. They also rely on skewed similarity distributions for localization, making it difficult to select the optimal segment. Furthermore, they depend heavily on LLMs, which require expensive inference. To address these limitations, we propose TAG, a simple yet effective Temporal-Aware approach for zero-shot video temporal Grounding, which incorporates temporal pooling, temporal coherence clustering, and similarity adjustment. Our proposed method effectively captures the temporal context of videos and addresses distorted similarity distributions without training. Our approach achieves state-of-the-art results on the Charades-STA and ActivityNet Captions benchmark datasets without relying on LLMs. Our code is available at https://github.com/Nuetee/TAG.

DCG-SQL: Enhancing In-Context Learning for Text-to-SQL with Deep Contextual Schema Link Graph

May 26, 2025

Abstract: Text-to-SQL, which translates a natural language question into an SQL query, has advanced with in-context learning of Large Language Models (LLMs). However, existing methods show little improvement over randomly chosen demonstrations and suffer significant performance drops when smaller LLMs (e.g., Llama 3.1-8B) are used. This indicates that these methods rely heavily on the intrinsic capabilities of hyper-scaled LLMs, rather than effectively retrieving useful demonstrations. In this paper, we propose a novel approach for effectively retrieving demonstrations and generating SQL queries. We construct a Deep Contextual Schema Link Graph, which contains key information and semantic relationships between a question and its database schema items. This graph-based structure enables effective representation of Text-to-SQL samples and retrieval of useful demonstrations for in-context learning. Experimental results on the Spider benchmark demonstrate the effectiveness of our approach, showing consistent improvements in SQL generation performance and efficiency across both hyper-scaled LLMs and small LLMs. Our code will be released.

DomCLP: Domain-wise Contrastive Learning with Prototype Mixup for Unsupervised Domain Generalization

Dec 12, 2024

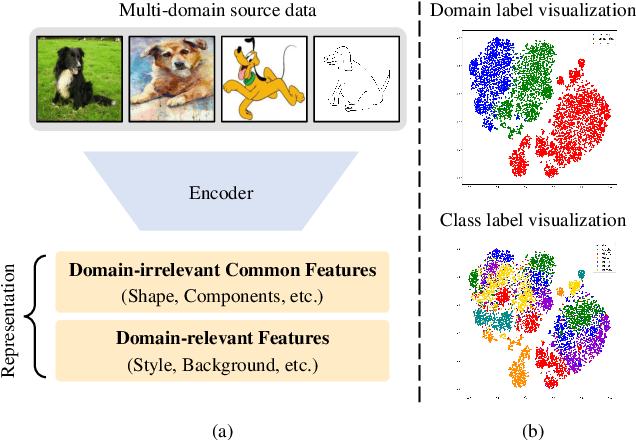

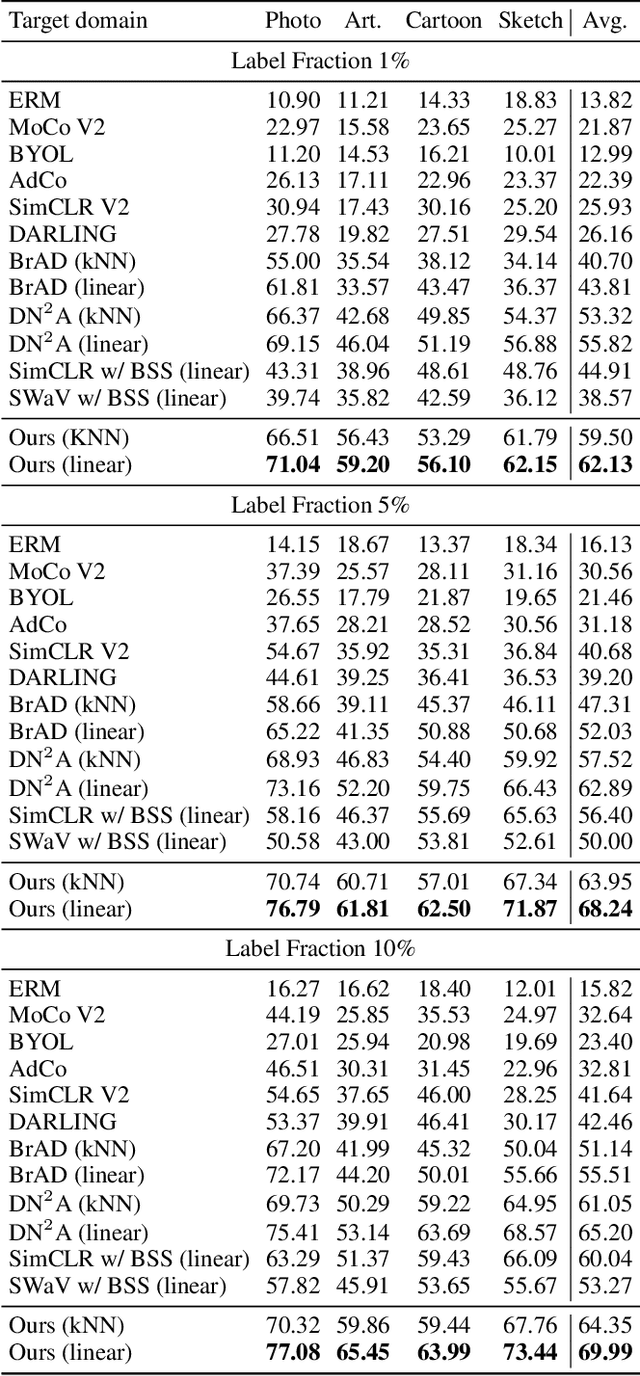

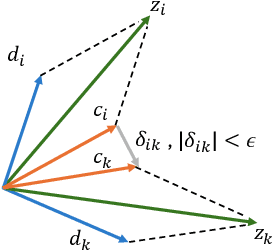

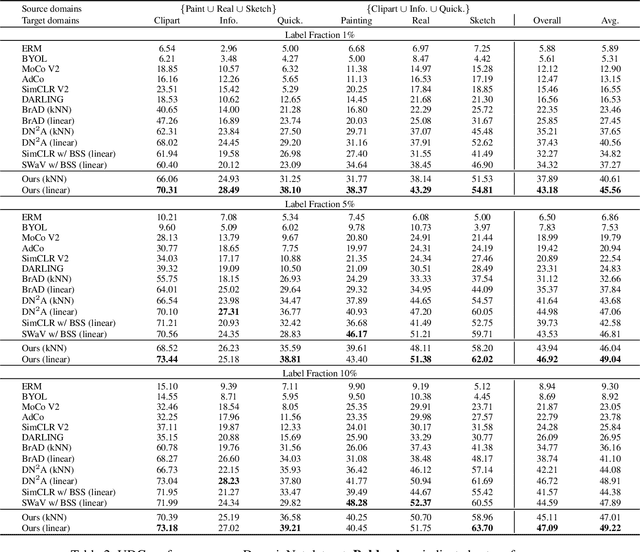

Abstract: Self-supervised learning (SSL) methods based on instance discrimination tasks with InfoNCE have achieved remarkable success. Despite their success, SSL models often struggle to generate effective representations for unseen-domain data. To address this issue, research has been conducted on unsupervised domain generalization (UDG), which aims to develop SSL models that can generate domain-irrelevant features. Most UDG approaches utilize contrastive learning with InfoNCE to generate representations, and perform feature alignment based on strong assumptions to generalize domain-irrelevant common features from multi-source domains. However, existing methods that rely on instance discrimination tasks are not effective at extracting domain-irrelevant common features. This leads to the suppression of domain-irrelevant common features and the amplification of domain-relevant features, thereby hindering domain generalization. Furthermore, strong assumptions underlying feature alignment can lead to biased feature learning, reducing the diversity of common features. In this paper, we propose a novel approach, DomCLP, Domain-wise Contrastive Learning with Prototype Mixup. We explore how InfoNCE suppresses domain-irrelevant common features and amplifies domain-relevant features. Based on this analysis, we propose Domain-wise Contrastive Learning (DCon) to enhance domain-irrelevant common features. We also propose Prototype Mixup Learning (PMix) to generalize domain-irrelevant common features across multiple domains without relying on strong assumptions. The proposed method consistently outperforms state-of-the-art methods on the PACS and DomainNet datasets across various label fractions, showing significant improvements. Our code will be released. Our project page is available at https://github.com/jinsuby/DomCLP.
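For readers unfamiliar with the InfoNCE objective named in the abstract, a commonly used form is given below. The notation is the standard one from the contrastive learning literature and is an assumption here, not taken from the DomCLP paper: $z_i$ is the embedding of an anchor sample, $z_i^{+}$ the embedding of its augmented positive view, $z_k$ ranges over the positive and the negatives in the batch, $\mathrm{sim}$ is cosine similarity, and $\tau$ is a temperature.

```latex
\mathcal{L}_{\mathrm{InfoNCE}}
  = -\log
    \frac{\exp\!\left(\mathrm{sim}(z_i, z_i^{+}) / \tau\right)}
         {\sum_{k} \exp\!\left(\mathrm{sim}(z_i, z_k) / \tau\right)}
```

Minimizing this loss pulls the anchor toward its own positive and pushes it away from every other instance in the denominator, which is the instance discrimination behavior the abstract analyzes.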
