Jiewen Sheng

Differentially Private Log-Location-Scale Regression Using Functional Mechanism

Apr 12, 2024

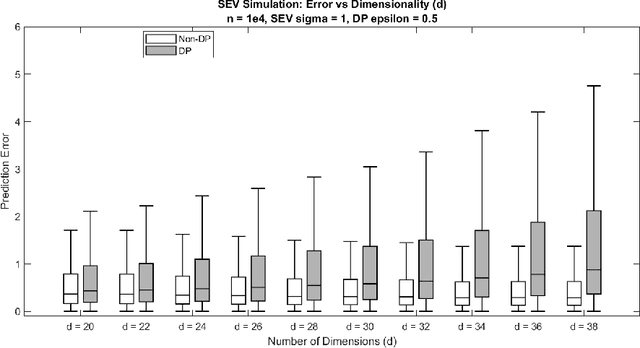

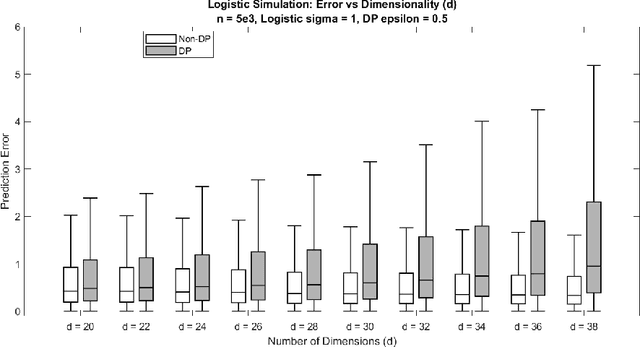

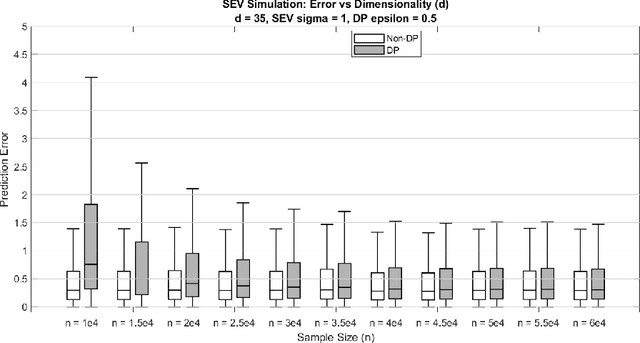

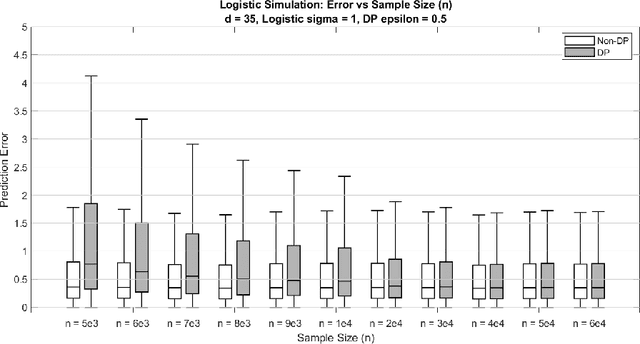

Abstract: This article introduces differentially private log-location-scale (DP-LLS) regression models, which incorporate differential privacy into LLS regression through the functional mechanism. The proposed models are built by injecting noise into the log-likelihood function of LLS regression, so that parameters are estimated from a perturbed objective. We derive the sensitivities that determine the magnitude of the injected noise and prove that the proposed DP-LLS models satisfy $\epsilon$-differential privacy. We also conduct simulations and case studies to evaluate the performance of the proposed models. The findings suggest that predictor dimension, training sample size, and privacy budget are three key factors affecting the performance of the proposed DP-LLS regression models. Moreover, the results indicate that a sufficiently large training dataset is needed to achieve good model performance and a satisfactory level of privacy protection at the same time.
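To make the idea of perturbing an objective function concrete, the following is a minimal sketch of the functional mechanism applied to ordinary least-squares regression, not to the LLS log-likelihood studied in the article. It assumes every predictor row satisfies $\|x\|_2 \le 1$ and every response satisfies $|y| \le 1$, in which case the commonly cited sensitivity bound for this quadratic objective is $2(d+1)^2$. The function name, the `ridge` stabilizer, and the symmetrization step are illustrative choices and are not taken from the article; the DP-LLS sensitivities derived there will differ.

```python
import numpy as np

def functional_mechanism_linear(X, y, epsilon, ridge=1e-3, rng=None):
    """Illustrative functional mechanism for least squares (hypothetical helper).

    Assumes each row of X has L2 norm <= 1 and |y| <= 1. The objective
    sum_i (y_i - x_i^T w)^2 is a degree-2 polynomial in w, so Laplace noise
    is added to its linear and quadratic coefficients before minimizing.
    """
    rng = np.random.default_rng() if rng is None else rng
    n, d = X.shape

    # Assumed sensitivity bound for this objective under the norm constraints.
    delta = 2.0 * (d + 1) ** 2
    scale = delta / epsilon

    # Polynomial coefficients: w^T M w + c^T w + const.
    M = X.T @ X            # coefficients of the monomials w_j * w_l
    c = -2.0 * (X.T @ y)   # coefficients of the monomials w_j

    # Perturb every coefficient with Laplace noise of scale delta / epsilon.
    M_noisy = M + rng.laplace(0.0, scale, size=(d, d))
    c_noisy = c + rng.laplace(0.0, scale, size=d)

    # Post-processing (no extra privacy cost): symmetrize and add a small
    # ridge term so the noisy quadratic form is more likely to have a minimizer.
    M_noisy = 0.5 * (M_noisy + M_noisy.T) + ridge * np.eye(d)

    # Stationary point of the noisy objective: 2 M w + c = 0.
    return np.linalg.solve(2.0 * M_noisy, -c_noisy)
```

With a small privacy budget or a small sample, the noise can dominate the coefficients, which is consistent with the abstract's observation that dimension, sample size, and privacy budget jointly drive performance.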
