Jesse Parent

Connectionism, Complexity, and Living Systems: a comparison of Artificial and Biological Neural Networks

Mar 15, 2021

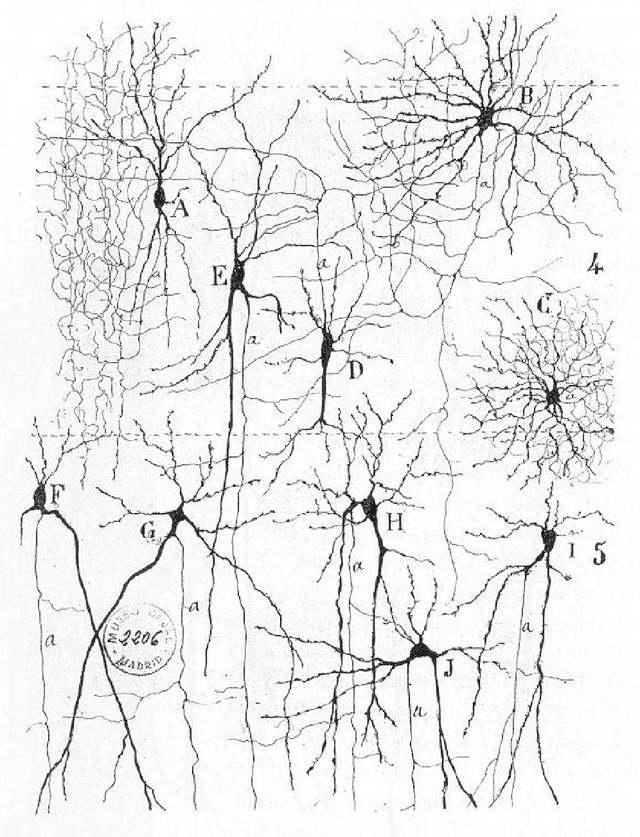

Abstract: While Artificial Neural Networks (ANNs) have yielded impressive results in the realm of simulated intelligent behavior, it is important to remember that they are but sparse approximations of Biological Neural Networks (BNNs). We go beyond comparison of ANNs and BNNs to introduce principles from BNNs that might guide the further development of ANNs as embodied neural models. These principles include representational complexity, complex network structure/energetics, and robust function. We then consider these principles in ways that might be implemented in the future development of ANNs. In conclusion, we consider the utility of this comparison, particularly in terms of building more robust and dynamic ANNs. This even includes constructing a morphology and sensory apparatus to create an embodied ANN, which when complemented with the organizational and functional advantages of BNNs unlocks the adaptive potential of lifelike networks.

Embodied Continual Learning Across Developmental Time Via Developmental Braitenberg Vehicles

Mar 07, 2021

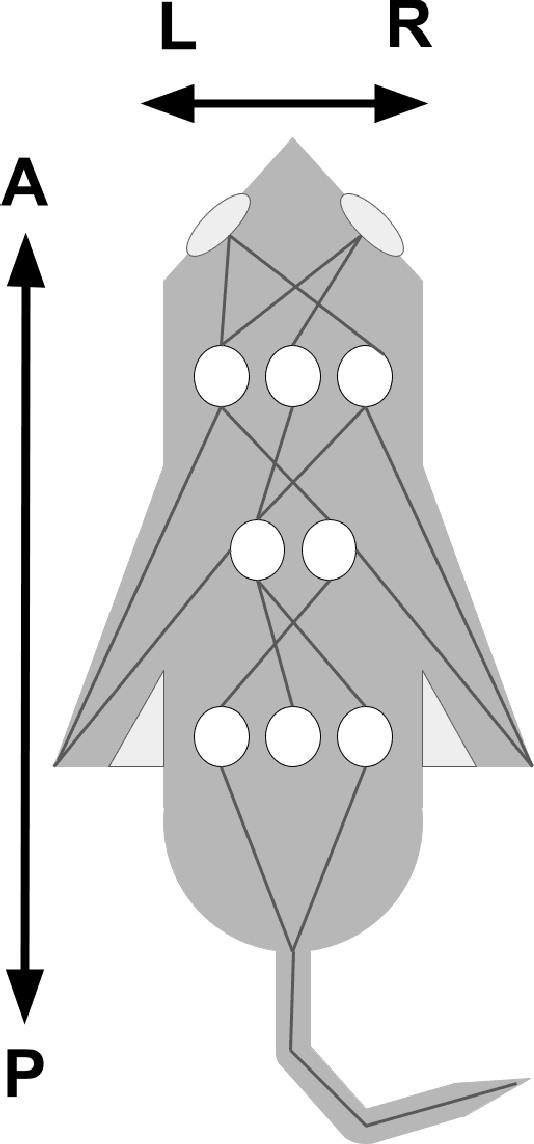

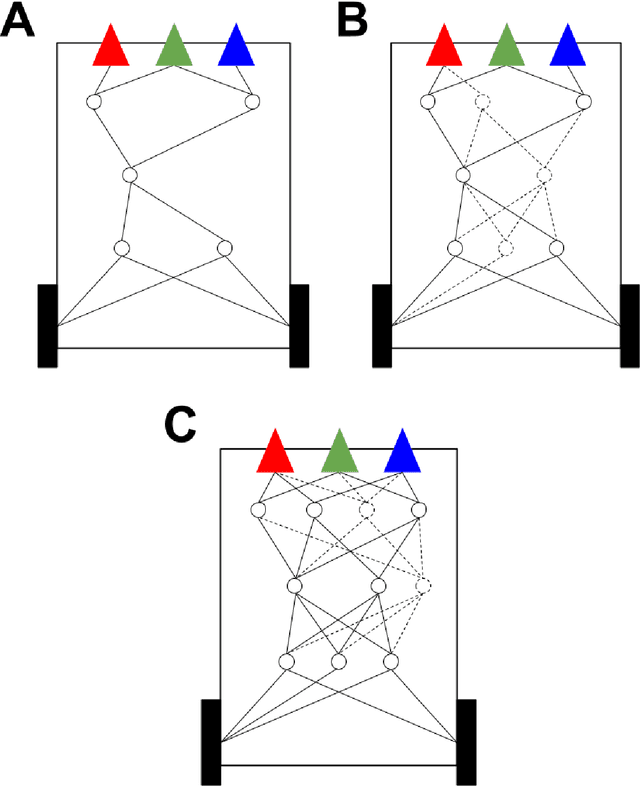

Abstract: There is much to learn through synthesis of Developmental Biology, Cognitive Science and Computational Modeling. One lesson we can learn from this perspective is that the initialization of intelligent programs cannot solely rely on manipulation of numerous parameters. Our path forward is to present a design for developmentally-inspired learning agents based on the Braitenberg Vehicle. Using these agents to exemplify artificial embodied intelligence, we move closer to modeling embodied experience and morphogenetic growth as components of cognitive developmental capacity. We consider various factors regarding biological and cognitive development which influence the generation of adult phenotypes and the contingency of available developmental pathways. These mechanisms produce emergent connectivity with shifting weights and adaptive network topography, thus illustrating the importance of developmental processes in training neural networks. This approach provides a blueprint for adaptive agent behavior that might result from a developmental approach: namely by exploiting critical periods or growth and acquisition, an explicitly embodied network architecture, and a distinction between the assembly of neural networks and active learning on these networks.
