Jesper Hauch

Learning and Generalizing Polynomials in Simulation Metamodeling

Jul 20, 2023

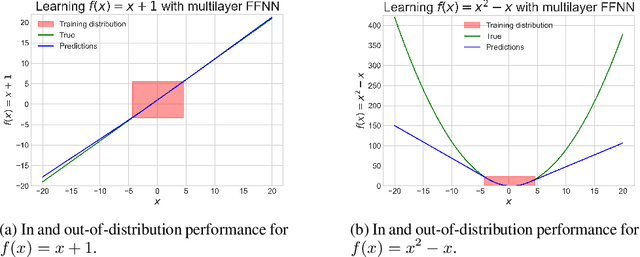

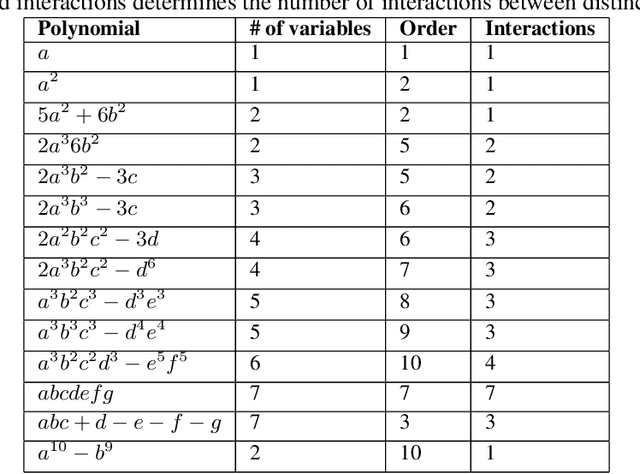

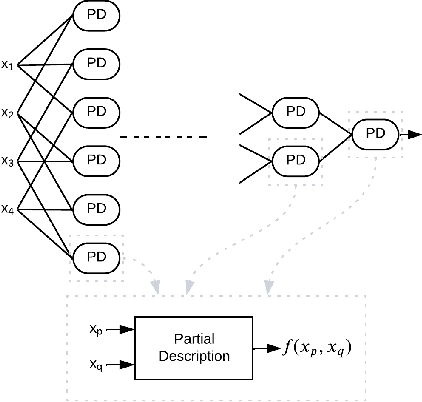

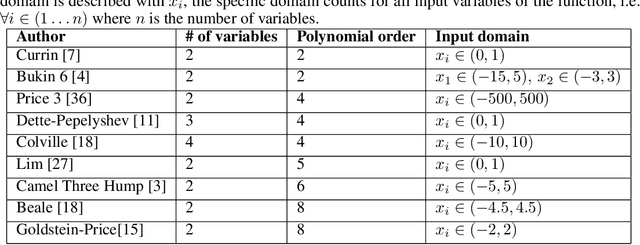

Abstract: The ability to learn polynomials and generalize out-of-distribution is essential for simulation metamodels in many engineering disciplines, where time step updates are described by polynomials. While feedforward neural networks can fit any function, they cannot generalize out-of-distribution for higher-order polynomials. This paper therefore collects and proposes multiplicative neural network (MNN) architectures that serve as recursive building blocks for approximating higher-order polynomials. Our experiments show that MNNs generalize better than baseline models, and that their validation performance is a faithful indicator of their performance in out-of-distribution tests. In addition to the MNN architectures, we propose a simulation metamodeling approach for simulations with polynomial time step updates. For these simulations, a time interval can be simulated in fewer steps by increasing the step size, which entails approximating higher-order polynomials. While our approach is compatible with any simulation with polynomial time step updates, we demonstrate it on an epidemiology simulation model, which also illustrates the inductive bias of MNNs for learning and generalizing higher-order polynomials.
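To illustrate why a larger step size entails higher-order polynomials, the following is a minimal sketch and not the paper's implementation. It assumes a discrete SIR-style update (S' = S - beta*S*I, I' = I + beta*S*I - gamma*I) as a stand-in for the epidemiology model; the update rule, the parameter values `beta` and `gamma`, and all function names are hypothetical choices made for illustration. Composing k degree-2 updates symbolically yields the polynomial a metamodel would have to approximate in order to replace k small steps with one large step.

```python
from collections import defaultdict

# Polynomials in (S, I) are stored as {(s_exp, i_exp): coefficient}.

def p_add(p, q):
    """Sum of two polynomials."""
    out = defaultdict(float)
    for poly in (p, q):
        for mono, c in poly.items():
            out[mono] += c
    return {m: c for m, c in out.items() if c != 0.0}

def p_scale(p, k):
    """Polynomial multiplied by the scalar k."""
    return {m: c * k for m, c in p.items() if c * k != 0.0}

def p_mul(p, q):
    """Product of two polynomials."""
    out = defaultdict(float)
    for (a, b), c in p.items():
        for (d, e), f in q.items():
            out[(a + d, b + e)] += c * f
    return {m: c for m, c in out.items() if c != 0.0}

def degree(p):
    """Total degree of a polynomial."""
    return max(a + b for (a, b) in p)

def p_eval(p, s, i):
    """Evaluate a polynomial at the numeric point (s, i)."""
    return sum(c * s**a * i**b for (a, b), c in p.items())

def sir_step(S, I, beta, gamma):
    """One assumed update: S' = S - beta*S*I, I' = I + beta*S*I - gamma*I."""
    infection = p_scale(p_mul(S, I), beta)
    S_next = p_add(S, p_scale(infection, -1.0))
    I_next = p_add(p_add(I, infection), p_scale(I, -gamma))
    return S_next, I_next

def compose_steps(k, beta=0.3, gamma=0.1):
    """Polynomials mapping the initial (S, I) to the state after k steps."""
    S, I = {(1, 0): 1.0}, {(0, 1): 1.0}  # start from the identity map
    for _ in range(k):
        S, I = sir_step(S, I, beta, gamma)
    return S, I

# Degree of the composed S-update for k = 1..4 small steps.
degrees = [degree(compose_steps(k)[0]) for k in range(1, 5)]
print(degrees)
```

Under these assumptions the degree doubles with every composed step (2, 4, 8, 16 for one to four steps), so a metamodel that covers the same time interval in a single larger step has to represent a polynomial whose order grows quickly with the step size.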
