Jean-Sébastien Dessureault

AI2: The next leap toward native language based and explainable machine learning framework

Jan 12, 2023

Abstract: Machine learning frameworks have flourished over the last decades, allowing artificial intelligence to move beyond academic circles and into enterprise domains. The field has advanced significantly, but there is still room for meaningful improvement to meet users' expectations. The proposed framework, named AI², uses a natural language interface that allows a non-specialist to benefit from machine learning algorithms without necessarily knowing how to program. The primary contribution of the AI² framework is that it allows a user to call machine learning algorithms in English, which makes its interface easier to use. The second contribution is greenhouse gas (GHG) awareness: the framework includes strategies to estimate the GHG emissions generated by the algorithm to be called and to propose alternatives that find a solution without executing the energy-intensive algorithm. Another contribution is a preprocessing module that helps describe and load data properly. Using an English text-based chatbot, this module guides the user in defining every dataset so that it can be described, normalized, loaded, and divided appropriately. The last contribution of this paper concerns explainability. For decades, the scientific community has known that machine learning algorithms suffer from the well-known black-box problem: traditional machine learning methods convert an input into an output without being able to justify the result. The proposed framework explains the algorithm's process with appropriate text, graphics, and tables. The results, presented through five use cases, show applications ranging from the user's English command to the explained output. Ultimately, the AI² framework represents the next leap toward native-language-based, human-oriented machine learning frameworks.
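The abstract does not say how AI² estimates GHG emissions. As a purely hypothetical illustration, a common back-of-the-envelope estimate multiplies hardware power draw, runtime, data-centre overhead (PUE) and grid carbon intensity. The function names, parameters and the budget rule below are assumptions for illustration, not the framework's API.

```python
def estimate_co2e_kg(power_kw, runtime_h, carbon_intensity_kg_per_kwh, pue=1.0):
    """Hypothetical estimate: energy used (kWh) times grid carbon intensity (kg CO2e/kWh).

    pue scales IT energy to total facility energy (1.0 means no overhead).
    """
    energy_kwh = power_kw * runtime_h * pue
    return energy_kwh * carbon_intensity_kg_per_kwh


def suggest_action(estimate_kg, budget_kg):
    """Hypothetical policy: propose a cheaper alternative when the estimate exceeds a budget."""
    if estimate_kg > budget_kg:
        return "propose alternative"
    return "run"
```

For example, a 0.3 kW job running for 2 hours on a grid at 0.5 kg CO2e/kWh is estimated at about 0.3 kg CO2e, which this rule would flag against a 0.1 kg budget.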

ck-means, a novel unsupervised learning method that combines fuzzy and crispy clustering methods to extract intersecting data

Jun 17, 2022

Abstract: Clustering data is a popular task in the field of unsupervised machine learning. Most algorithms aim to find the best method to extract consistent clusters of data, but very few of them aim to cluster data that lie at the same intersection of two or more features. This paper proposes a method to do so. The main idea of this novel method is to generate fuzzy clusters of data using a Fuzzy C-Means (FCM) algorithm. The second step applies a filter that selects a range of minimum and maximum membership values, emphasizing the border data. A μ parameter defines the amplitude of this range. Finally, the method applies a k-means algorithm to the membership values generated by the FCM, so that data with similar membership values naturally regroup into new crisp clusters. The algorithm also finds, by itself, the optimal number of clusters for both the FCM and the k-means algorithms, according to the consistency of the clusters measured by the Silhouette Index (SI). The result is a list of data and clusters, each cluster grouping data that share the same intersection of two or more features. ck-means thus extracts highly similar data that do not naturally fall in a single cluster but lie at the intersection of two or more clusters.
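The three stages described above (FCM memberships, a μ-controlled border filter, then k-means on the membership vectors) can be sketched with the standard library. This is a minimal sketch under stated assumptions, not the authors' implementation: it uses deterministic farthest-point seeding instead of random initialization, a hypothetical filter that keeps points whose largest membership is at most 1 − μ (the paper's exact range definition may differ), and fixed `c` and `k` instead of the Silhouette-based search.

```python
import math


def _dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def _farthest_point_init(points, k):
    # Assumption: deterministic seeding. Start at points[0], then repeatedly add
    # the point farthest from all centers chosen so far.
    centers = [list(points[0])]
    while len(centers) < k:
        nxt = max(points, key=lambda p: min(_dist(p, ctr) for ctr in centers))
        centers.append(list(nxt))
    return centers


def fuzzy_c_means(points, c, m=2.0, iters=100, eps=1e-9):
    """Standard FCM updates; returns one membership row per point (rows sum to 1)."""
    centers = _farthest_point_init(points, c)
    power = 2.0 / (m - 1.0)
    dims = len(points[0])
    u = []
    for _ in range(iters):
        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik)^(2 / (m - 1))
        u = []
        for p in points:
            d = [max(_dist(p, ctr), eps) for ctr in centers]
            u.append([1.0 / sum((d[j] / d[q]) ** power for q in range(c)) for j in range(c)])
        # Center update: mean of all points weighted by u_ij^m
        for j in range(c):
            w = [row[j] ** m for row in u]
            total = sum(w)
            centers[j] = [sum(wi * p[t] for wi, p in zip(w, points)) / total for t in range(dims)]
    return u


def border_filter(u, mu):
    # Hypothetical filter: keep points not firmly owned by a single fuzzy cluster,
    # i.e. whose largest membership value is at most 1 - mu.
    return [i for i, row in enumerate(u) if max(row) <= 1.0 - mu]


def k_means(vectors, k, iters=100):
    """Plain Lloyd iterations on the (membership) vectors; returns one label per vector."""
    centroids = _farthest_point_init(vectors, k)
    labels = [0] * len(vectors)
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: _dist(v, centroids[j])) for v in vectors]
        for j in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def ck_means(points, c, k, mu, m=2.0):
    """Returns {point index: crisp intersection-cluster label} for the kept border points."""
    u = fuzzy_c_means(points, c, m)
    kept = border_filter(u, mu)
    if len(kept) < k:
        return {i: 0 for i in kept}
    labels = k_means([u[i] for i in kept], k)
    return dict(zip(kept, labels))
```

With three well-separated groups on a line and a few points halfway between neighbouring groups, only the halfway points pass the filter, and k-means separates the "between groups 1 and 2" points from the "between groups 2 and 3" points because their membership vectors differ.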

Explainable Global Error Weighted on Feature Importance: The xGEWFI metric to evaluate the error of data imputation and data augmentation

Jun 17, 2022

Abstract: Evaluating the performance of an algorithm is crucial. Data imputation and data augmentation can be evaluated in a similar way, since in both cases the generated data can be compared with an original distribution. Yet typical evaluation metrics share the same flaw: they calculate each feature's error and the global error on the generated data without weighting the error by the feature's importance. The result can be acceptable if all features have similar importance. However, in most cases, feature importance is imbalanced, which can introduce a significant bias into the feature and global errors. This paper proposes a novel metric named "Explainable Global Error Weighted on Feature Importance" (xGEWFI). This new metric is tested within a complete preprocessing pipeline that (1) detects outliers and replaces them with null values, (2) imputes the missing data, and (3) augments the data. At the end of the process, the xGEWFI error is calculated. The distribution error between the original and generated data is calculated for each feature using a Kolmogorov-Smirnov test (KS test). These results are multiplied by the importance of the respective features, calculated using a Random Forest (RF) algorithm. The metric result is expressed in an explainable format, aiming toward ethical AI.
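A minimal sketch of the metric as the abstract describes it: a two-sample KS statistic per feature, multiplied by that feature's importance, then summed. The importances are passed in rather than computed (in the paper they come from a Random Forest), and normalizing them to sum to 1 is an assumption that changes nothing when they already sum to 1. The function names are hypothetical.

```python
import bisect


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: sup over x of |F_a(x) - F_b(x)|."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    # Both empirical CDFs are step functions, so the supremum is reached at an observed value.
    for x in set(a) | set(b):
        fa = bisect.bisect_right(a, x) / len(a)
        fb = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(fa - fb))
    return d


def xgewfi(original, generated, importances):
    """Global error = sum over features of KS(original_f, generated_f) * weight_f.

    original, generated: dict of feature name -> list of values.
    importances: dict of feature name -> importance (e.g. from a Random Forest).
    Returns (global_error, per-feature report) so each contribution stays visible.
    """
    total = sum(importances.values())
    report = {}
    for f in original:
        ks = ks_statistic(original[f], generated[f])
        weight = importances[f] / total  # assumption: weights normalized to sum to 1
        report[f] = {"ks": ks, "importance": weight, "weighted_error": ks * weight}
    global_error = sum(r["weighted_error"] for r in report.values())
    return global_error, report
```

The per-feature `report` is what makes the result explainable: a feature with a large KS error but low importance contributes little to the global error, and the breakdown shows why.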

DPDR: A novel machine learning method for the Decision Process for Dimensionality Reduction

Jun 17, 2022

Abstract: This paper discusses the critical decision process of extracting or selecting features in a supervised learning context. Finding a suitable method to reduce dimensionality is often confusing. Choosing between feature selection and feature extraction involves pros and cons that depend on the nature of the data and the user's preferences. Indeed, the user may want to favor either the integrity or the interpretability of the results, as well as a specific data resolution. This paper proposes a new method to choose the best dimensionality reduction method in a supervised learning context. It also helps drop or reconstruct features until a target resolution is reached. This target resolution can be user-defined, or it can be defined automatically by the method. The method applies a regression or a classification, evaluates the results, and gives a diagnosis about the best dimensionality reduction process in this specific supervised learning context. The main algorithms used are Random Forest (RF) algorithms, the Principal Component Analysis (PCA) algorithm, and the multilayer perceptron (MLP) neural network algorithm. Six use cases are presented, each based on a well-known technique for generating synthetic data. This research discusses each choice that can be made in the process, aiming to clarify the issues involved in the entire decision process of selecting or extracting features.
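The abstract names RF, PCA and MLP but not the exact decision rules. The sketch below illustrates, under stated assumptions, three pieces such a process could contain: an automatic target resolution taken from PCA explained-variance ratios (the 95% threshold is an assumption), dropping features by RF importance down to a target resolution, and a hypothetical diagnosis rule comparing model scores. It trains no models; ratios, importances and scores are inputs, and all names are hypothetical.

```python
def components_for_variance(explained_ratios, threshold=0.95):
    """Smallest number of components whose cumulative explained variance reaches threshold."""
    total = 0.0
    for i, ratio in enumerate(explained_ratios, start=1):
        total += ratio
        if total >= threshold:
            return i
    return len(explained_ratios)


def drop_to_resolution(importances, target):
    """Feature selection: keep the `target` most important features (ties broken by name)."""
    ranked = sorted(importances, key=lambda f: (-importances[f], f))
    return sorted(ranked[:target])


def diagnose(selection_score, extraction_score, prefer_interpretability, tolerance=0.02):
    """Hypothetical rule: when interpretability matters, accept feature selection
    if it scores at most `tolerance` below feature extraction; otherwise pick the better score."""
    if prefer_interpretability and selection_score >= extraction_score - tolerance:
        return "feature selection"
    return "feature selection" if selection_score >= extraction_score else "feature extraction"
```

The tolerance makes the interpretability preference explicit: selected features keep their original meaning, so a small loss in score may be worth it, whereas PCA components are linear combinations that are harder to interpret.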

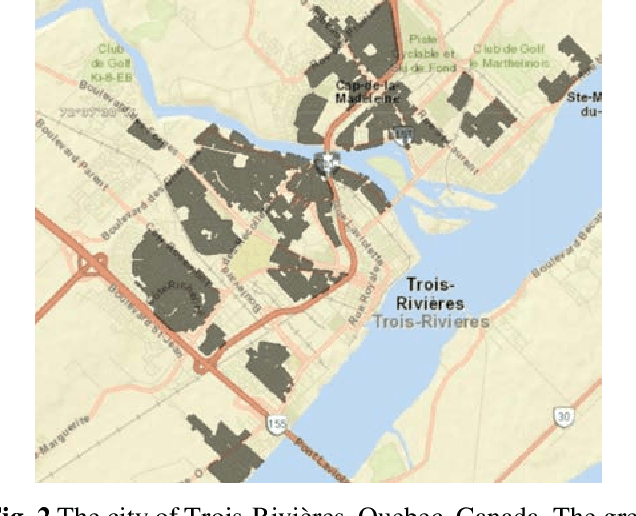

Unsupervised Machine learning methods for city vitality index

Jan 29, 2021

Abstract: This paper addresses the challenge of evaluating and predicting a district vitality index (VI) over the years. There is no standard method for doing so, and it is even more complicated to do so retroactively over the last decades. Nevertheless, it is essential to evaluate and learn from past features to predict a future VI. This paper proposes a method to evaluate such a VI, based on a k-means clustering algorithm. The meta-parameters of this unsupervised machine learning technique are optimized by a genetic algorithm. Based on the resulting clusters and VI, a linear regression is applied to predict the VI of each district of a city. The weight of each feature used in the clustering is calculated using a random forest regressor algorithm. This method can provide powerful insights for urbanists and inspire the drafting of a city plan in the smart city context.
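The abstract does not specify how the VI is derived from the clusters, so this sketch only illustrates two generic steps it mentions, under stated assumptions: feature weights (assumed here to come from a random forest regressor) entering a weighted Euclidean distance that a k-means assignment step could use, and an ordinary least-squares linear regression of one district's VI over the years, used to extrapolate. The genetic-algorithm tuning is omitted, and the function names and VI values are hypothetical.

```python
import math


def weighted_distance(a, b, weights):
    """Euclidean distance where each squared feature difference is scaled by its weight."""
    return math.sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))


def fit_trend(years, vi):
    """Ordinary least squares fit of vi = intercept + slope * year; returns (intercept, slope)."""
    n = len(years)
    mean_x, mean_y = sum(years) / n, sum(vi) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, vi))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def predict(intercept, slope, year):
    return intercept + slope * year
```

For instance, a district whose (hypothetical) VI was 1, 2 and 3 in 2000, 2005 and 2010 gets a slope of 0.2 per year and a projected VI of about 4 in 2015.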
