Jay Morgan

Domain-informed graph neural networks: a quantum chemistry case study

Aug 25, 2022

Abstract: We explore different strategies for integrating prior domain knowledge into the design of a deep neural network (DNN). We focus on graph neural networks (GNNs), with the use case of estimating the potential energy of chemical systems (molecules and crystals) represented as graphs. We integrate two elements of domain knowledge into the design of the GNN to constrain and regularise its learning, with the aim of improving accuracy and generalisation. First, knowledge of the existence of different types of relations (chemical bonds) between atoms is used to modulate the interaction of nodes in the GNN. Second, knowledge of the relevance of certain physical quantities is used, through a simple multi-task paradigm, to constrain the learnt features towards greater physical relevance. We demonstrate the general applicability of these knowledge integrations by applying them to two architectures that rely on different mechanisms to propagate information between nodes and to update node states.
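A minimal sketch of the two ideas, assuming a toy pure-Python setting rather than either of the architectures used in the paper: each message between atoms is transformed by a weight matrix selected by the bond type of the edge (in the spirit of relational message passing), and training minimises the energy error plus a weighted auxiliary error on additional physical quantities. The function names, the bond labels, the residual update and the `weight` value are hypothetical illustrations, not the authors' implementation.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def message_passing_step(
    h: Dict[int, Vector],
    edges: List[Tuple[int, int, str]],
    w_bond: Dict[str, Matrix],
) -> Dict[int, Vector]:
    """One hypothetical update: every directed edge (src, dst, bond_type)
    sends h[src] through the weight matrix of its bond type, and the
    message is added to dst's state (residual-style update)."""
    new_h = {i: list(v) for i, v in h.items()}  # copy so the input is not mutated
    for src, dst, bond in edges:
        msg = matvec(w_bond[bond], h[src])  # messages use the *old* states
        new_h[dst] = [a + b for a, b in zip(new_h[dst], msg)]
    return new_h


def multitask_loss(
    pred_energy: float,
    true_energy: float,
    pred_aux: Sequence[float],
    true_aux: Sequence[float],
    weight: float = 0.1,
) -> float:
    """Squared energy error plus a weighted mean squared error on
    auxiliary physical targets (assumed multi-task formulation)."""
    energy_term = (pred_energy - true_energy) ** 2
    aux_term = sum((p - t) ** 2 for p, t in zip(pred_aux, true_aux)) / len(true_aux)
    return energy_term + weight * aux_term
```

With `h = {0: [1.0, 0.0], 1: [0.0, 1.0]}` and a single `"double"` edge from node 0 to node 1 whose matrix is twice the identity, node 1's state becomes `[2.0, 1.0]` while node 0 is unchanged; a `"single"` edge with an identity matrix would contribute only `[1.0, 0.0]`. Selecting the matrix per bond type is what lets the known chemistry change how strongly, and in which feature directions, neighbouring atoms influence each other.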

A Computability Perspective on (Verified) Machine Learning

Feb 12, 2021

Abstract: There is a strong consensus that combining the versatility of machine learning with the assurances given by formal verification is highly desirable. It is much less clear what verified machine learning should mean exactly. We consider this question from the (unexpected?) perspective of computable analysis. This allows us to define the computational tasks underlying verified ML in a model-agnostic way, and show that they are in principle computable.

Adaptive Neighbourhoods for the Discovery of Adversarial Examples

Jan 22, 2021

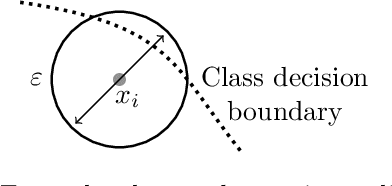

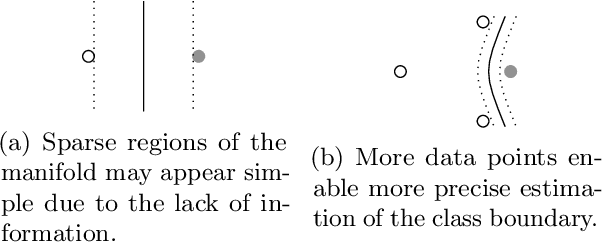

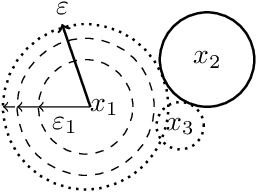

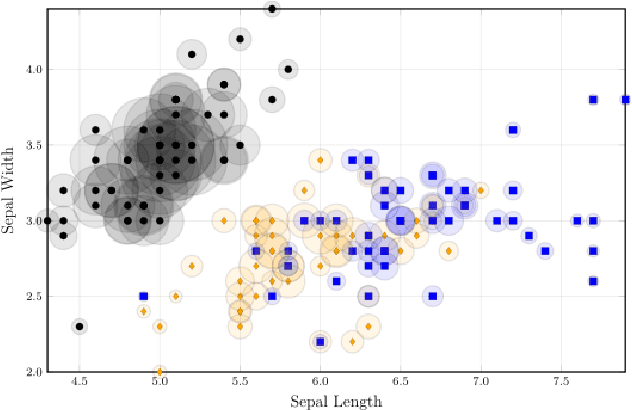

Abstract: Deep Neural Networks (DNNs) have often supplied state-of-the-art results in pattern recognition tasks. Despite these advances, however, the existence of adversarial examples has caught the attention of the community. Many existing works have proposed methods for searching for adversarial examples within fixed-sized regions around training points. Our work complements and improves these existing approaches by adapting the size of these regions based on the problem complexity and data sampling density. This makes such approaches more appropriate for other types of data and may further improve adversarial training methods by increasing the region sizes without creating incorrect labels.
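To illustrate the contrast between fixed-sized and adapted regions, here is a minimal sketch under a simplifying assumption that is not taken from the paper: each training point gets its own radius, set to a fraction of the Euclidean distance to its nearest point of a different class, and candidate adversarial examples are projected back into that per-point ball. The names `adaptive_radii`, `project_to_ball` and the `fraction` parameter are hypothetical; the paper's actual procedure may differ.

```python
import math
from typing import List, Sequence


def adaptive_radii(
    points: Sequence[Sequence[float]],
    labels: Sequence[int],
    fraction: float = 0.5,
) -> List[float]:
    """Hypothetical per-point radius: a fraction of the distance to the
    nearest training point with a different label. Points with no
    other-class neighbour get an unbounded (infinite) radius."""
    radii = []
    for x, y in zip(points, labels):
        nearest_other = min(
            (math.dist(x, z) for z, lz in zip(points, labels) if lz != y),
            default=math.inf,
        )
        radii.append(fraction * nearest_other)
    return radii


def project_to_ball(
    center: Sequence[float],
    candidate: Sequence[float],
    radius: float,
) -> List[float]:
    """Project a candidate perturbation onto the L2 ball of the given
    radius around `center` (standard projection used in PGD-style search)."""
    d = math.dist(center, candidate)
    if d <= radius:
        return list(candidate)
    scale = radius / d
    return [c + scale * (p - c) for c, p in zip(center, candidate)]
```

For the 1-D-style points `[[0.0, 0.0], [1.0, 0.0], [4.0, 0.0]]` with labels `[0, 0, 1]`, the radii are `[2.0, 1.5, 1.5]`: the point far from the other class receives a larger search region than the points near the class boundary, whereas a single fixed radius would either be too small for the first point or risk crossing into the other class for the others.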
