Jan Hadl

Declarative Knowledge Distillation from Large Language Models for Visual Question Answering Datasets

Oct 12, 2024

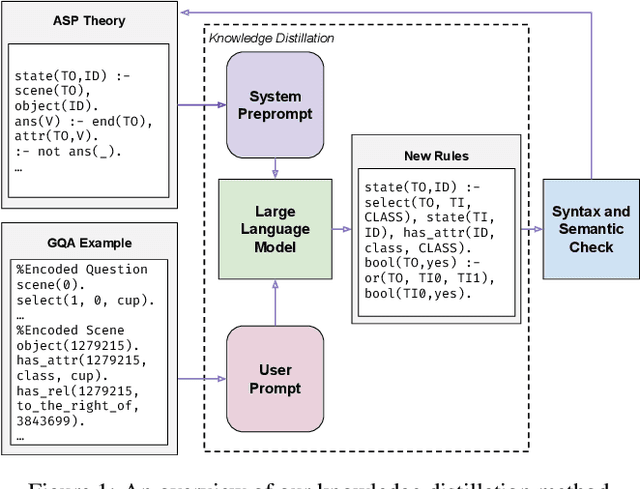

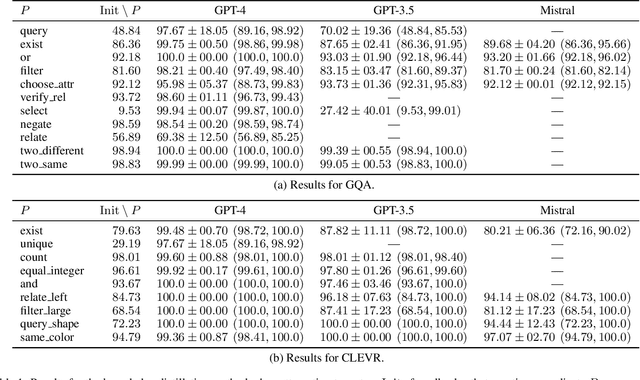

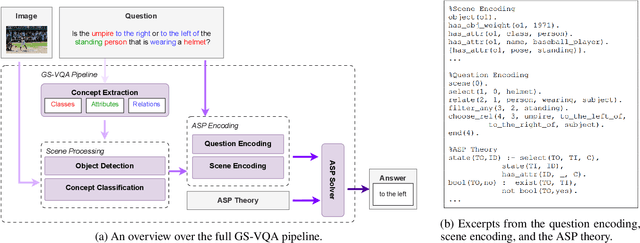

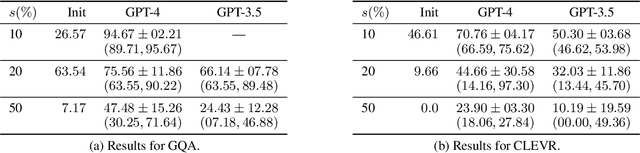

Abstract:Visual Question Answering (VQA) is the task of answering a question about an image and requires processing multimodal input and reasoning to obtain the answer. Modular solutions that use declarative representations within the reasoning component have a clear advantage over end-to-end trained systems regarding interpretability. The downside is that crafting the rules for such a component can be an additional burden on the developer. We address this challenge by presenting an approach for declarative knowledge distillation from Large Language Models (LLMs). Our method is to prompt an LLM to extend an initial theory on VQA reasoning, given as an answer-set program, to meet the requirements of the VQA task. Examples from the VQA dataset are used to guide the LLM, validate the results, and mend rules if they are not correct by using feedback from the ASP solver. We demonstrate that our approach works on the prominent CLEVR and GQA datasets. Our results confirm that distilling knowledge from LLMs is in fact a promising direction besides data-driven rule learning approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge