Henry Wilde

Segmentation analysis and the recovery of queuing parameters via the Wasserstein distance: a study of administrative data for patients with chronic obstructive pulmonary disease

Aug 14, 2020

Abstract: This work uses a data-driven approach to analyse how the resource requirements of patients with chronic obstructive pulmonary disease (COPD) may change, and quantifies how those changes affect the hospital system with which the patients interact. The approach combines three modes of analysis that are often kept separate: segmentation, operational queuing theory, and the recovery of parameters from incomplete data. By combining these methods as presented here, this work demonstrates that potential limitations around the availability of fine-grained data can be overcome, yielding useful operational results from administrative data alone. The paper begins by finding a useful clustering of the population from this granular data that feeds into a multi-class M/M/c model, whose parameters are recovered from the data via parameterisation and the Wasserstein distance. This model is then used to conduct an informative analysis of the underlying queuing system and the needs of the population under study through several what-if scenarios. The analyses used to form and study this model consider, in effect, all types of patient arrivals and how those types affect the system. The study finds that there are no quick solutions, including adding capacity, for reducing the impact of COPD patients on the system. In this analysis, the only effective intervention to reduce the strain caused by those presenting with COPD is to enact external policies that directly improve the overall health of the COPD population before they arrive at the hospital.
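As a rough illustration of recovering a parameter by minimising the Wasserstein distance, the sketch below fits the rate of an exponential distribution to a set of observed durations. It is a simplified stand-in, not the paper's procedure: the function names, the candidate grid, and the use of analytic exponential quantiles in place of a simulated multi-class M/M/c queue are all assumptions made for this example.

```python
import math


def wasserstein_1d(xs, ys):
    """First Wasserstein distance between two equal-size 1-D samples.

    For equal-size samples this is the mean absolute difference
    between the sorted values.
    """
    if len(xs) != len(ys):
        raise ValueError("samples must have the same size")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def exponential_quantiles(rate, n):
    """n evenly spaced quantiles of an Exponential(rate) distribution."""
    return [-math.log(1 - (i + 0.5) / n) / rate for i in range(n)]


def recover_rate(observed, candidate_rates):
    """Return the candidate rate whose quantiles are closest to the data."""
    n = len(observed)
    return min(
        candidate_rates,
        key=lambda rate: wasserstein_1d(observed, exponential_quantiles(rate, n)),
    )


# Hypothetical "observed" durations generated from a known rate of 0.5.
observed = exponential_quantiles(0.5, 200)
best_rate = recover_rate(observed, [0.1, 0.25, 0.5, 1.0, 2.0])
```

In a setting closer to the abstract, the model quantiles would presumably be replaced by outputs of a queuing simulation run under each candidate parameter set.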

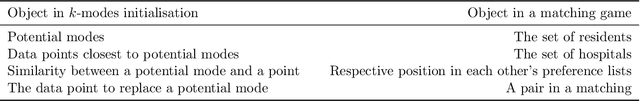

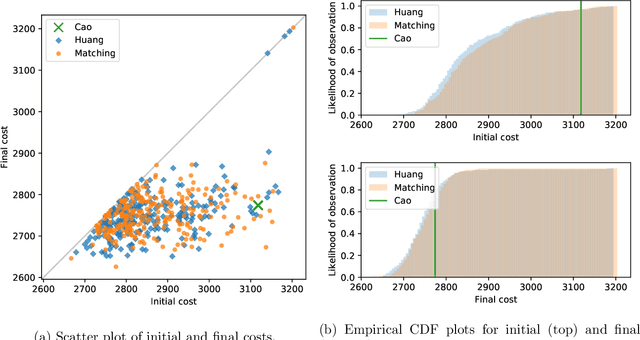

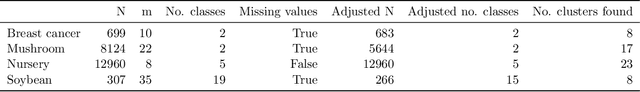

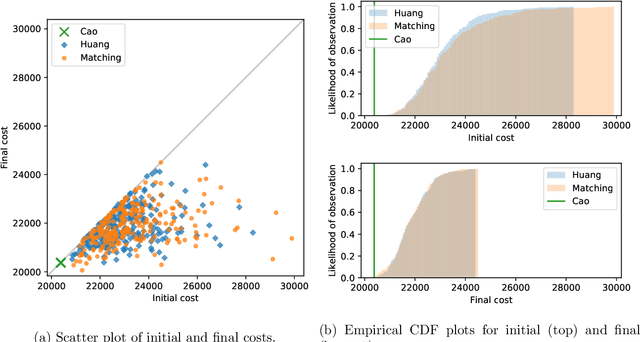

A novel initialisation based on hospital-resident assignment for the k-modes algorithm

Feb 07, 2020

Abstract: This paper presents a new way of selecting an initial solution for the k-modes algorithm that allows for a notion of mathematical fairness and makes use of the data in a way that the common initialisations from the literature do not. The method, which utilises the Hospital-Resident Assignment Problem to find the set of initial cluster centroids, is compared with the current initialisations on both benchmark datasets and a body of newly generated artificial datasets. Based on this analysis, the proposed method is shown to outperform the other initialisations in the majority of cases, especially when the number of clusters is optimised. In addition, we find that our method outperforms the leading established method specifically for low-density data.
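For readers unfamiliar with k-modes, the sketch below shows the two building blocks any initialisation feeds into: the simple matching dissimilarity between categorical points and the per-attribute mode of a cluster. It does not attempt the Hospital-Resident initialisation described in the abstract, and the function names and toy data are assumptions made for this example.

```python
from collections import Counter


def dissimilarity(a, b):
    """Simple matching dissimilarity: number of attributes that differ."""
    return sum(x != y for x, y in zip(a, b))


def mode_of(points):
    """Per-attribute most frequent value across a cluster of points."""
    return tuple(Counter(column).most_common(1)[0][0] for column in zip(*points))


def assign(points, modes):
    """Index of the nearest mode for each point."""
    return [
        min(range(len(modes)), key=lambda k: dissimilarity(point, modes[k]))
        for point in points
    ]


# Toy categorical data with two attributes per point.
points = [("a", "x"), ("a", "y"), ("b", "y"), ("a", "y")]
initial_modes = [("a", "x"), ("b", "y")]  # stand-in for any initialisation
labels = assign(points, initial_modes)
```

The k-modes algorithm alternates between `assign` and recomputing each cluster's mode with `mode_of` until the labels stop changing, which is why the choice of `initial_modes` can affect the final clustering.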

Evolutionary Dataset Optimisation: learning algorithm quality through evolution

Sep 02, 2019

Abstract: In this paper we propose a new method for learning how algorithms perform. Classically, algorithms are compared on a finite number of existing (or newly simulated) benchmark data sets based on some fixed metrics. The algorithm(s) with the smallest value of this metric are chosen to be the 'best performing'. We offer a new approach that flips this paradigm. We instead aim to gain a richer picture of the performance of an algorithm by generating artificial data through genetic evolution, the purpose of which is to create populations of data sets for which a particular algorithm performs well. These data sets can be studied to learn which attributes lead to a particular level of performance for a given algorithm. Following a detailed description of the algorithm as well as a brief description of an open source implementation, a number of numerical experiments are presented to show the performance of the method, which we call Evolutionary Dataset Optimisation.
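The idea of evolving data sets towards those on which an algorithm performs well can be sketched with a minimal genetic loop. This is an illustrative toy under stated assumptions, not the method or the open source implementation mentioned in the abstract: it uses only elitist selection and mutation (no crossover), fixed-size data sets, and a hypothetical fitness in which the "algorithm" estimates the median of a data set by its mean.

```python
import random
import statistics


def evolve(fitness, new_dataset, mutate, size=20, generations=30, keep=5, seed=0):
    """Evolve data sets so that `fitness` (lower is better) decreases."""
    rng = random.Random(seed)
    population = [new_dataset(rng) for _ in range(size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness)
        history.append(fitness(population[0]))
        parents = population[:keep]  # elitism: the best survive unchanged
        children = [mutate(rng.choice(parents), rng) for _ in range(size - keep)]
        population = parents + children
    population.sort(key=fitness)
    return population[0], history


def mean_median_gap(dataset):
    """Error of a toy algorithm that estimates the median by the mean."""
    return abs(statistics.mean(dataset) - statistics.median(dataset))


def new_dataset(rng):
    """A random data set of eight values in [0, 10]."""
    return [rng.uniform(0, 10) for _ in range(8)]


def mutate(dataset, rng):
    """Copy a data set and perturb one randomly chosen entry."""
    child = list(dataset)
    child[rng.randrange(len(child))] += rng.gauss(0, 1)
    return child


best, history = evolve(mean_median_gap, new_dataset, mutate)
```

Inspecting `best` and the surviving population then gives a hint of which data set properties suit the toy algorithm, which mirrors the kind of study the abstract describes.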
