Heng Song

A Fusion Model for Artwork Identification Based on Convolutional Neural Networks and Transformers

Feb 27, 2025

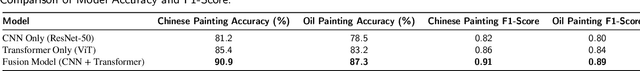

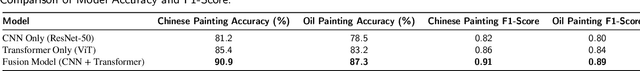

Abstract: The identification of artwork is crucial in areas like cultural heritage protection, art market analysis, and historical research. With the advancement of deep learning, Convolutional Neural Networks (CNNs) and Transformer models have become key tools for image classification. While CNNs excel at local feature extraction, they struggle with global context; Transformers are strong at capturing global dependencies but weak on fine-grained local details. To address these challenges, this paper proposes a fusion model combining CNNs and Transformers for artwork identification. The model first extracts local features using CNNs, then captures global context with a Transformer, followed by a feature fusion mechanism to enhance classification accuracy. Experiments on Chinese and oil painting datasets show the fusion model outperforms individual CNN and Transformer models, improving classification accuracy by 9.7% and 7.1%, respectively, and increasing F1 scores by 0.06 and 0.05. The results demonstrate the model's effectiveness and potential for future improvements, such as multimodal integration and architecture optimization.

A Fusion Model for Art Author Identification Based on Convolutional Neural Networks and Transformers

Feb 26, 2025

Abstract: The identification of art authors is crucial in areas like cultural heritage protection, art market analysis, and historical research. With the advancement of deep learning, Convolutional Neural Networks (CNNs) and Transformer models have become key tools for image classification. While CNNs excel at local feature extraction, they struggle with global context; Transformers are strong at capturing global dependencies but weak on fine-grained local details. To address these challenges, this paper proposes a fusion model combining CNNs and Transformers for art author identification. The model first extracts local features using CNNs, then captures global context with a Transformer, followed by a feature fusion mechanism to enhance classification accuracy. Experiments on Chinese and oil painting datasets show the fusion model outperforms individual CNN and Transformer models, improving classification accuracy by 9.7% and 7.1%, respectively, and increasing F1 scores by 0.06 and 0.05. The results demonstrate the model's effectiveness and potential for future improvements, such as multimodal integration and architecture optimization.

A Task-Driven Multi-UAV Coalition Formation Mechanism

Mar 08, 2024

Abstract: With the rapid advancement of UAV technology, UAV coalition formation has become an active research topic, and designing a task-driven multi-UAV coalition formation mechanism remains a challenging problem. Existing coalition formation mechanisms suffer from low relevance between UAVs and task requirements, which results in low overall coalition utility and unstable coalition structures. To address these problems, this paper proposes a novel multi-UAV coalition network model for collaborative task completion that considers both coalition work capacity and task-requirement relationships. The model stimulates the formation of coalitions that match task requirements by using a revenue function based on the coalition's revenue threshold. An algorithm for coalition formation based on marginal utility is then proposed. Specifically, the algorithm uses the Shapley value to achieve fair utility distribution within the coalition, evaluates coalition values based on a marginal utility preference order, and reaches a stable coalition partition within a limited number of iterations. We also prove theoretically that the algorithm has a Nash equilibrium solution. Finally, experimental results demonstrate that, compared with classical algorithms, the proposed algorithm not only forms more stable coalitions but also effectively improves the overall utility of coalitions.
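
To make the Shapley-value step in the abstract above concrete, here is a minimal sketch of how a coalition's revenue could be split by averaging each member's marginal contribution over all join orders. It is not the paper's algorithm: the UAV names, capacities, threshold, and revenue are hypothetical toy values, and the threshold-style `coalition_value` function is only an assumed stand-in for the revenue function the abstract mentions.

```python
from itertools import permutations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all join orders."""
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal utility of p when joining the members already present.
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    n_orders = factorial(len(players))
    return {p: total / n_orders for p, total in totals.items()}


# Hypothetical toy data (not from the paper): work capacity per UAV.
CAPACITY = {"uav_a": 3, "uav_b": 2, "uav_c": 2}
THRESHOLD = 5    # assumed capacity the task requires
REVENUE = 12.0   # assumed revenue paid once the threshold is met


def coalition_value(members):
    """Assumed threshold revenue: pay out only if the coalition can do the task."""
    capacity = sum(CAPACITY[m] for m in members)
    return REVENUE if capacity >= THRESHOLD else 0.0


shares = shapley_values(list(CAPACITY), coalition_value)
print(shares)
```

In this toy setting `uav_a` is needed by every coalition that meets the threshold, so it receives the largest share, and the shares sum to the revenue of the full coalition. Exact enumeration grows factorially with the number of UAVs, so larger coalitions would need sampling or another approximation.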
