Haoyou Jiang

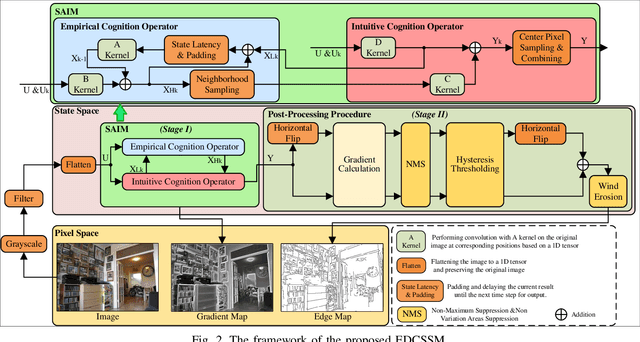

EDCSSM: Edge Detection with Convolutional State Space Model

Sep 03, 2024

Abstract: Edge detection in images is the foundation of many complex tasks in computer graphics. Due to the feature loss caused by multi-layer convolution and pooling architectures, learning-based edge detection models often produce thick edges and struggle to detect the edges of small objects in images. Inspired by state space models, this paper presents an edge detection algorithm that effectively addresses these issues. The presented algorithm obtains state space variables of the image from dual-input channels with minimal down-sampling and uses these state variables for real-time learning and memorization of image text. Additionally, to achieve precise edges while filtering out false edges, a post-processing algorithm called wind erosion has been designed to handle the binary edge map. To further increase processing speed, we have designed parallel computing circuits for the most computationally intensive parts of the presented algorithm, significantly improving computational speed and efficiency. Experimental results demonstrate that the proposed algorithm achieves precise thin edge localization and exhibits noise suppression capabilities across various types of images. With the parallel computing circuits, the algorithm achieves processing speeds exceeding 30 FPS on 5K images.
