Hamid Shamszare

User Intent to Use DeepSeek for Healthcare Purposes and their Trust in the Large Language Model: Multinational Survey Study

Feb 18, 2025

Abstract: Large language models (LLMs) increasingly serve as interactive healthcare resources, yet user acceptance remains underexplored. This study examines how ease of use, perceived usefulness, trust, and risk perception interact to shape intentions to adopt DeepSeek, an emerging LLM-based platform, for healthcare purposes. A cross-sectional survey of 556 participants from India, the United Kingdom, and the United States was conducted to measure perceptions and usage patterns. Structural equation modeling assessed both direct and indirect effects, including potential quadratic relationships. Results revealed that trust plays a pivotal mediating role: ease of use exerts a significant indirect effect on usage intentions through trust, while perceived usefulness contributes to both trust development and direct adoption. By contrast, risk perception negatively affects usage intent, emphasizing the importance of robust data governance and transparency. Notably, significant non-linear paths were observed for ease of use and risk, indicating threshold or plateau effects. The measurement model demonstrated strong reliability and validity, supported by high composite reliabilities, average variance extracted, and discriminant validity measures. These findings extend technology acceptance and health informatics research by illuminating the multifaceted nature of user adoption in sensitive domains. Stakeholders should invest in trust-building strategies, user-centric design, and risk mitigation measures to encourage sustained and safe uptake of LLMs in healthcare. Future work can employ longitudinal designs or examine culture-specific variables to further clarify how user perceptions evolve over time and across different regulatory environments. Such insights are critical for harnessing AI to enhance outcomes.

From Zero to The Hero: A Collaborative Market Aware Recommendation System for Crowd Workers

Jul 06, 2021

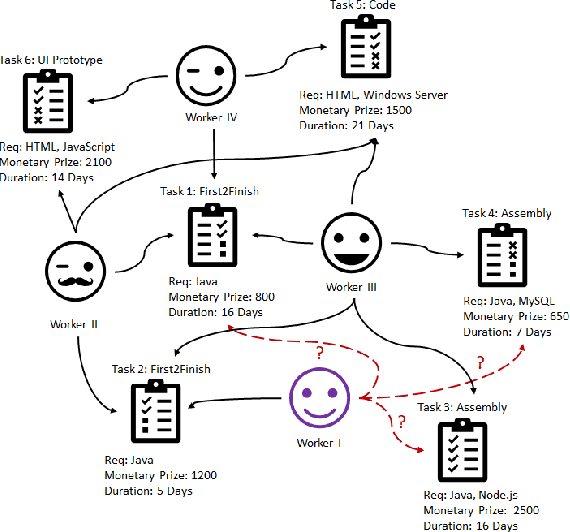

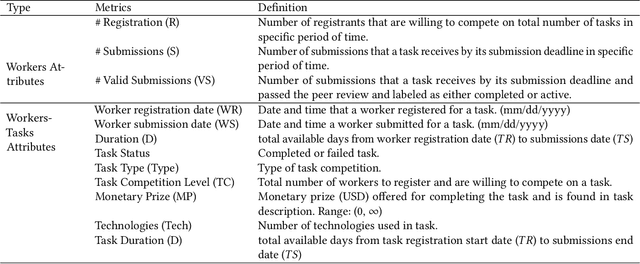

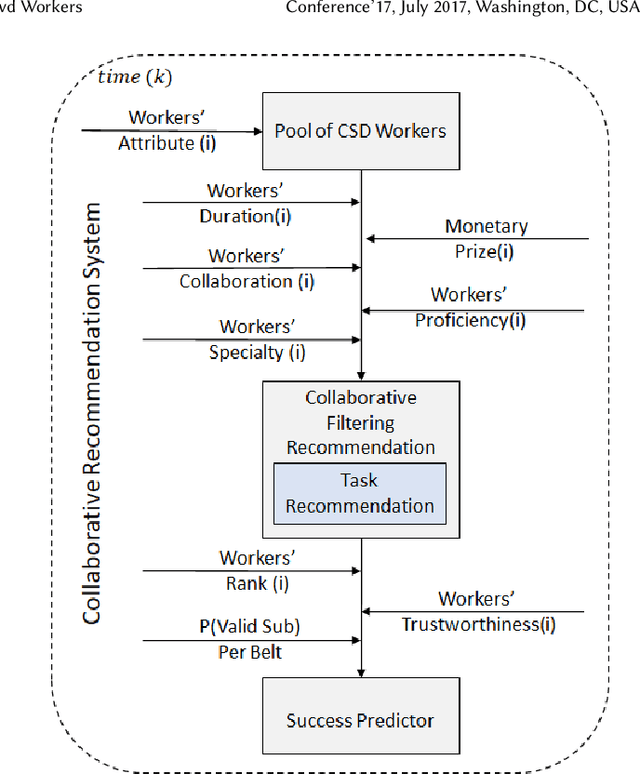

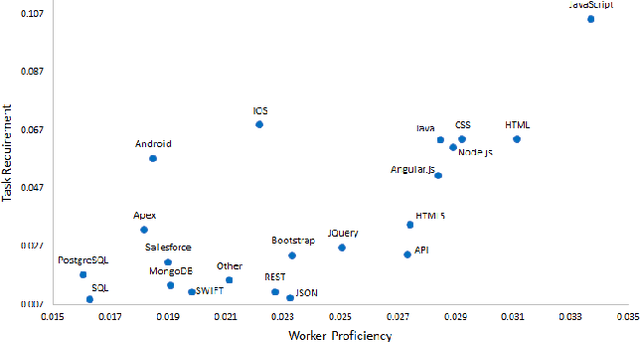

Abstract: The success of software crowdsourcing depends on an active and trustworthy pool of worker supply. The uncertainty of crowd workers' behavior makes it challenging to predict workers' success and plan accordingly. In a competitive crowdsourcing marketplace, competition for success over shared tasks adds another layer of uncertainty to crowd workers' decision-making process. A preliminary analysis of software crowd workers' behavior reveals an alarming task-dropping rate of 82.9%. These factors create the need for an automated recommendation system that gives crowdsourced software development (CSD) workers better visibility into, and predictability of, their success in the competition. To that end, this paper proposes a collaborative recommendation system for crowd workers. The proposed method uses five input metrics based on workers' collaboration history in the pool, workers' preferences in taking tasks in terms of monetary prize and duration, workers' specialty, and workers' proficiency. It then recommends the most suitable tasks for a worker to compete on, based on the worker's probability of success in each task. Experimental results on 260 active crowd workers demonstrate that, by following just the top three task recommendations ranked by success probability, workers can achieve success of up to 86%.
