Guto Leoni Santos

The Greatest Teacher, Failure is: Using Reinforcement Learning for SFC Placement Based on Availability and Energy Consumption

Oct 12, 2020

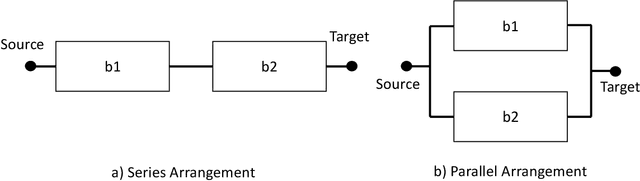

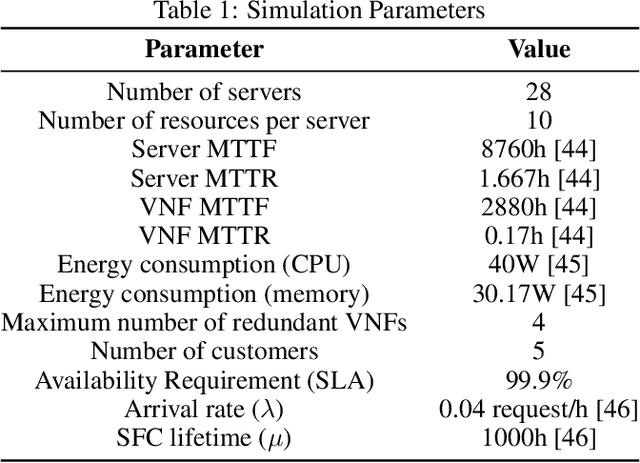

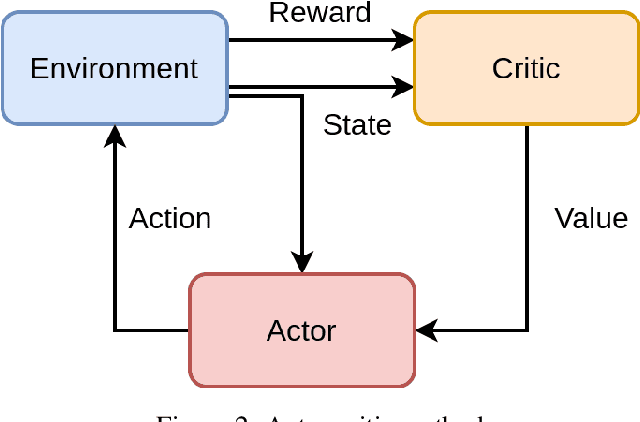

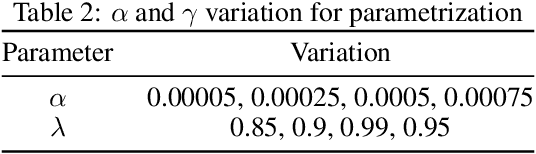

Abstract: Software defined networking (SDN) and network functions virtualisation (NFV) are making networks programmable and consequently much more flexible and agile. To meet service level agreements, achieve greater utilisation of legacy networks, deploy services faster, and reduce expenditure, telecommunications operators are deploying increasingly complex service function chains (SFCs). Notwithstanding the benefits of SFCs, increasing heterogeneity and dynamism from the cloud to the edge introduce significant SFC placement challenges, not least adding or removing network functions while maintaining availability and quality of service and minimising cost. In this paper, an availability- and energy-aware solution based on reinforcement learning (RL) is proposed for dynamic SFC placement. Two policy-aware RL algorithms, Advantage Actor-Critic (A2C) and Proximal Policy Optimisation (PPO2), are compared using simulations of a ground truth network topology based on the Rede Nacional de Ensino e Pesquisa (RNP) Network, Brazil's National Teaching and Research Network backbone. The simulation results showed that PPO2 generally outperformed both A2C and a greedy approach in terms of acceptance rate and energy consumption. A2C outperformed PPO2 only in the scenario where network servers had a greater number of computing resources.

Using Reinforcement Learning to Allocate and Manage Service Function Chains in Cellular Networks

Jul 06, 2020

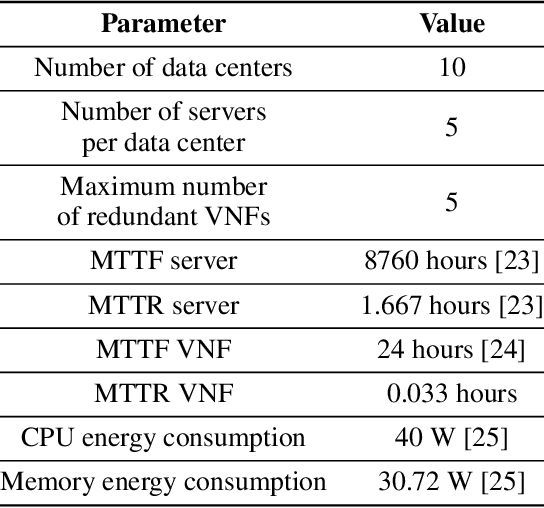

Abstract: Next generation cellular networks are expected to provide a connected society with full mobility to empower socio-economic transformation. Several other technologies will benefit from this evolution, such as the Internet of Things, smart cities, smart agriculture, vehicular networks, and healthcare applications. Each of these scenarios presents specific requirements and demands different network configurations. To deal with this heterogeneity, virtualization is a key technology. Indeed, the network function virtualization (NFV) paradigm gives the network manager flexibility, allocating resources according to demand, and reduces acquisition and operational costs. In addition, it is possible to specify an ordered set of virtual network functions (VNFs) for a given service, which is called a service function chain (SFC). However, alongside the advantages of service virtualization, network performance and availability are expected not to be affected by its use. In this paper, we propose the use of reinforcement learning to deploy an SFC for a cellular network service and to manage the operation of its VNFs. The reinforcement learning agent deploys the SFC in a scenario with distributed data centers, where the VNFs run in virtual machines on commodity servers. NFV management consists of creating, deleting, and restarting the VNFs. The main purpose is to reduce the number of lost packets while taking into account the energy consumption of the servers. We use the Proximal Policy Optimization (PPO2) algorithm to implement the agent, and preliminary results show that the agent is able to allocate the SFC and manage the VNFs, reducing the number of lost packets.
