Gopika Ajaykumar

Johns Hopkins University

An Introduction to Causal Inference Methods for Observational Human-Robot Interaction Research

Oct 31, 2023

Abstract:Quantitative methods in Human-Robot Interaction (HRI) research have primarily relied upon randomized, controlled experiments in laboratory settings. However, such experiments are not always feasible when external validity, ethical constraints, and ease of data collection are of concern. Furthermore, as consumer robots become increasingly available, increasing amounts of real-world data will be available to HRI researchers, which prompts the need for quantitative approaches tailored to the analysis of observational data. In this article, we present an alternate approach towards quantitative research for HRI researchers using methods from causal inference that can enable researchers to identify causal relationships in observational settings where randomized, controlled experiments cannot be run. We highlight different scenarios that HRI research with consumer household robots may involve to contextualize how methods from causal inference can be applied to observational HRI research. We then provide a tutorial summarizing key concepts from causal inference using a graphical model perspective and link to code examples throughout the article, which are available at https://gitlab.com/causal/causal_hri. Our work paves the way for further discussion on new approaches towards observational HRI research while providing a starting point for HRI researchers to add causal inference techniques to their analytical toolbox.

Older Adults' Task Preferences for Robot Assistance in the Home

Feb 24, 2023

Abstract:Artificial intelligence technologies that can assist with at-home tasks have the potential to help older adults age in place. Robot assistance in particular has been applied towards physical and cognitive support for older adults living independently at home. Surveys, questionnaires, and group interviews have been used to understand what tasks older adults want robots to assist them with. We build upon prior work exploring older adults' task preferences for robot assistance through field interviews situated within older adults' aging contexts. Our findings support results from prior work indicating older adults' preference for physical assistance over social and care-related support from robots and indicating their preference for control when adopting robot assistance, while highlighting the variety of individual constraints, boundaries, and needs that may influence their preferences.

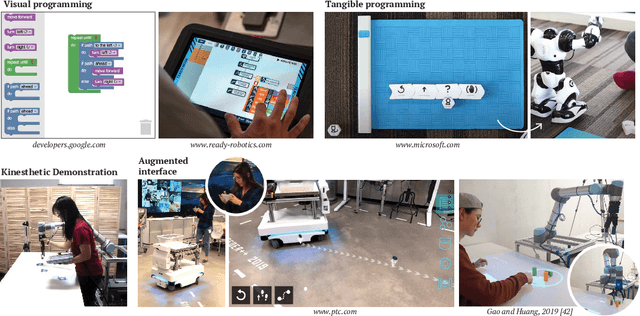

Multimodal Robot Programming by Demonstration: A Preliminary Exploration

Jan 17, 2023

Abstract:Recent years have seen a growth in the number of industrial robots working closely with end-users such as factory workers. This growing use of collaborative robots has been enabled in part by the availability of end-user robot programming methods that allow users who are not robot programmers to teach robots task actions. Programming by Demonstration (PbD) is one such end-user programming method that enables users to bypass the complexities of specifying robot motions using programming languages by instead demonstrating the desired robot behavior. Demonstrations are often provided by physically guiding the robot through the motions required for a task action in a process known as kinesthetic teaching. Kinesthetic teaching enables users to directly demonstrate task behaviors in the robot's configuration space, making it a popular end-user robot programming method for collaborative robots known for its low cognitive burden. However, because kinesthetic teaching restricts the programmer's teaching to motion demonstrations, it fails to leverage information from other modalities that humans naturally use when providing physical task demonstrations to one another, such as gaze and speech. Incorporating multimodal information into the traditional kinesthetic programming workflow has the potential to enhance robot learning by highlighting critical aspects of a program, reducing ambiguity, and improving situational awareness for the robot learner, and can provide insight into the human programmer's intent and difficulties. In this extended abstract, we describe a preliminary study on multimodal kinesthetic demonstrations and future directions for using multimodal demonstrations to enhance robot learning and user programming experiences.

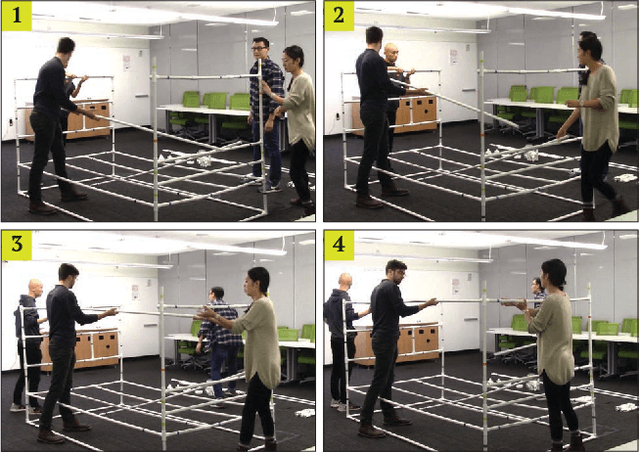

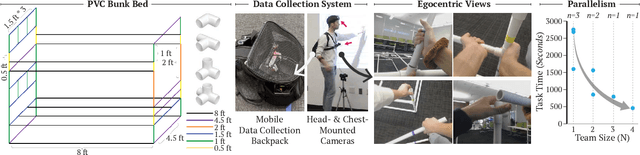

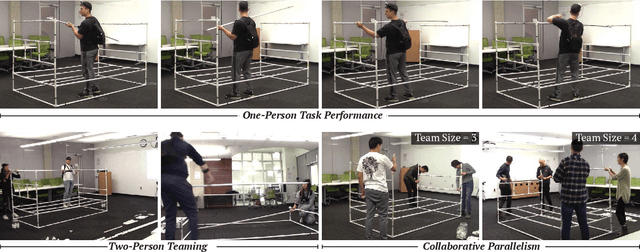

FACT: A Full-body Ad-hoc Collaboration Testbed for Modeling Complex Teamwork

Jun 07, 2021

Abstract:Robots are envisioned to work alongside humans in applications ranging from in-home assistance to collaborative manufacturing. Research on human-robot collaboration (HRC) has helped develop various aspects of social intelligence necessary for robots to participate in effective, fluid collaborations with humans. However, HRC research has focused on dyadic, structured, and minimal collaborations between humans and robots that may not fully represent the large scale and emergent nature of more complex, unstructured collaborative activities. Thus, there remains a need for shared testbeds, datasets, and evaluation metrics that researchers can use to better model natural, ad-hoc human collaborative behaviors and develop robot capabilities intended for large-scale, emergent collaborations. We present one such shared resource: FACT (Full-body Ad-hoc Collaboration Testbed), an openly accessible testbed for researchers to obtain an expansive view of the individual and team-based behaviors involved in complex, co-located teamwork. We detail observations from a preliminary exploration with teams of various sizes and discuss potential research questions that may be investigated using the testbed. Our goal is for FACT to be an initial resource that supports a more holistic investigation of human-robot collaboration.

A Survey on End-User Robot Programming

May 04, 2021

Abstract:As robots interact with a broader range of end-users, end-user robot programming has helped democratize robot programming by empowering end-users who may not have experience in robot programming to customize robots to meet their individual contextual needs. This article surveys work on end-user robot programming, with a focus on end-user program specification. It describes the primary domains, programming phases, and design choices represented by the end-user robot programming literature. The survey concludes by highlighting open directions for further investigation to enhance and widen the reach of end-user robot programming systems.
