Faysal Mahmud

Unmasking Deepfake Faces from Videos Using An Explainable Cost-Sensitive Deep Learning Approach

Dec 17, 2023

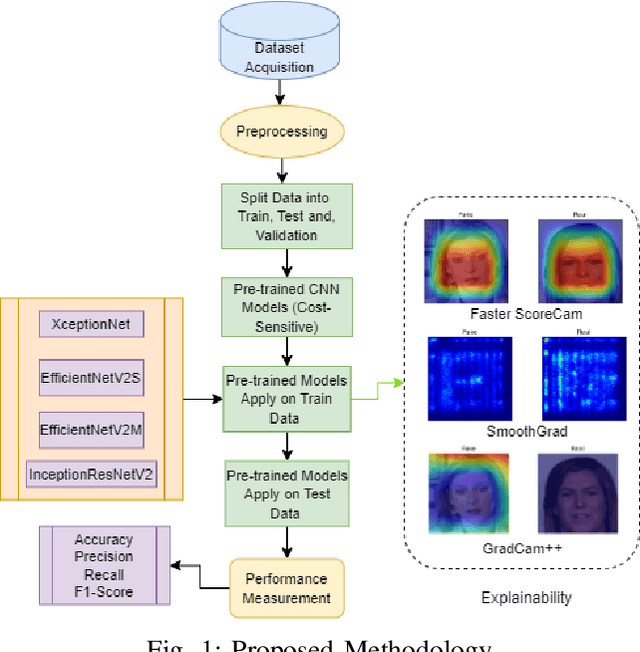

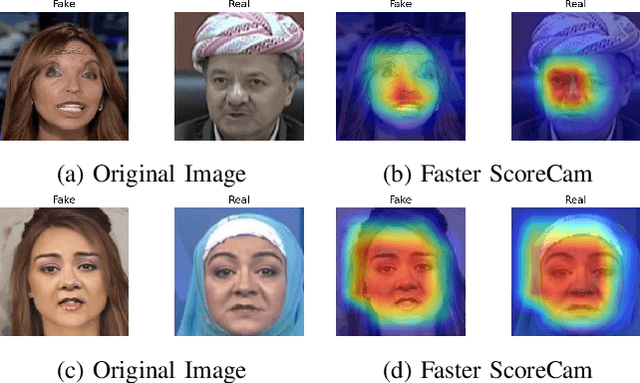

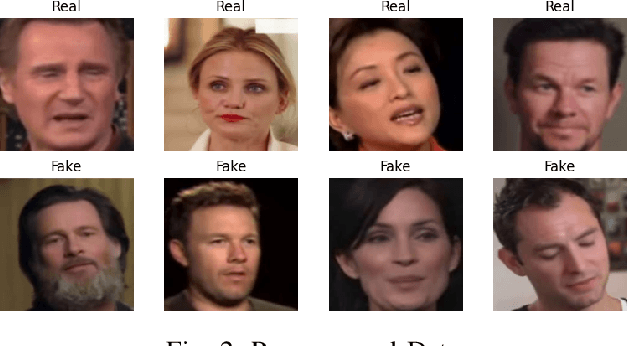

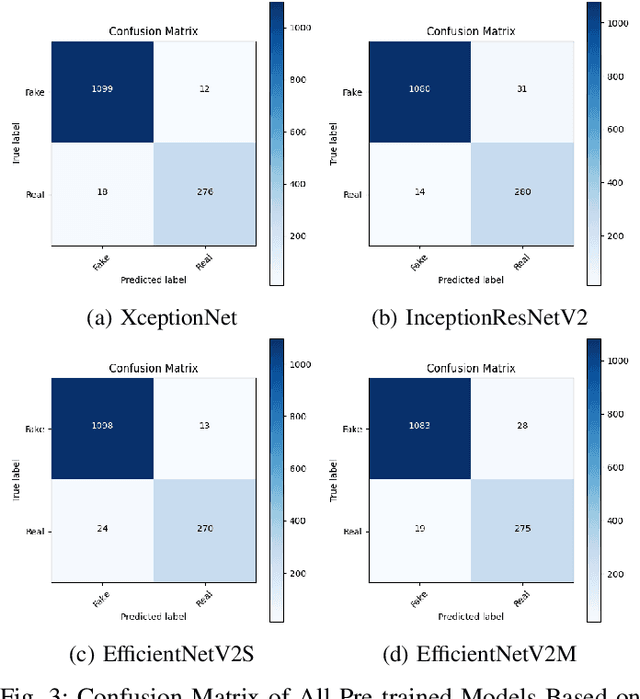

Abstract: Deepfake technology is now widely used, raising serious concerns about the authenticity of digital media and making trustworthy deepfake face recognition techniques more urgent than ever. This study employs a resource-efficient and transparent cost-sensitive deep learning method to detect deepfake faces in videos. To build a reliable deepfake detection system, four pre-trained Convolutional Neural Network (CNN) models were used: XceptionNet, InceptionResNetV2, EfficientNetV2S, and EfficientNetV2M. The FaceForensics++ and CelebDf-V2 benchmark datasets were used to assess the performance of our method. To process video data efficiently, key frame extraction was used as a feature extraction technique. Our main contribution is to show the models' adaptability and effectiveness in correctly identifying deepfake faces in videos. Furthermore, a cost-sensitive neural network method was applied to address the dataset imbalance that frequently arises in deepfake detection. With the proposed methodology, the XceptionNet model achieved the highest accuracy, 98%, on the CelebDf-V2 dataset, whereas the InceptionResNetV2 model achieved an accuracy of 94% on the FaceForensics++ dataset. Source Code: https://github.com/Faysal-MD/Unmasking-Deepfake-Faces-from-Videos-An-Explainable-Cost-Sensitive-Deep-Learning-Approach-IEEE2023
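
To illustrate what a cost-sensitive treatment of class imbalance can look like, here is a minimal sketch in plain Python. It is not taken from the paper or its repository. It assumes the common "balanced" weighting heuristic (weight = n_samples / (n_classes * class_count)) and a weighted binary cross-entropy; the function names and the toy labels are hypothetical.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Assumed heuristic: weight = n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def weighted_binary_cross_entropy(y_true, y_prob, weights, eps=1e-7):
    """Mean binary cross-entropy where each sample is scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        total += weights[y] * loss
    return total / len(y_true)


# Hypothetical imbalanced toy set: 8 fake frames (label 1), 2 real frames (label 0).
labels = [1] * 8 + [0] * 2
weights = balanced_class_weights(labels)

# The same confident mistake costs more on the minority (real) class.
loss_minority_error = weighted_binary_cross_entropy([0], [0.9], weights)
loss_majority_error = weighted_binary_cross_entropy([1], [0.1], weights)
```

With these toy labels the minority class receives a weight four times larger than the majority class, so errors on real frames dominate the loss. In a deep learning framework the same weights would typically be passed to the training routine rather than applied by hand.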

An Interpretable Deep Learning Approach for Skin Cancer Categorization

Dec 17, 2023

Abstract: Skin cancer is a serious worldwide health issue, and precise, early detection is essential for better patient outcomes and effective treatment. In this research, we use modern deep learning methods and explainable artificial intelligence (XAI) approaches to address the problem of skin cancer detection. To categorize skin lesions, we employ four cutting-edge pre-trained models: XceptionNet, EfficientNetV2S, InceptionResNetV2, and EfficientNetV2M. Image augmentation approaches are used to reduce class imbalance and improve the generalization capabilities of our models. XAI makes our models' decision-making process easier to interpret. In the medical field, interpretability is essential to establish credibility and to ease the integration of AI-driven diagnostic technologies into clinical workflows. We found the XceptionNet architecture to be the best-performing model, achieving an accuracy of 88.72%. Our study shows how deep learning and XAI can improve skin cancer diagnosis, laying the groundwork for future developments in medical image analysis. These technologies' ability to support early and accurate detection could enhance patient care, lower healthcare costs, and raise survival rates for those with skin cancer. Source Code: https://github.com/Faysal-MD/An-Interpretable-Deep-Learning?Approach-for-Skin-Cancer-Categorization-IEEE2023
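
As a rough illustration of how augmentation can reduce class imbalance, the sketch below oversamples minority classes with simple geometric transforms until every class matches the largest one. It is an assumption-laden toy, not the authors' pipeline: images are plain nested lists, and the transforms, function names, and class labels are hypothetical.

```python
import random
from collections import defaultdict


def hflip(img):
    """Mirror each row (horizontal flip)."""
    return [row[::-1] for row in img]


def vflip(img):
    """Reverse the row order (vertical flip)."""
    return [list(row) for row in img[::-1]]


def rot90(img):
    """Rotate 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def balance_by_augmentation(samples, seed=0):
    """Add augmented copies of minority-class images until all classes are equal in size."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for img, label in samples:
        by_class[label].append(img)
    target = max(len(imgs) for imgs in by_class.values())
    transforms = [hflip, vflip, rot90]
    balanced = list(samples)
    for label, imgs in by_class.items():
        for _ in range(target - len(imgs)):
            source = rng.choice(imgs)
            balanced.append((rng.choice(transforms)(source), label))
    return balanced


# Hypothetical toy data: three "benign" images, one "melanoma" image (2x2 pixels each).
toy = [([[1, 2], [3, 4]], "benign")] * 3 + [([[5, 6], [7, 8]], "melanoma")]
balanced = balance_by_augmentation(toy)
per_class = {lbl: sum(1 for _, l in balanced if l == lbl) for lbl in ("benign", "melanoma")}
```

Real pipelines usually apply such transforms on the fly with a framework's augmentation utilities; the point here is only that augmented copies are generated preferentially for under-represented classes.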
