Evgeny Hershkovitch Neiterman

LayerDropBack: A Universally Applicable Approach for Accelerating Training of Deep Networks

Dec 23, 2024

Abstract: Training very deep convolutional networks is challenging, requiring significant computational resources and time. Existing acceleration methods often depend on specific architectures or require network modifications. We introduce LayerDropBack (LDB), a simple yet effective method to accelerate training across a wide range of deep networks. LDB introduces randomness only in the backward pass, maintaining the integrity of the forward pass, guaranteeing that the same network is used during both training and inference. LDB can be seamlessly integrated into the training process of any model without altering its architecture, making it suitable for various network topologies. Our extensive experiments across multiple architectures (ViT, Swin Transformer, EfficientNet, DLA) and datasets (CIFAR-100, ImageNet) show significant training time reductions of 16.93% to 23.97%, while preserving or even enhancing model accuracy. Code is available at https://github.com/neiterman21/LDB.
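
The abstract does not spell out how the backward-pass randomness is applied, so the following is only a minimal sketch of one plausible reading: on each iteration a random subset of layers is excluded from the weight update, while the forward pass still uses every layer. The helper names `sample_frozen_layers` and `train_step`, the `drop_rate` parameter, and the scalar "one weight per layer" model are all assumptions made for illustration and are not taken from the LDB code.

```python
import random


def sample_frozen_layers(num_layers, drop_rate, rng):
    """Pick layers whose updates are skipped this iteration (hypothetical helper)."""
    return {i for i in range(num_layers) if rng.random() < drop_rate}


def train_step(weights, grads, frozen, lr=0.1):
    """Plain SGD on every layer except the frozen ones.

    Only the update is masked here; the forward computation that
    produced `grads` is assumed to have used all layers unchanged.
    """
    return [
        w if i in frozen else w - lr * g
        for i, (w, g) in enumerate(zip(weights, grads))
    ]


rng = random.Random(0)
weights = [1.0, 1.0, 1.0, 1.0]   # one toy scalar weight per layer
grads = [0.5, 0.5, 0.5, 0.5]     # toy gradients, assumed already computed
frozen = sample_frozen_layers(len(weights), drop_rate=0.5, rng=rng)
new_weights = train_step(weights, grads, frozen)
```

In this toy version the gradients are already given, so nothing is saved. In a real framework any speed-up would presumably come from not computing gradients for the excluded layers at all.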

ChannelDropBack: Forward-Consistent Stochastic Regularization for Deep Networks

Nov 23, 2024

Abstract: Incorporating stochasticity into the training process of deep convolutional networks is a widely used technique to reduce overfitting and improve regularization. Existing techniques often require modifying the network architecture by adding specialized layers, are effective only for specific network topologies or layer types (linear or convolutional), and result in a trained model that differs from the deployed one. We present ChannelDropBack, a simple stochastic regularization approach that introduces randomness only into the backward information flow, leaving the forward pass intact. ChannelDropBack randomly selects a subset of channels within the network during the backpropagation step and applies weight updates only to them. As a consequence, it can be integrated into the training process of any model and layer type without changing the architecture, making it applicable to various network topologies, and the exact same network is used during training and inference. Experimental evaluations validate the effectiveness of our approach, demonstrating improved accuracy on popular datasets and models, including ImageNet and ViT. Code is available at https://github.com/neiterman21/ChannelDropBack.git.
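
The channel-selection step described above can be illustrated with a small sketch. It assumes a single layer whose weights are stored as one row per output channel, plain SGD, and a `keep_ratio` parameter controlling how many channels are updated. The function name `channel_dropback_step` and these parameters are hypothetical and are not taken from the ChannelDropBack repository.

```python
import random


def channel_dropback_step(weights, grads, keep_ratio, rng, lr=0.1):
    """Update only a random subset of channels (illustrative sketch).

    weights, grads: lists of rows, one row per output channel.
    keep_ratio: assumed fraction of channels that receive an update.
    Returns the new weights and the set of selected channel indices.
    """
    num_channels = len(weights)
    num_keep = max(1, round(keep_ratio * num_channels))
    selected = set(rng.sample(range(num_channels), num_keep))
    updated = [
        [w - lr * g for w, g in zip(w_row, g_row)] if c in selected
        else list(w_row)  # unselected channels keep their weights
        for c, (w_row, g_row) in enumerate(zip(weights, grads))
    ]
    return updated, selected


rng = random.Random(0)
weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]  # 4 channels
grads = [[1.0, 1.0]] * 4                                    # toy gradients
new_weights, selected = channel_dropback_step(weights, grads, 0.5, rng)
```

Because only the update is masked, the forward pass sees the full set of weights on every iteration, which matches the abstract's point that the trained and deployed networks are identical.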

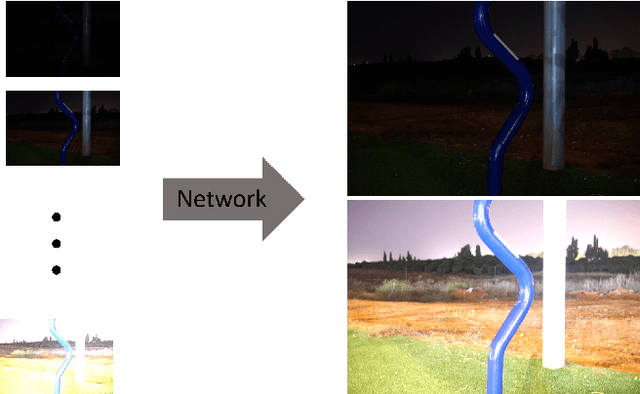

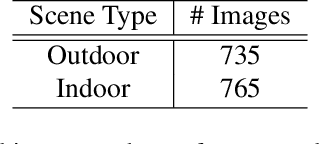

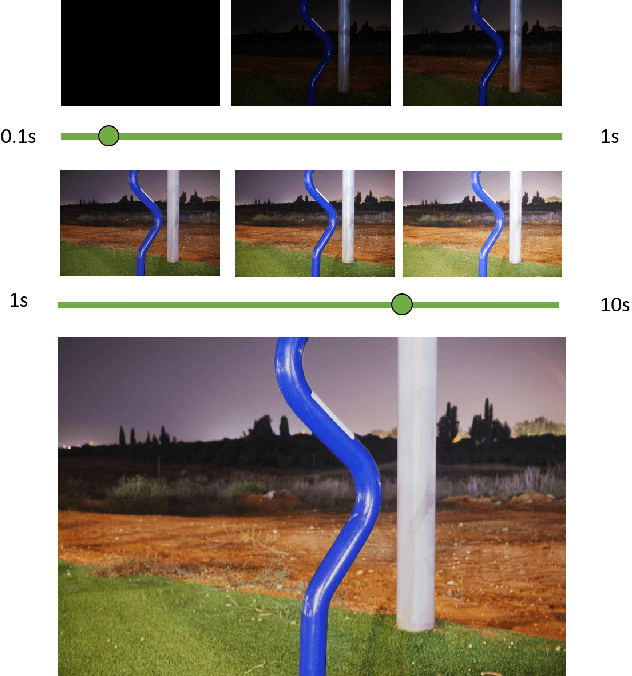

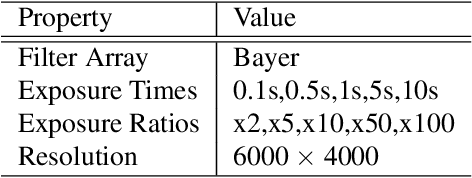

CEL-Net: Continuous Exposure for Extreme Low-Light Imaging

Dec 07, 2020

Abstract: Deep learning methods for enhancing dark images learn a mapping from input images to output images with pre-determined discrete exposure levels. At inference time, however, the input and optimal output exposure levels of a given image often differ from those seen during training. As a result, the enhanced image may suffer from visual distortions, such as low contrast or dark areas. We address this issue by introducing a deep learning model that can continuously generalize at inference time to unseen exposure levels without retraining. To this end, we introduce a dataset of 1500 raw images captured in both outdoor and indoor scenes, with five different exposure levels and various camera parameters. Using the dataset, we develop a model for extreme low-light imaging that can continuously tune the input or output exposure level of the image to an unseen one. We investigate the properties of our model and validate its performance, showing promising results.
