Emiliano Schena

BIOWISH: Biometric Recognition using Wearable Inertial Sensors detecting Heart Activity

Oct 18, 2022

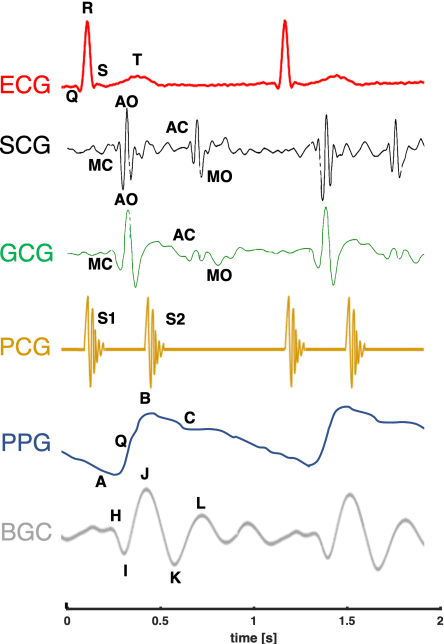

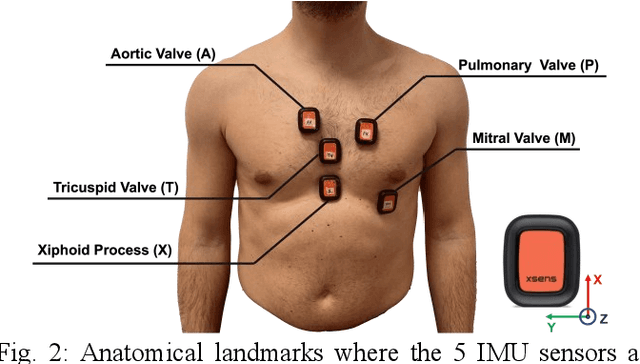

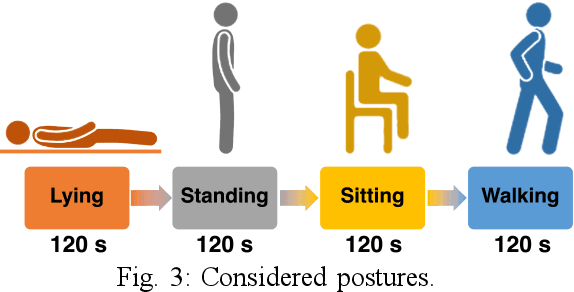

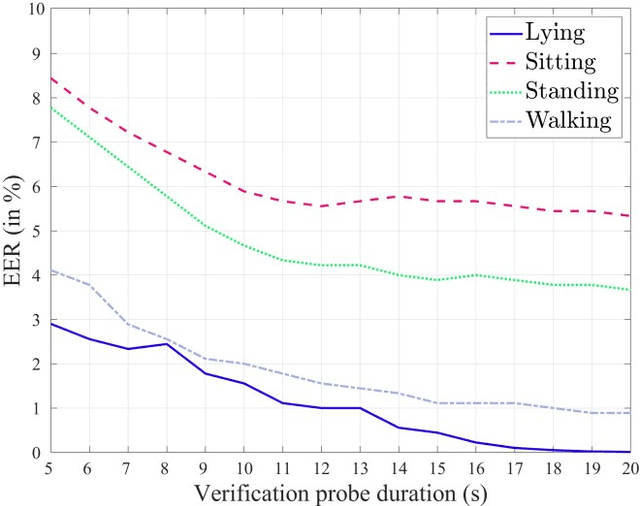

Abstract: Wearable devices are increasingly used, thanks to the wide set of applications that exploit their ability to monitor physical activity and health-related parameters. Their use has recently been proposed for biometric recognition, leveraging the uniqueness of the recorded traits to generate discriminative identifiers. Most studies on this topic have considered signals derived from cardiac activity, detecting it mainly through electrical measurements using electrocardiography, or optical recordings using photoplethysmography. In this paper we instead propose a BIOmetric recognition approach using Wearable Inertial Sensors detecting Heart activity (BIOWISH). In more detail, we investigate the feasibility of exploiting mechanical measurements obtained through seismocardiography and gyrocardiography to recognize a person. Several feature extractors and classifiers, including deep learning techniques relying on transfer learning and siamese training, are employed to derive distinctive characteristics from the considered signals and to differentiate between legitimate and impostor subjects. A multi-session database, comprising acquisitions taken from subjects performing different activities, is employed to perform experimental tests simulating a verification system. The obtained results show that identifiers derived from measurements of chest vibrations, collected by wearable inertial sensors, could be employed to guarantee high recognition performance, even when considering short-time recordings.

Functional mimicry of Ruffini receptors with Fiber Bragg Gratings and Deep Neural Networks enables a bio-inspired large-area tactile sensitive skin

Mar 23, 2022

Abstract: Collaborative robots are expected to physically interact with humans in daily living and in the workplace, including industrial and healthcare settings. A related key enabling technology is tactile sensing, which currently requires addressing the outstanding scientific challenge of simultaneously detecting contact location and intensity by means of soft conformable artificial skins that adapt over large areas to the complex curved geometries of robot embodiments. In this work, the development of a large-area sensitive soft skin with a curved geometry is presented, allowing for robot total-body coverage through modular patches. The biomimetic skin consists of a soft polymeric matrix, resembling a human forearm, embedded with photonic Fiber Bragg Grating (FBG) transducers, which partially mimic Ruffini mechanoreceptor functionality with diffuse, overlapping receptive fields. A Convolutional Neural Network deep learning algorithm and a multigrid Neuron Integration Process were implemented to decode the FBG sensor outputs for inferring contact force magnitude and localization through the skin surface. The results show median errors of 35 mN (IQR = 56 mN) and 3.2 mm (IQR = 2.3 mm) for force and localization predictions, respectively. Demonstrations with an anthropomorphic arm pave the way towards AI-based integrated skins enabling safe human-robot cooperation via machine intelligence.
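The abstract reports errors as a median with an interquartile range (IQR). As a minimal sketch of how such a summary could be computed from a list of per-sample absolute errors, the Python snippet below uses the standard `statistics` module. The function name `median_and_iqr` and the sample values are hypothetical and purely illustrative; they are not taken from the paper, and the quartile convention the authors used is not stated in the abstract (the "inclusive" method is an assumption).

```python
import statistics


def median_and_iqr(errors):
    """Return (median, IQR) for a sequence of absolute errors.

    Quartiles use the "inclusive" method, which is an assumption;
    other quartile conventions give slightly different values.
    """
    q1, q2, q3 = statistics.quantiles(errors, n=4, method="inclusive")
    return q2, q3 - q1


# Hypothetical absolute force errors in mN (illustrative, not from the paper)
force_errors_mn = [10.0, 20.0, 30.0, 40.0, 50.0]
median_err, iqr_err = median_and_iqr(force_errors_mn)
print(f"median = {median_err} mN, IQR = {iqr_err} mN")
```

The median and IQR are often preferred over mean and standard deviation when error distributions are skewed, since both are robust to outliers.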
