Edwin Salcedo

High-Resolution Water Sampling via a Solar-Powered Autonomous Surface Vehicle

Dec 10, 2025

Abstract: Accurate water quality assessment requires spatially resolved sampling, yet most unmanned surface vehicles (USVs) can collect only a limited number of samples or rely on single-point sensors with poor representativeness. This work presents a solar-powered, fully autonomous USV featuring a novel syringe-based sampling architecture capable of acquiring 72 discrete, contamination-minimized water samples per mission. The vehicle incorporates a ROS 2 autonomy stack with GPS-RTK navigation, LiDAR and stereo-vision obstacle detection, Nav2-based mission planning, and long-range LoRa supervision, enabling dependable execution of sampling routes in unstructured environments. The platform integrates a behavior-tree autonomy architecture adapted from Nav2, enabling mission-level reasoning and perception-aware navigation. A modular 6x12 sampling system, controlled by distributed micro-ROS nodes, provides deterministic actuation, fault isolation, and rapid module replacement, achieving spatial coverage beyond previously reported USV-based samplers. Field trials in Achocalla Lagoon (La Paz, Bolivia) demonstrated 87% waypoint accuracy, stable autonomous navigation, and accurate physicochemical measurements (temperature, pH, conductivity, total dissolved solids) comparable to manually collected references. These results demonstrate that the platform enables reliable high-resolution sampling and autonomous mission execution, providing a scalable solution for aquatic monitoring in remote environments.

Low-Cost Machine Vision System for Sorting Green Lentils (Lens Culinaris) Based on Pneumatic Ejection and Deep Learning

Jul 28, 2025

Abstract: This paper presents the design, development, and evaluation of a dynamic grain classification system for green lentils (Lens Culinaris), which leverages computer vision and pneumatic ejection. The system integrates a YOLOv8-based detection model that identifies and locates grains on a conveyor belt, together with a second YOLOv8-based classification model that categorises grains into six classes: Good, Yellow, Broken, Peeled, Dotted, and Reject. This two-stage YOLOv8 pipeline enables accurate, real-time, multi-class categorisation of lentils, implemented on a low-cost, modular hardware platform. The pneumatic ejection mechanism separates defective grains, while an Arduino-based control system coordinates real-time interaction between the vision system and mechanical components. The system operates effectively at a conveyor speed of 59 mm/s, achieving a grain separation accuracy of 87.2%. Despite a limited processing rate of 8 grams per minute, the prototype demonstrates the potential of machine vision for grain sorting and provides a modular foundation for future enhancements.

Predicting Surgical Safety Margins in Osteosarcoma Knee Resections: An Unsupervised Approach

May 11, 2025

Abstract: According to the Pan American Health Organization, the number of cancer cases in Latin America was estimated at 4.2 million in 2022 and is projected to rise to 6.7 million by 2045. Osteosarcoma, one of the most common and deadly bone cancers affecting young people, is difficult to detect due to its unique texture and intensity. Surgical removal of osteosarcoma requires precise safety margins to ensure complete resection while preserving healthy tissue. Therefore, this study proposes a method for estimating the confidence interval of surgical safety margins in osteosarcoma surgery around the knee. The proposed approach uses MRI and X-ray data from open-source repositories, digital processing techniques, and unsupervised learning algorithms (such as k-means clustering) to define tumor boundaries. Experimental results highlight the potential for automated, patient-specific determination of safety margins.
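As a rough illustration of how k-means clustering can separate image regions by intensity, the sketch below clusters a handful of made-up grayscale values into two groups. It is not the paper's pipeline: the function name `kmeans_1d`, the evenly spaced centroid initialisation, and the sample pixel values are all assumptions chosen for the example.

```python
def kmeans_1d(values, k=2, iters=50):
    """Cluster scalar intensities with plain k-means (illustrative only)."""
    lo, hi = min(values), max(values)
    # Assumed initialisation: spread the centroids evenly over the value range.
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        # Assignment step: each value joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Update step: each centroid moves to the mean of its members.
        updated = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            updated.append(sum(members) / len(members) if members else centroids[c])
        if updated == centroids:
            break  # converged
        centroids = updated
    return labels, centroids


# Hypothetical intensities: four dark (background) and four bright (lesion-like) pixels.
pixels = [12, 15, 10, 14, 200, 210, 205, 198]
labels, centroids = kmeans_1d(pixels, k=2)
print(labels)     # [0, 0, 0, 0, 1, 1, 1, 1]
print(centroids)  # [12.75, 203.25]
```

On a real scan the same idea would be applied to every pixel (often with extra features such as texture), and the boundary between clusters would give a candidate tumor outline.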

Graph Learning-based Regional Heavy Rainfall Prediction Using Low-Cost Rain Gauges

Dec 22, 2024

Abstract: Accurate and timely prediction of heavy rainfall events is crucial for effective flood risk management and disaster preparedness. By monitoring, analysing, and evaluating rainfall data at a local level, it is not only possible to take effective actions to prevent any severe climate variation but also to improve the planning of surface and underground hydrological resources. However, developing countries often lack the weather stations to collect data continuously due to the high cost of installation and maintenance. In light of this, the contribution of the present paper is twofold: first, we propose a low-cost IoT system for automatic recording, monitoring, and prediction of rainfall in rural regions. Second, we propose a novel approach to regional heavy rainfall prediction by implementing graph neural networks (GNNs), which are particularly well-suited for capturing the complex spatial dependencies inherent in rainfall patterns. The proposed approach was tested using a historical dataset spanning 72 months, with daily measurements, and experimental results demonstrated the effectiveness of the proposed method in predicting heavy rainfall events, making this approach particularly attractive for regions with limited resources or where traditional weather radar or station coverage is sparse.

Distributed Intelligent Video Surveillance for Early Armed Robbery Detection based on Deep Learning

Oct 13, 2024

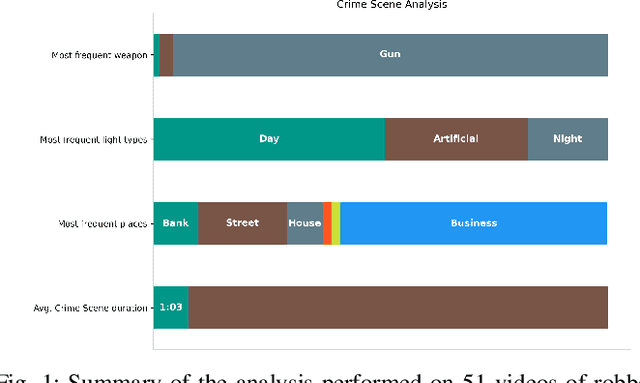

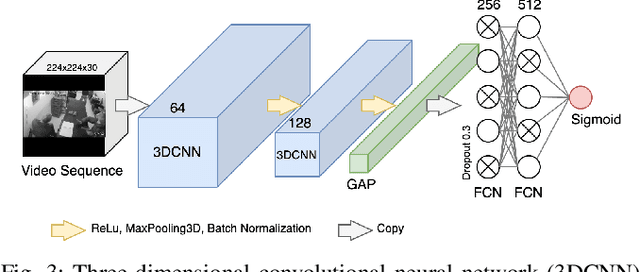

Abstract: Low employment rates in Latin America have contributed to a substantial rise in crime, prompting the emergence of new criminal tactics. For instance, "express robbery" has become a common crime committed by armed thieves, in which they drive motorcycles and assault people in public in a matter of seconds. Recent research has approached the problem by embedding weapon detectors in surveillance cameras; however, these systems are prone to false positives if no counterpart confirms the event. In light of this, we present a distributed IoT system that integrates a computer vision pipeline and object detection capabilities into multiple end-devices, constantly monitoring for the presence of firearms and sharp weapons. Once a weapon is detected, the end-device sends a series of frames to a cloud server that implements a 3DCNN to classify the scene as either a robbery or a normal situation, thus minimizing false positives. The deep learning process to train and deploy weapon detection models uses a custom dataset with 16,799 images of firearms and sharp weapons. The best-performing model, YOLOv5s, optimized using TensorRT, achieved a final mAP of 0.87 running at 4.43 FPS. Additionally, the 3DCNN demonstrated 0.88 accuracy in detecting abnormal situations. Extensive experiments validate that the proposed system significantly reduces false positives while autonomously monitoring multiple locations in real-time.

Edge AI-Based Vein Detector for Efficient Venipuncture in the Antecubital Fossa

Oct 27, 2023

Abstract: Assessing the condition and visibility of veins is a crucial step before obtaining intravenous access in the antecubital fossa, which is a common procedure to draw blood or administer intravenous therapies (IV therapies). Even though medical practitioners are highly skilled at intravenous cannulation, they usually struggle to perform the procedure in patients with poorly visible veins due to fluid retention, age, excess weight, dark skin tone, or diabetes. Recently, several investigations proposed combining Near Infrared (NIR) imaging and deep learning (DL) techniques for forearm vein segmentation. Although they have demonstrated compelling results, their use has been rather limited owing to the portability and precision requirements to perform venipuncture. In this paper, we aim to contribute to bridging this gap using three strategies. First, we introduce a new NIR-based forearm vein segmentation dataset of 2,016 labelled images collected from 1,008 subjects with poorly visible veins. Second, we propose a modified U-Net architecture that locates veins specifically in the antecubital fossa region of the examined patient. Finally, a compressed version of the proposed architecture was deployed inside a bespoke, portable vein finder device after testing four common embedded microcomputers and four common quantization modalities. Experimental results showed that the model compressed with Dynamic Range Quantization and deployed on a Raspberry Pi 4B board offered the best balance between execution time and precision, running at 5.14 FPS with an Intersection over Union (IoU) of 0.957. These results show promising performance inside a resource-restricted low-cost device.
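For readers unfamiliar with the IoU metric quoted above, the following minimal sketch computes it for two binary segmentation masks. The function name `iou` and the tiny 2x3 masks are hypothetical and serve only to show the arithmetic; they are not taken from the paper.

```python
def iou(pred, target):
    """Intersection over Union for two same-sized binary masks (lists of rows)."""
    inter = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                inter += 1  # pixel marked as vein in both masks
            if p or t:
                union += 1  # pixel marked as vein in at least one mask
    # Assumed convention: two empty masks count as a perfect match.
    return inter / union if union else 1.0


# Hypothetical predicted and ground-truth masks (1 = vein, 0 = background).
predicted = [[0, 1, 1],
             [0, 1, 0]]
ground_truth = [[0, 1, 0],
                [0, 1, 1]]
score = iou(predicted, ground_truth)
print(score)  # 0.5 (2 overlapping pixels out of 4 marked in either mask)
```

An IoU of 1.0 means the predicted mask coincides exactly with the reference; values close to 1.0, such as the 0.957 reported here, indicate that almost all marked pixels are shared.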
