Dominik Seuss

Multi-label Learning with Missing Values using Combined Facial Action Unit Datasets

Aug 17, 2020

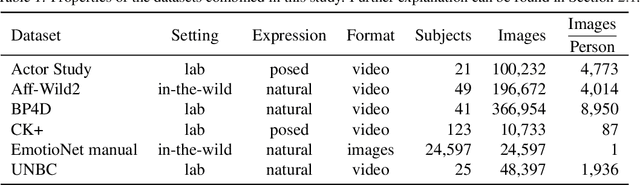

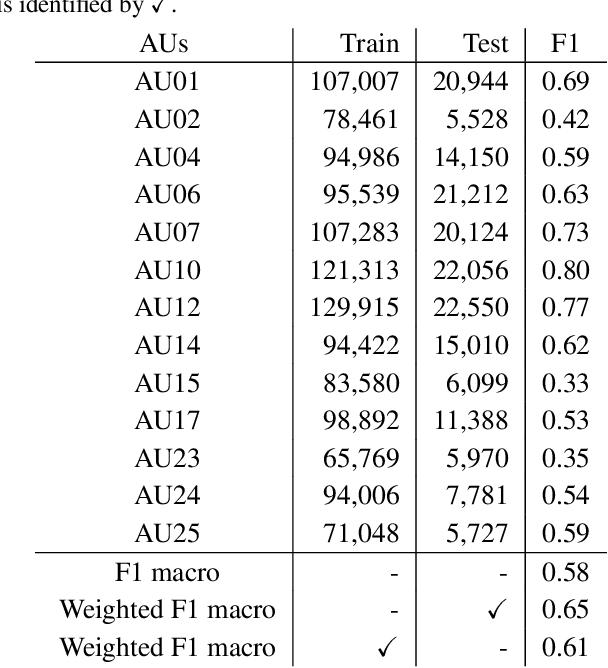

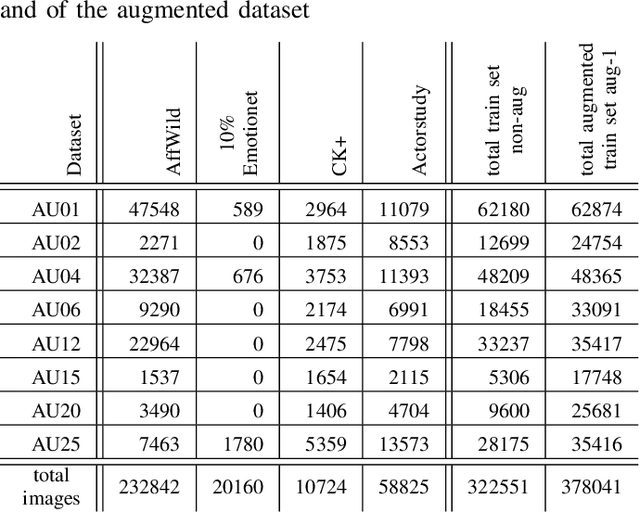

Abstract: Facial action units allow an objective, standardized description of facial micro movements which can be used to describe emotions in human faces. Annotating data for action units is an expensive and time-consuming task, which leads to a scarce data situation. By combining multiple datasets from different studies, the amount of training data for a machine learning algorithm can be increased in order to create robust models for automated, multi-label action unit detection. However, every study annotates different action units, leading to a large number of missing labels in a combined database. In this work, we examine this challenge and present our approach to create a combined database and an algorithm capable of learning in the presence of missing labels without inferring their values. Our approach shows competitive performance compared to recent competitions in action unit detection.
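One common way to learn from partially annotated multi-label data without inferring the missing values is to mask them out of the loss. The following is a minimal sketch of that idea, not the authors' algorithm: it assumes missing labels are encoded as NaN, and the function name `masked_bce` is hypothetical.

```python
import numpy as np

def masked_bce(probs, labels, eps=1e-7):
    """Mean binary cross-entropy over annotated labels only.

    probs:  (n_samples, n_units) predicted probabilities in [0, 1].
    labels: (n_samples, n_units) with 1.0 or 0.0 for annotated action units
            and NaN where the source dataset did not annotate the unit
            (the NaN encoding is an assumption of this sketch).
    """
    probs = np.clip(np.asarray(probs, dtype=float), eps, 1.0 - eps)
    labels = np.asarray(labels, dtype=float)
    mask = ~np.isnan(labels)          # True where a label exists
    if not mask.any():
        return 0.0                    # nothing annotated, nothing to learn from
    y = np.where(mask, labels, 0.0)   # placeholder value, excluded below
    per_label = -(y * np.log(probs) + (1.0 - y) * np.log(1.0 - probs))
    return float(per_label[mask].mean())
```

Because the masked entries never enter the mean, predictions for unannotated action units neither add to the loss nor receive a gradient in a framework that differentiates it.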

Unique Class Group Based Multi-Label Balancing Optimizer for Action Unit Detection

Mar 05, 2020

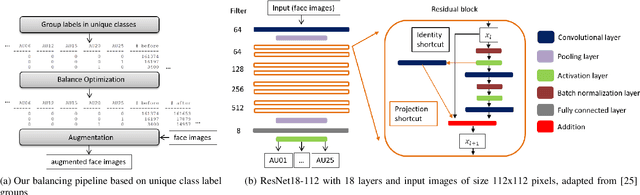

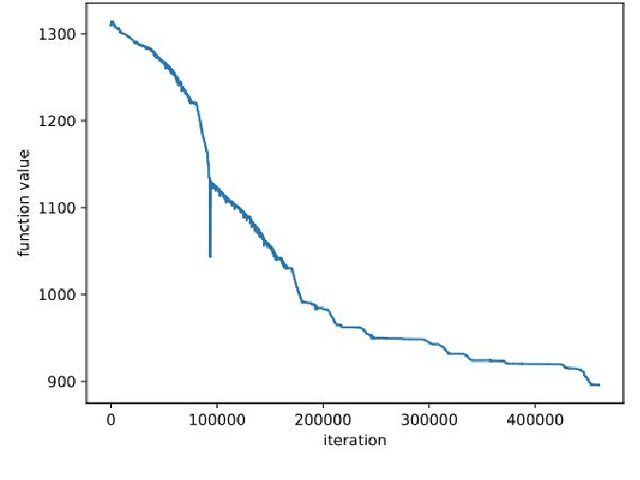

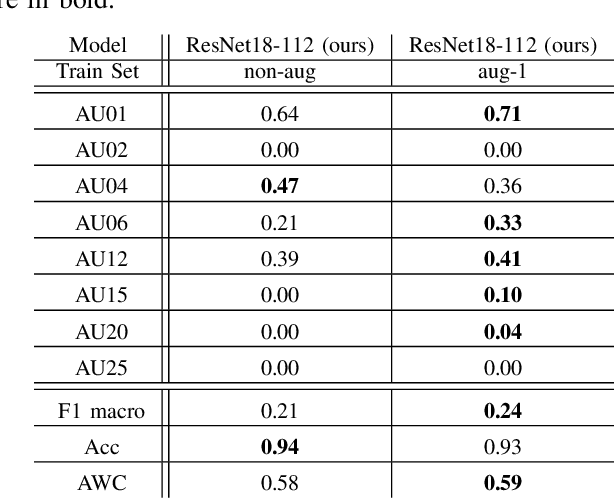

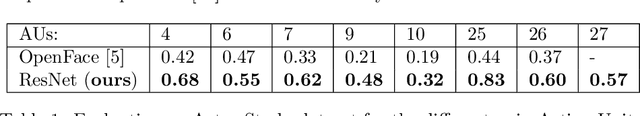

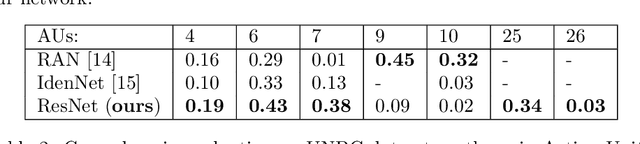

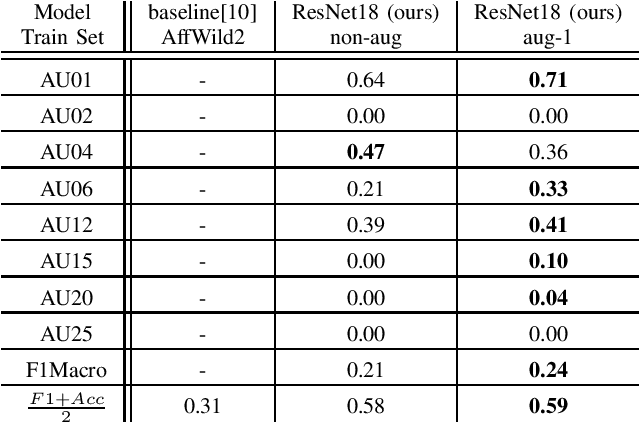

Abstract: Balancing methods for single-label data cannot be applied to multi-label problems as they would also resample the samples with high occurrences. We propose to reformulate this problem as an optimization problem in order to balance multi-label data. We apply this balancing algorithm to training datasets for detecting isolated facial movements, so-called Action Units. Several Action Units can describe combined emotions or physical states such as pain. As datasets in this area are limited and mostly imbalanced, we show how optimized balancing followed by augmentation can improve Action Unit detection. At the IEEE Conference on Face and Gesture Recognition 2020, we ranked third in the Affective Behavior Analysis in-the-wild (ABAW) challenge for the Action Unit detection task.
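To illustrate why multi-label balancing is naturally an optimization problem, the sketch below picks per-sample repetition counts that reduce the spread of per-class positive counts. It is a simple greedy heuristic under assumed conventions (binary label matrix, standard deviation as the imbalance measure), not the optimizer proposed in the paper; `greedy_balance` and `max_extra` are hypothetical names.

```python
import numpy as np

def greedy_balance(labels, max_extra):
    """Return how often each sample should appear in the balanced set.

    labels:    (n_samples, n_classes) binary multi-label matrix.
    max_extra: upper bound on the number of duplicated samples.
    """
    labels = np.asarray(labels, dtype=float)
    counts = np.ones(labels.shape[0], dtype=int)  # every sample is kept once
    totals = labels.sum(axis=0)                   # positives per class
    for _ in range(max_extra):
        # Imbalance after duplicating each candidate sample once more.
        spread = (totals + labels).std(axis=1)
        best = int(spread.argmin())
        if spread[best] >= totals.std():
            break                                 # no duplicate improves balance
        counts[best] += 1
        totals += labels[best]
    return counts

# Class 0 has 3 positives and class 1 has 2; duplicating the last sample evens them out.
labels = np.array([[1, 0], [1, 0], [1, 1], [0, 1]])
counts = greedy_balance(labels, max_extra=10)
```

Duplicating the third sample would raise both class counts at once, which is the co-occurrence effect that makes single-label resampling unsuitable here; the heuristic avoids it because it scores whole label vectors rather than individual classes.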

Verifying Deep Learning-based Decisions for Facial Expression Recognition

Feb 14, 2020

Abstract: Neural networks with high performance can still be biased towards non-relevant features. However, reliability and robustness are especially important for high-risk fields such as clinical pain treatment. We therefore propose a verification pipeline, which consists of three steps. First, we classify facial expressions with a neural network. Next, we apply layer-wise relevance propagation to create pixel-based explanations. Finally, we quantify these visual explanations based on a bounding-box method with respect to facial regions. Although our results show that the neural network achieves state-of-the-art results, the evaluation of the visual explanations reveals that relevant facial regions may not be considered.
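The third step, quantifying a pixel-wise explanation against a facial region, can be sketched as the share of positive relevance that falls inside a bounding box. This is one plausible reading of a bounding-box measure, not the paper's exact metric; the function name, the box convention, and the choice to ignore negative relevance are assumptions.

```python
import numpy as np

def relevance_in_box(relevance, box):
    """Fraction of positive relevance that lies inside a bounding box.

    relevance: (height, width) relevance map, e.g. from layer-wise
               relevance propagation.
    box:       (top, left, bottom, right) in pixels, with bottom and
               right exclusive (assumed convention).
    """
    positive = np.clip(np.asarray(relevance, dtype=float), 0.0, None)
    total = positive.sum()
    if total == 0:
        return 0.0                    # no positive evidence anywhere
    top, left, bottom, right = box
    return float(positive[top:bottom, left:right].sum() / total)
```

A value near 1 would indicate that the classifier's evidence is concentrated in the chosen facial region, while a low value would flag relevance placed elsewhere in the image.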

Multi-Label Class Balancing Algorithm for Action Unit Detection

Feb 08, 2020

Abstract: Isolated facial movements, so-called Action Units, can describe combined emotions or physical states such as pain. As datasets are limited and mostly imbalanced, we present an approach incorporating a multi-label class balancing algorithm. This submission is subject to the Action Unit detection task of the Affective Behavior Analysis in-the-wild (ABAW) challenge at the IEEE Conference on Face and Gesture Recognition 2020.
